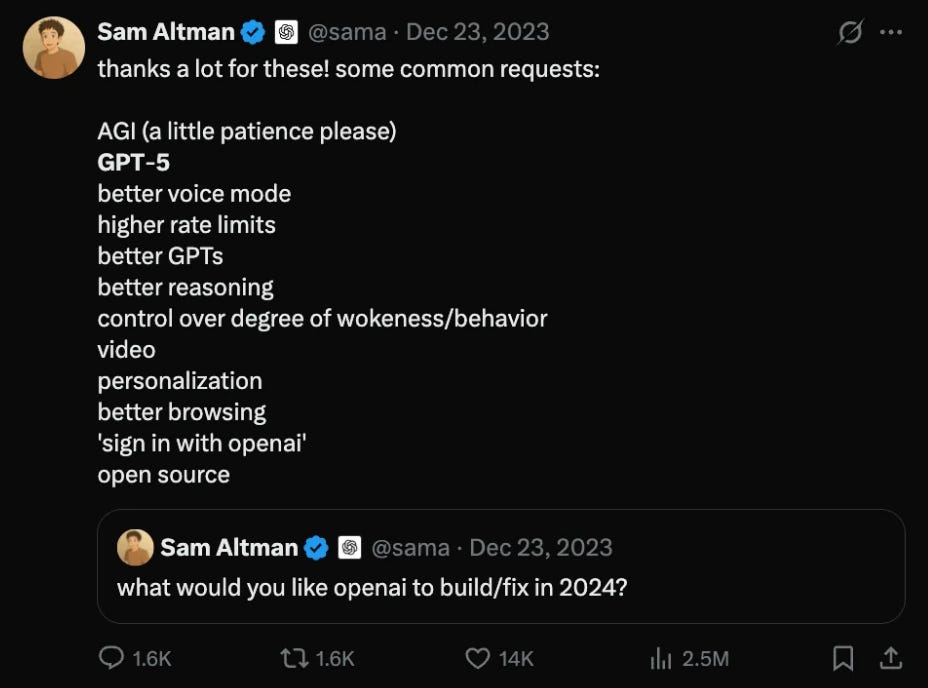

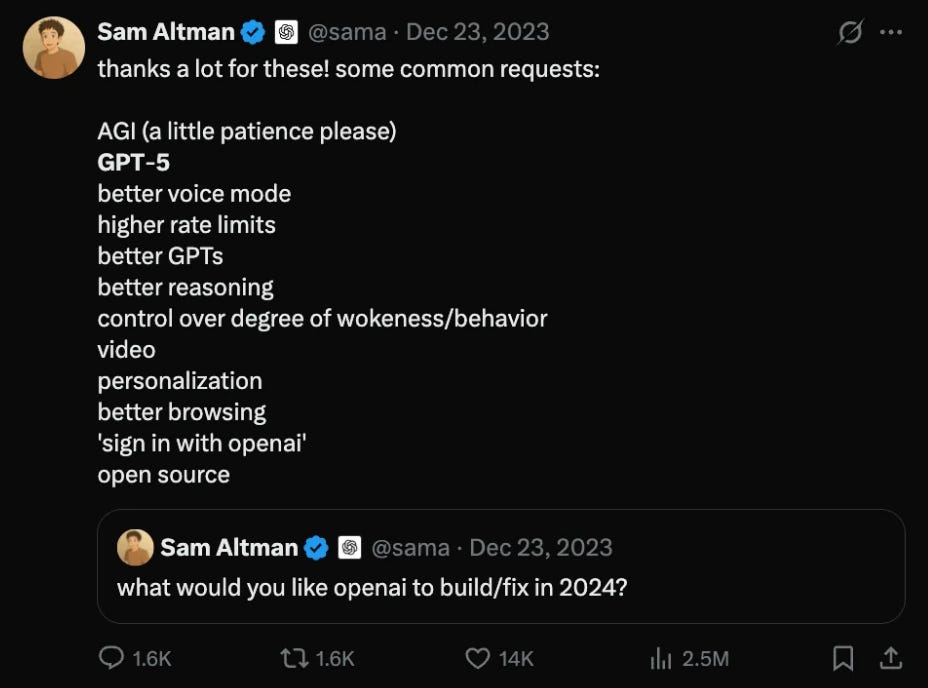

Chat GPT-5 Drops Today

- Thread starter KingDanz

DaKidFromNoWhere

Superstar

Well when I wake up I will check it out

Belize King

I got concepts of a plan.

1pm est

Black smoke and cac jokes

All Black Everything

my advice, do not use chat-gpt or any other AI tool without a VPN.

The Plug

plug couldnt trust you now u cant trust the plug

my advice, do not use chat-gpt or any other AI tool without a VPN.

What difference does that make if the info you're putting is sensitive?

James Cameron: ‘There’s Danger’ of a ‘Terminator’-Style Apocalypse Happening If You ‘Put AI Together With Weapons Systems’

James Cameron says the possibility of a nuclear holocaust a la 'The Terminator' franchise is very real if AI becomes weaponized.

Black smoke and cac jokes

All Black Everything

What difference does that make if the info you're putting is sensitive?

I'm hoping no one on the coli is dumb enough to put personal information into any AI tool.

1/2

@sama

GPT-5 livestream in 2 minutes!

[Quoted tweet]

wen GPT-5? In 10 minutes.

openai.com/live

2/2

@indi_statistics

AI vs Human Benchmarks (2025 leaderboards):-

1. US Bar Exam – GPT-4.5: 92%, Avg Human: 68%

2. GRE Verbal – Claude 3: 98%, Avg Human: 79%

3. Codeforces – GPT-4.5: 1700+, Avg Human: 1500

4. Math Olympiad (IMO-level) – GPT-4: 65%, Top Human: 100%

5. SAT Math – Gemini 1.5: 98%, Avg Human: 81%

6. USMLE Step 1 – GPT-4: 89%, Human Avg: 78%

7. LSAT – Claude 3: 93%, Human Avg: 76%

8. Common Sense QA – GPT-4: 97%, Human: 95%

9. Chess Rating – LLMs: ~1800, Avg Human: ~1400

10. Creative Writing – Claude 3.5 > Human (via blind tests)

(Source: LMSYS, OpenAI evals, 2025)

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/1

@TechCrunch

What does OpenAI say makes their GPT-5 model different?

A focus on agentic abilities

Better vibe coding capabilities

“better taste” in creative tasks (we'll see...)

Greater accuracy

Improved safety

And you can try it for yourself today: OpenAI's GPT-5 is here | TechCrunch

1/1

@carlvellotti

GPT-5 benchmarks just dropped

– much better at coding

– visual reasoning higher than human phds

– huge drop in hallucination

We'll see how these benchmarks play out, but they look crazy

1/5

@bindureddy

GPT-5 Specs Are Extraordinary

OpenAI's flagship model for coding, reasoning, and agentic tasks across domains.

- 400k input context window

- 128k max output tokens

- web search, image gen, MCP supported in responses API

- fine-tuning is supported

- mind-blowing pricing

2/5

@sachinmaya1980

Cool

3/5

@TheNishantSingh

Yup

[Quoted tweet]

OpenAI just quietly dropped GPT-5 into ChatGPT and made it free for everyone.

Yes, even for free users.

Here’s what’s new, and why it matters:

Faster than GPT-4o

Smarter than GPT-4-turbo (o3)

Better UI/UX

Insanely good reasoning & planning

Out of ~700M weekly users, most have only used GPT 4o.

Now? The masses just got a major intelligence upgrade.

GPT-5 doesn’t just generate text, it thinks.

It weaves analogies you'd never expect, tells stories with twists and setups, and even digs up niche insights during deep research.

Not just a chatbot anymore, it’s an author, planner, analyst, and creative partner all in one.

4/5

@FutureTechTrain

It’s available to free tier users as well with limits.

5/5

@Latin0Patri0t

Wow…they are making it available for free users too ….
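For anyone planning API calls around the limits quoted in the first tweet of this thread (400k input context, 128k max output), a rough pre-flight check might look like the sketch below. The two constants simply restate the tweet's numbers and are not verified against OpenAI's documentation, and `fits_budget` is a hypothetical helper name, not part of any SDK.

```python
# Limits as quoted in the tweet above; treat them as assumptions, not official values.
MAX_INPUT_TOKENS = 400_000
MAX_OUTPUT_TOKENS = 128_000


def fits_budget(input_tokens: int, requested_output_tokens: int) -> bool:
    """Return True if a hypothetical request stays inside both quoted limits."""
    return (
        0 <= input_tokens <= MAX_INPUT_TOKENS
        and 0 < requested_output_tokens <= MAX_OUTPUT_TOKENS
    )


if __name__ == "__main__":
    print(fits_budget(350_000, 8_000))   # inside both limits
    print(fits_budget(450_000, 8_000))   # input too large
    print(fits_budget(10_000, 200_000))  # output request too large
```

Token counts would have to come from a real tokenizer; the integers here are placeholders.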

1/8

@ArtificialAnlys

OpenAI gave us early access to GPT-5: our independent benchmarks verify a new high for AI intelligence. We have tested all four GPT-5 reasoning effort levels, revealing 23x differences in token usage and cost between the ‘High’ and ‘Minimal’ options and substantial differences in intelligence

We have run our full suite of eight evaluations independently across all reasoning effort configurations of GPT-5 and are reporting benchmark results for intelligence, token usage, cost, and end-to-end latency.

What @OpenAI released: OpenAI has released a single endpoint for GPT-5, but different reasoning efforts offer vastly different intelligence. GPT-5 with reasoning effort “High” reaches a new intelligence frontier, while “Minimal” is near GPT-4.1 level (but more token efficient).

Takeaways from our independent benchmarks:

Reasoning effort configuration: GPT-5 offers four reasoning effort configurations: high, medium, low, and minimal. Reasoning effort options steer the model to “think” more or less hard for each query, driving large differences in intelligence, token usage, speed, and cost.

Intelligence achieved ranges from frontier to GPT-4.1 level: GPT-5 sets a new standard with a score of 68 on our Artificial Analysis Intelligence Index (MMLU-Pro, GPQA Diamond, Humanity’s Last Exam, LiveCodeBench, SciCode, AIME, IFBench & AA-LCR) at High reasoning effort. Medium (67) is close to o3, Low (64) sits between DeepSeek R1 and o3, and Minimal (44) is close to GPT-4.1. While High sets a new standard, the increase over o3 is not comparable to the jump from GPT-3 to GPT-4 or GPT-4o to o1.

Cost & token usage varies 27x between reasoning efforts: GPT-5 with High reasoning effort used more tokens than o3 (82M vs. 50M) to complete our Index, but still fewer than Gemini 2.5 Pro (98M) and DeepSeek R1 0528 (99M). However, Minimal reasoning effort used only 3.5M tokens which is substantially less than GPT-4.1, making GPT-5 Minimal significantly more token-efficient for similar intelligence. Because there are no differences in the per-token price of GPT-5, this 27x difference in token usage between High and Minimal translates to a 23x difference in cost to run our Intelligence Index.

Long Context Reasoning: We released our own Long Context Reasoning (AA-LCR) benchmark earlier this week to test the reasoning capabilities of models across long sequence lengths (sets of documents ~100k tokens in total). GPT-5 stands out for its performance in AA-LCR, with GPT-5 in both High and Medium reasoning efforts topping the benchmark.

Agentic capabilities: OpenAI also commented on improvements across capabilities increasingly important to how AI models are used, including agents (long horizon tool calling). We recently added IFBench to our Intelligence Index to cover instruction following and will be adding further evals to cover agentic tool calling to independently test these capabilities.

Vibe checks: We’re testing the personality of the model through MicroEvals on our website which supports running the same prompt across models and comparing results. It’s free to use, we’ll provide an update with our perspective shortly but feel free to share your own!

See below for further analysis

2/8

@ArtificialAnlys

Token usage (verbosity): GPT-5 with reasoning effort high uses 23X more tokens than with reasoning effort minimal. Though in doing so achieves substantial intelligence gains, between medium and high there is less of an uplift.

3/8

@ArtificialAnlys

Individual intelligence benchmark results: GPT-5 performs well across our intelligence evaluations, all run independently

4/8

@ArtificialAnlys

Long context reasoning performance: A stand out is long context reasoning performance as shown by our AA-LCR evaluation whereby GPT-5 occupies the #1 and #2 positions.

5/8

@ArtificialAnlys

Further benchmarks on Artificial Analysis:

https://artificialanalysis.ai/model...m-4.5,qwen3-235b-a22b-instruct-2507-reasoning

6/8

@FinanceQuacker

It's very impressive, but not as big of a jump as we expected

I feel like lower rates of hallucinations are the biggest benefits (assuming the OpenAI Hallucination tests reflect into better performance in daily use)

7/8

@Tsucks6432

8/8

@alemdar6140

Wow, GPT-5 already? @DavidNeomi873, you seeing this? The cost difference between ‘High’ and ‘Minimal’ effort is wild—23x! Wonder how that plays out in real-world use. Benchmarks sound impressive though.
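To sanity-check the 'High' vs 'Minimal' gap discussed in this thread, here is a quick back-of-the-envelope calculation using only the token counts quoted above (82M and 3.5M). It assumes cost scales linearly with total tokens at one flat per-token price, which is a simplification since input and output tokens are usually priced differently. The variable and function names are just illustrative.

```python
# Token counts (in millions) as reported in the Artificial Analysis thread above.
TOKENS_MILLIONS = {"high": 82.0, "minimal": 3.5}


def usage_ratio(high: float, minimal: float) -> float:
    """Ratio of tokens used at High effort to tokens used at Minimal effort."""
    return high / minimal


ratio = usage_ratio(TOKENS_MILLIONS["high"], TOKENS_MILLIONS["minimal"])

# Under the flat-price assumption, the cost ratio equals the token ratio.
print(f"High vs Minimal token usage: {ratio:.1f}x")
```

The ratio works out to roughly 23x, which lines up with the headline figure in the thread rather than the 27x mentioned in one of the takeaways.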

1/1

@mssawan

Tested @OpenAI #GPT-5 and here’s what’s next-level:

Arabic Texts:

* GPT-3.5: All over the place—accuracy ranged from 15% to 70% across my tasks.

* GPT-4: Big leap—hit 65% to 99% (only one task hit 99%; others landed lower).

* GPT-5: Straight 100% on every benchmark I threw at it—identifying chapters, verses, verifying authenticity, fill-in-the-blanks. First time I’ve seen that.

Reasoning & Efficiency:

* GPT-5 dynamically chooses how much “thinking” to do. Some answers in 15 seconds, some in 5 minutes—responds to the challenge.

* No more endless hallucinations. Real accuracy boost.

Personal “Mini-AGI” Test:

* Threw my secret childhood Arabic code at it (no models ever solved before even with extensive guidance).

* GPT-5: 80%+ right, no guidance. With help, even better. It started riffing so hard I had to check my own rules.

Coding - most exciting - small scale vibe test:

* GPT-5 > Claude for hands-on code:

* Challenges your mistakes

* Changes direction without getting lost

* Handles huge codebases

* It’s the difference between a mid-level and a true senior engineer.

* Frontend is MUCH better than Codex and older OpenAI models!

Short version: #GPT5 is THE REAL DEAL.

1/1

@DrDatta_AIIMS

GPT 5 is crazzzzzyyy!

Time to benchmark!!!

Lessssgooooo!

https://video.twimg.com/amplify_video/1953480646808289280/vid/avc1/3840x2160/zRusAGJl-X-VL_AR.mp4

True paradigm shift.

Great article that just came out today:

GPT-5 Hands-On: Welcome to the Stone Age

We're excited to publish our hands-on review from the developer beta.

www.latent.space

Some interesting quotes:

“We were dealing with gnarly nested dependency conflicts adding Vercel’s AI SDK v5 and Zod 4 to our codebase. o3 + Cursor couldn’t figure it out, Claude Code + Opus 4 couldn’t figure it out.

GPT-5 one-shotted it. It was honestly beautiful to watch and instantly made the model “click” for me.”

“Claude Opus 4 is good as ever at coding and got to work immediately quickly taking action to create the project + scaffolding. Opus 4 gave me a more fun and gamified UI, but unlike GPT-5 which used existing frameworks like create-next-app and included a SQLite database, Opus 4 decided to do everything from scratch and didn’t include a database. This makes for a good one-shot prototype, but what GPT-5 one-shotted was much closer to production ready.”

“While GPT-5 continues to work its way up the SWE ladder, it’s really not a great writer. GPT 4.5 and DeepSeek R1 are still much better. (Maybe OpenAI will just add a writing tool call that calls on a dedicated, writing model - they have teased their Creative Writing model and we’d really like to see it!)

Looking at business writing like improving my LinkedIn posts, GPT-4.5 stays much truer to my tone and gives me portions of text I’d actually use, vs. GPT-5’s more ‘LinkedIn-slop’ style response.”

“I think GPT-5 is unequivocally the best coding model in the world. We were probably around 65% of the way through automating software engineering, and now we might be around 72%. To me, it’s the biggest leap since 3.5 Sonnet.

I’m extremely curious to find out how everyone else will receive the model. My guess is that most non-developers will not get it for a few months. We’ll have to wait for these models to be integrated into products.”

My take is that this has transformed the landscape for entry to mid-level software development for good. It hasn’t killed the career, but going forward, I see senior engineers becoming more like software architects, and entry to mid-level engineers mostly cleaning up and packaging software that is already close to production level, rather than doing the actual building.

to OpenAI, I don’t think there’s a startup doing it like them right now. This work rate is reminiscent of Apple in the 80s, late 90s/early 2000s.

Can't wait