Meta's 'Make-A-Scene' AI blends human and computer imagination into algorithmic art | Engadget

On Tuesday, Meta revealed that it has developed an AI image generation engine, dubbed "Make-A-Scene", that it hopes will lead to immersive worlds in the Metaverse and the emergence of digital high art.

It combines cutting-edge text-to-image generation with hand-drawn sketch inputs.

A. Tarantola

@terrortola

July 14, 2022 7:00 AM


Text-to-image generation is the hot algorithmic process right now, with Craiyon (formerly DALL-E mini) and Google’s Imagen AIs unleashing tidal waves of wonderfully weird procedurally generated art synthesized from human and computer imaginations. On Tuesday, Meta revealed that it too has developed an AI image generation engine, one that it hopes will help to build immersive worlds in the Metaverse and create high digital art.

A lot of work goes into creating an image based on just the phrase, “there's a horse in the hospital,” when using a generative AI. First, the phrase itself is fed through a transformer model, a neural network that parses the words of the sentence and develops a contextual understanding of their relationship to one another. Once it gets the gist of what the user is describing, the AI will synthesize a new image using a set of GANs (generative adversarial networks).

Thanks to efforts in recent years to train ML models on increasingly expansive, high-definition image sets with well-curated text descriptions, today’s state-of-the-art AIs can create photorealistic images of most whatever nonsense you feed them. The specific creation process differs between AIs.


For example, Google’s Imagen uses a diffusion model, “which learns to convert a pattern of random dots to images,” per a June post on Google’s Keyword blog. “These images first start as low resolution and then progressively increase in resolution.” Google’s Parti AI, on the other hand, “first converts a collection of images into a sequence of code entries, similar to puzzle pieces. A given text prompt is then translated into these code entries and a new image is created.”
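The "puzzle pieces" idea is easier to picture with a toy example. The Python sketch below is not Parti's code. It assumes a hypothetical three-entry codebook and four-pixel grayscale patches, and it simply swaps each patch for the index of the closest codebook entry, which is the general idea behind turning an image into a sequence of code entries.

```python
# Toy illustration only. The codebook, patch size, and function names are
# made up for this sketch and do not come from Google's Parti.

def nearest_code(patch, codebook):
    """Return the index of the codebook entry closest to the patch."""
    def sq_dist(a, b):
        # Squared Euclidean distance between two equal-length tuples.
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(range(len(codebook)), key=lambda i: sq_dist(patch, codebook[i]))

def encode(patches, codebook):
    """Turn a list of image patches into a sequence of code indices."""
    return [nearest_code(p, codebook) for p in patches]

def decode(codes, codebook):
    """Rebuild approximate patches by looking each code up in the codebook."""
    return [codebook[i] for i in codes]

# Hypothetical codebook: each entry is one "puzzle piece" of four pixels.
CODEBOOK = [
    (0.0, 0.0, 0.0, 0.0),  # entry 0: dark patch
    (1.0, 1.0, 1.0, 1.0),  # entry 1: bright patch
    (0.0, 1.0, 0.0, 1.0),  # entry 2: striped patch
]

# Three noisy patches cut from an imaginary image.
patches = [
    (0.1, 0.0, 0.2, 0.1),
    (0.9, 1.0, 0.8, 1.0),
    (0.1, 0.9, 0.0, 0.8),
]

codes = encode(patches, CODEBOOK)
reconstruction = decode(codes, CODEBOOK)
print(codes)  # [0, 1, 2]
```

In a real system the codebook is learned from a large image collection and holds thousands of entries, and a separate model learns to predict the code sequence from the text prompt. The sketch covers only the lookup step.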

While these systems can create most anything described to them, the user doesn’t have any control over the specific aspects of the output image. “To realize AI’s potential to push creative expression forward,” Meta CEO Mark Zuckerberg stated in Tuesday’s blog, “people should be able to shape and control the content a system generates.”

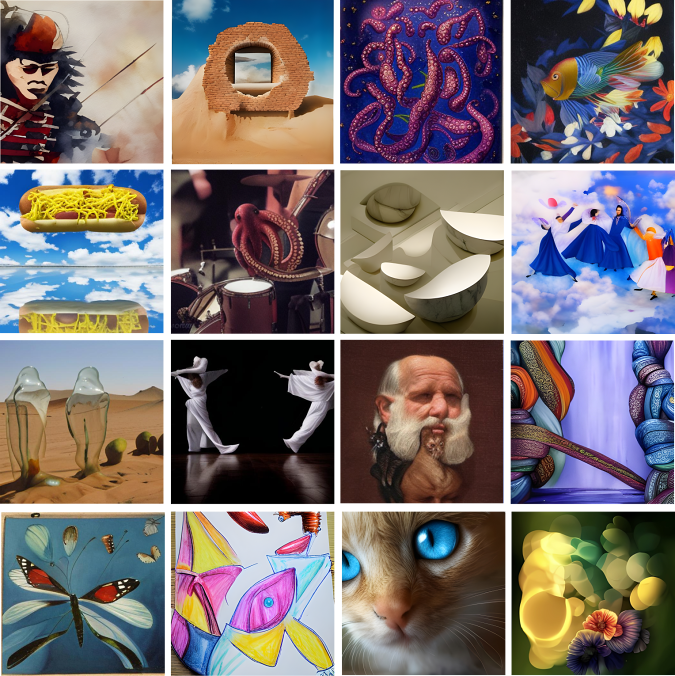

The company’s “exploratory AI research concept,” dubbed Make-A-Scene, does just that by incorporating user-created sketches into its text-based image generation, outputting a 2,048 x 2,048-pixel image. This combination allows the user to not just describe what they want in the image but also dictate the image’s overall composition. “It demonstrates how people can use both text and simple drawings to convey their vision with greater specificity, using a variety of elements, forms, arrangements, depth, compositions, and structures,” Zuckerberg said.

In testing, a panel of human evaluators overwhelmingly chose the text-and-sketch image over the text-only image as better aligned with the original sketch (99.54 percent of the time) and as better aligned with the original text description (66 percent of the time). To further develop the technology, Meta has shared its Make-A-Scene demo with prominent AI artists including Sofia Crespo, Scott Eaton, Alexander Reben, and Refik Anadol, who will use the system and provide feedback. There’s no word on when the AI will be made available to the public.