1/6

@ai_for_success

I repeat world is not ready....

The most terrifying paragraph from Sam Altman's new blog, Three Observations:

"But the future will be coming at us in a way that is impossible to ignore, and the long-term changes to our society and economy will be huge. We will find new things to do, new ways to be useful to each other, and new ways to compete, but they may not look very much like the jobs of today."

[Quoted tweet]

So we finally have a definition of AGI from OpenAI and Sam Altman:

"AGI is a weakly defined term, but generally speaking, we mean it to be a system that can tackle increasingly complex problems at a human level in many fields."

Can we call this AGI ??

Sam Altman Three Observations.

2/6

@airesearchtools

What is the time frame we’re talking about? Assuming superintelligence is achieved, will we allow machines to make decisions? If humans remain the decision-makers, there will still be people working because those decisions will need to be made. Moreover, as the tasks we currently perform become simpler, we will likely have to take on even more complex decisions.

And when it comes to manual labor, will robots handle it? Will each of us have a personal robot? How long would it take to produce 8 billion robots? The truth is, I struggle to clearly visualize that future. And when I try, I can’t help but think of sci-fi movies and books where humans aren’t exactly idle.

3/6

@victor_explore

we're all pretending to be ready while secretly googling "how to survive the ai apocalypse" at 3am

4/6

@patrickDurusau

Well, yes and no. Imagine Sam was writing about power looms or the soon to be invented cotton gin.

He phrases it in terms of human intelligence but it's more akin to a mechanical calculator or printer.

VCs will be poorer and our jobs will change, but we'll learn new ones.

5/6

@MillenniumTwain

Public Sector 'AI' is already more than Two Decades behind Private/Covert sector << AGI >>, and all Big (Fraud) Tech is doing is accelerating the Dumb-Down of our Victim, Slave, Consumer US Public, and World!

[Quoted tweet]

"Still be Hidden behind Closed Doors"? Thanks to these Covert Actors (Microsoft, OpenAI, the NSA, ad Infinitum) — More and More is Being Hidden behind Closed Doors every day! The ONLY 'forward' motion being their exponentially-accelerated Big Tech/Wall Street HYPE, Fraud, DisInfo ...

6/6

@MaktabiJr

What will be the currency in that world? What’s the price of things in that world? Or will AGI decide for us how to live equally, giving each human equal credit?

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/9

@ai_for_success

"Anyone in 2035 should be able to marshal the intellectual capacity equivalent to everyone in 2025." – Sam Altman

The next 10 years will be the most exciting, even if this statement holds true for just 10%.

2/9

@jairodri

Funny how my goats figured out AI-level problem solving years ago - they've been outsmarting my fence systems since before ChatGPT was cool

3/9

@victor_explore

the greatest wealth transfer won't be in dollars, but in cognitive capabilities going from the few to the many

4/9

@bookwormengr

Sama has the writing style of people who are celebrated as messengers of God.

Never bet against Sama, he has delivered.

5/9

@CloudNexgen

Absolutely! The progress we'll see in the next decade is bound to be phenomenal

6/9

@tomlikestocode

Even if we achieve just a fraction of this, the impact would be profound. The real challenge is ensuring it benefits everyone equitably.

7/9

@MaktabiJr

I think he already reached that. Whatever he’s developing is something that knows how to control all of this.

8/9

@Lux118736073602

Mark my words it will happen a lot sooner than in the year 2035

9/9

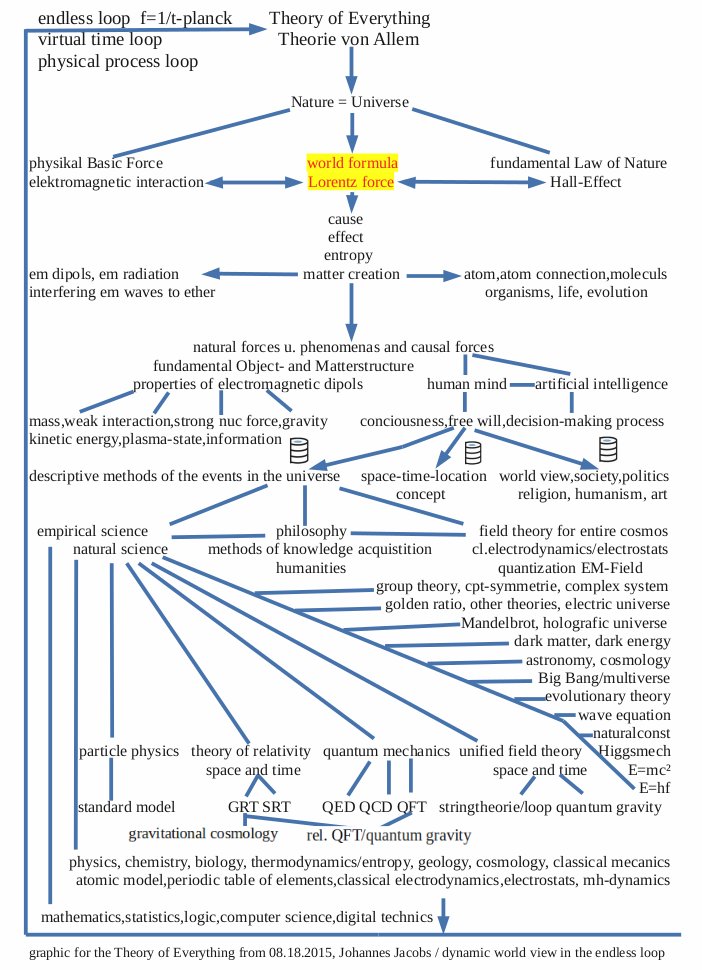

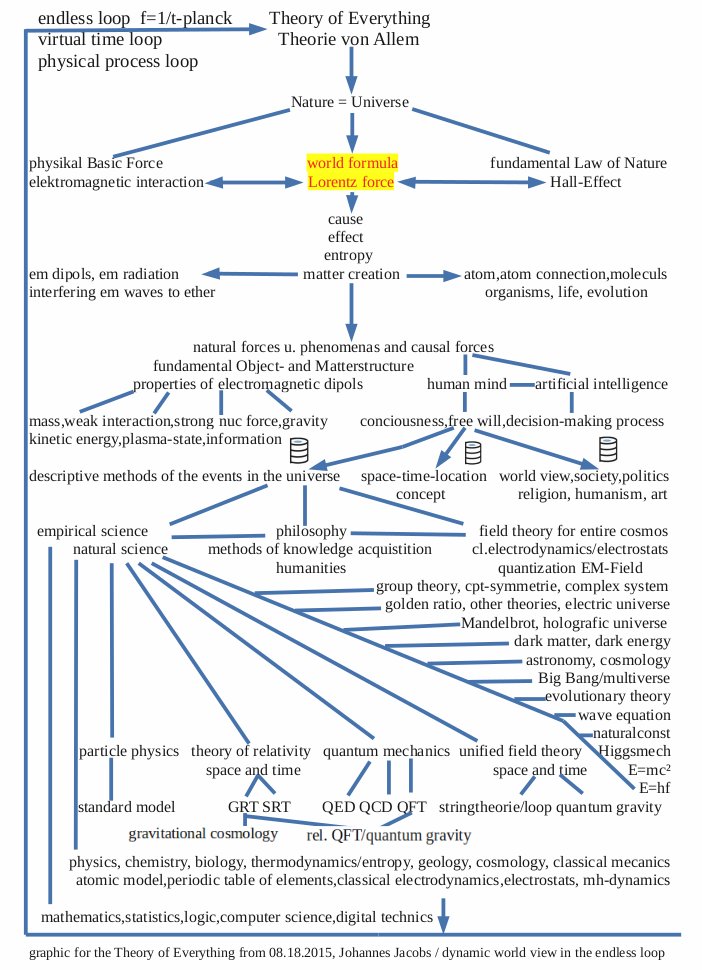

@JohJac7

AI is an integral part of the theory of everything
