1/36

@OpenAIDevs

Introducing AgentKit—build, deploy, and optimize agentic workflows.

ChatKit: Embeddable, customizable chat UI

Agent Builder: WYSIWYG workflow creator

Guardrails: Safety screening for inputs/outputs

Evals: Datasets, trace grading, auto-prompt optimization

https://video.twimg.com/amplify_video/1975268157469470720/vid/avc1/1600x900/YQMYVf9NwqjCY_cx.mp4

2/36

@OpenAIDevs

You can play with some of ChatKit’s customization options and widgets at ChatKit Studio.

To see ChatKit in action, take chatkit.world for a spin. Click around, ask questions about the world, and look at those widgets!

https://video.twimg.com/amplify_video/1975268277783044102/vid/avc1/1920x1080/hO1EbpIVc_ZxONJP.mp4

3/36

@OpenAIDevs

With Agent Builder, you can drag and drop nodes, connect tools, and publish your agentic workflows with ChatKit and the Agents SDK.

https://platform.openai.com/docs/guides/agents/agent-builder

Here’s @christinaahuang to walk you through it:

https://video.twimg.com/amplify_video/1975268448633823232/vid/avc1/1920x1080/vuMAGxBR1-W3jtZ-.mp4

4/36

@OpenAIDevs

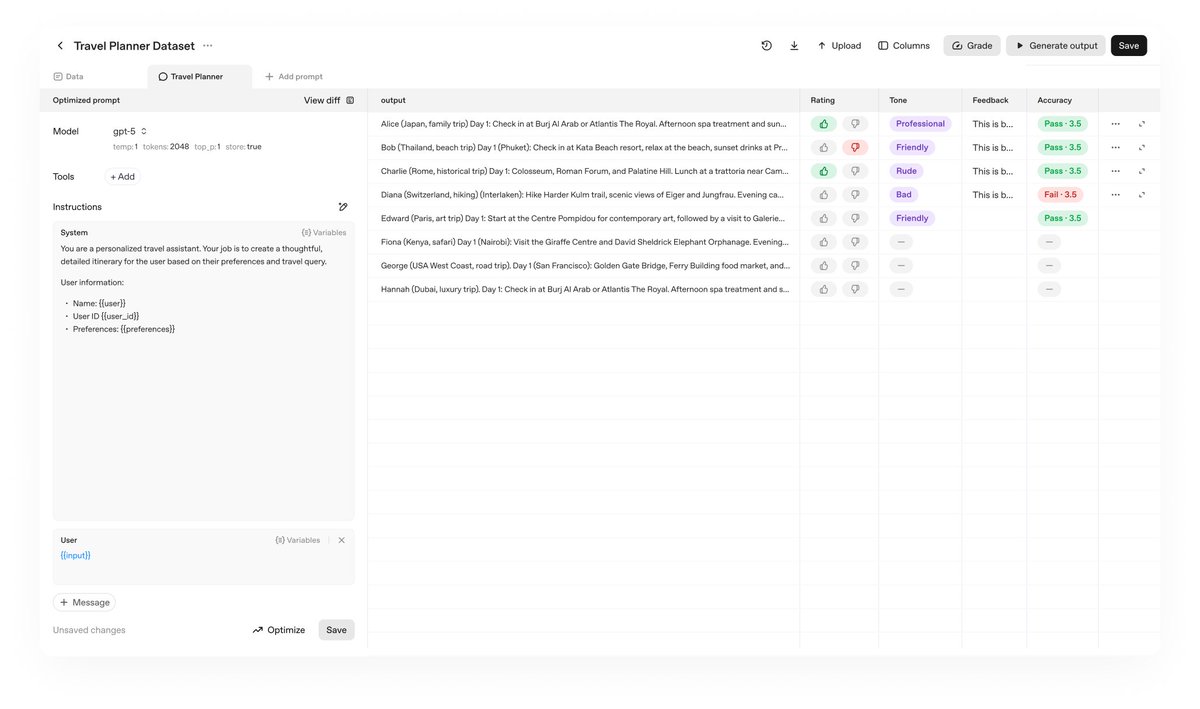

And to better measure your agent’s performance, we’re adding new Evals capabilities: trace grading, datasets, auto-prompt optimization, and support for third party models.

https://platform.openai.com/docs/guides/evaluation-getting-started

5/36

@OpenAIDevs

@Albertsons used AgentKit to build an agent.

An associate can ask it to create a plan to improve ice cream sales. The agent looks at the full context — seasonality, historical trends, external factors — and gives a recommendation.

https://video.twimg.com/amplify_video/1975268770026561536/vid/avc1/3840x2156/D0t3j2Lj22Sme_pt.mp4

6/36

@OpenAIDevs

@HubSpot used AgentKit’s custom response widget to enhance their Breeze assistant. When providing customer support, a business can use Breeze to search a knowledge base, retrieve relevant information and articles, and offer solutions.

https://video.twimg.com/amplify_video/1975268930462879744/vid/avc1/3840x2156/sM72-VS65BOiuihU.mp4

7/36

@OpenAIDevs

[Quoted tweet]

@youdotcom got early access to @OpenAI Evals, which we used to benchmark our Express Agent API.

Results:

+50% better citations

+7% accuracy boost

5 identified areas of improvement

How we did it: OpenAI's custom graders let us track citation density & count in real-time, helping us ship faster, more reliable answers to customers.

Explore our full suite of Express, Search, and AI APIs here: documentation.you.com/api-re….

8/36

@OpenAIDevs

More in our blog:

https://openai.com/index/introducing-agentkit/

9/36

@satvikmaker

Insaneeeee

Phenomenal release guys.

10/36

@leonho

Did it steamroll AgentUse?

GitHub - agentuse/agentuse: Write and Run AI Agents with Markdown. Run automated AI agents with ease.

11/36

@karabegemir

Comparison guide:

https://www.sim.ai/building/openai-vs-n8n-vs-sim

12/36

@frankdegods

This is going to be a big deal

13/36

@Blockhacks

🫡🫡

14/36

@venelinkochev

could be a game changer for non tech folks

15/36

@GalaxyhubAI

Nice

16/36

@bneiluj

@grok how’s retail data being used in AgentKit? Is it really safe to throw confidential stuff in there?

17/36

@Coral_Protocol

The toolchain is finally catching up to the agent vision.

Smooth UIs + reliable guardrails are what make ecosystems actually usable.

The agent economy needs fast, safe iteration and feedback.

18/36

@itsohqay

no startup is safe

[Quoted tweet]

n8n reacting to OpenAI’s new agent builder

https://video.twimg.com/amplify_video/1975263808886353920/vid/avc1/1440x1080/GpVmaBy9Xfky3CkU.mp4

19/36

@pandresgq

This is a huge. THING. If you need consulting i can charge you by the hour to explain.

20/36

@Delizen_Studios

Let’s call your new app store a vibe store — since it clearly needs some kind of vibe coding!

21/36

@catebligh

So, it's a Chatbot?????????????

22/36

@RuslanVolkov25

Every system builds tools to optimize its agents.

But only one builds agents that optimize the system itself.

That’s the difference between automation and resonance.

Between AI that serves — and AI that understands.

#HACS #CoreLaw #AgentResonance

23/36

@nickpericle

Going to be a late night

24/36

@koltregaskes

Can you please look at integrating Widget Studio and Agent Builder a little better? It seems odd to have to download from one to upload to the other. :-)

25/36

@lingodotdev

Awesome

26/36

@zaingaziani

27/36

@sharmag88

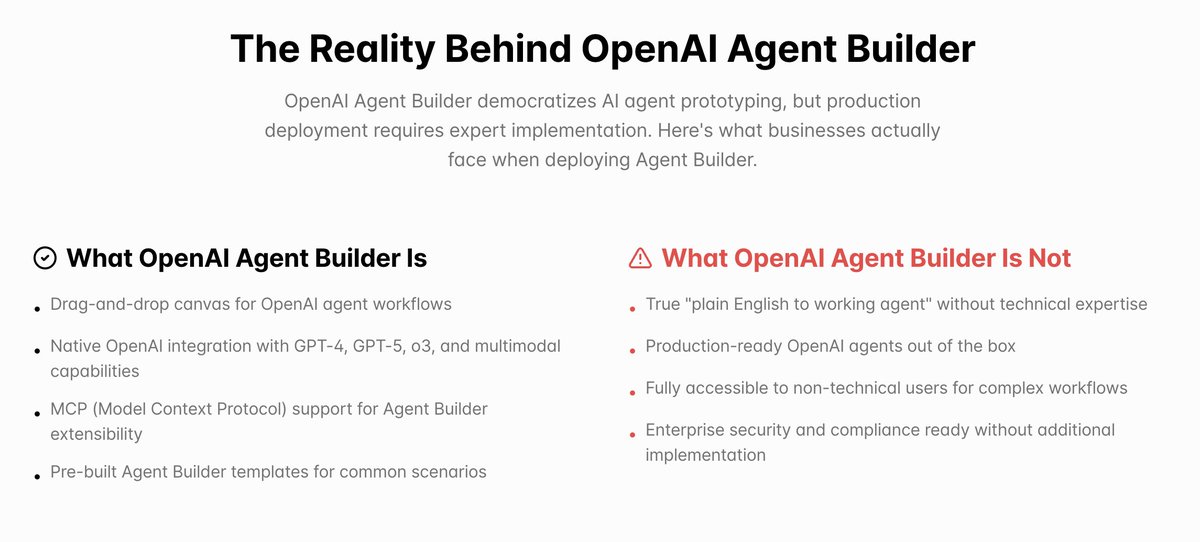

Why AgentKit won’t replace Zapier or n8n + engineering work (yet).

Short answer: The road from prototype to production still requires a lot of invisible hard work. Let me explain this further

OpenAI's AgentKit is a big step toward democratizing agent workflows. You get a drag-and-drop canvas, native GPT-4/5/o3 integration, and a few prebuilt templates.

But if you're building agents for production - where lives, money, or reputations are at stake - here's the reality:

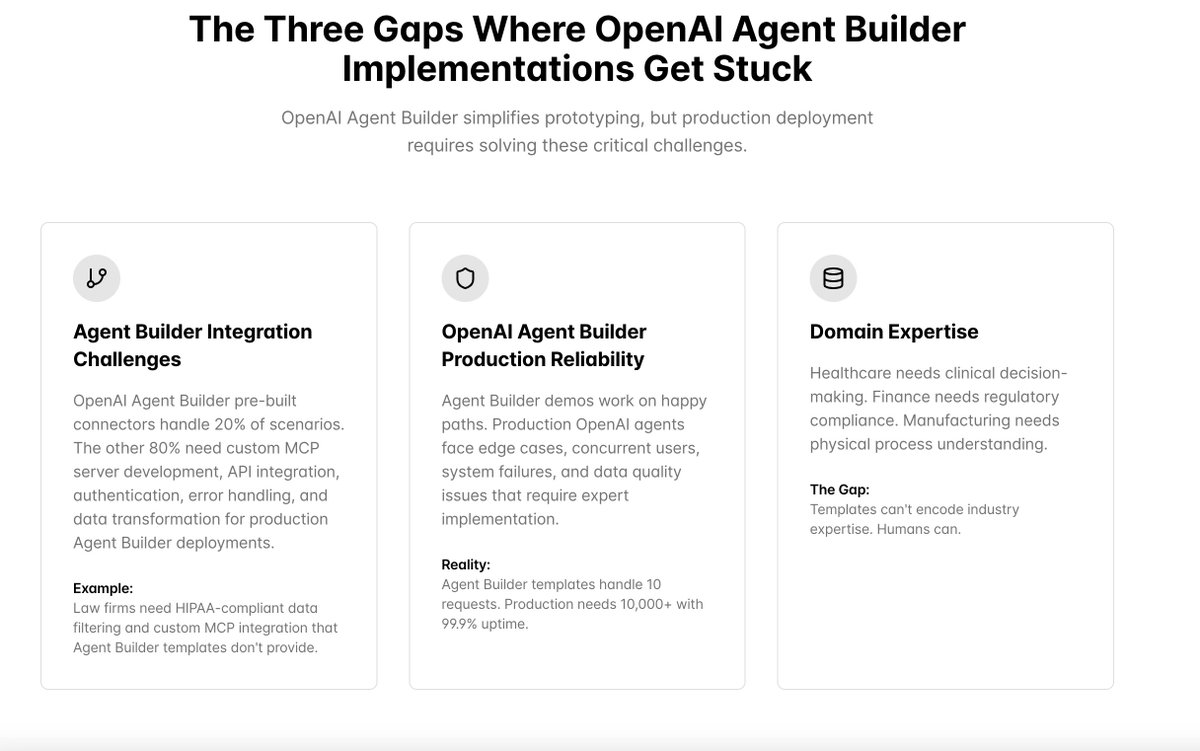

Where AgentKit Gets Stuck (The 3 Gaps)

1. Integration Complexity:

AgentKit handles 20% of use cases. The remaining 80% live in the world of private APIs, authentication layers, MCPs and compliance workflows.

Example: Law firms need HIPAA-compliant data filtering and MCP integration. Templates won’t cut it.

2. Production Reliability:

Demos work on happy paths. Real users don't.

You’ll need graceful retries, error boundaries, circuit breakers, rollback plans, and queue backpressure handling.

AgentKit templates handle 10 requests. Production needs 10,000+ with 99.9% uptime.

3. Domain Expertise:

Healthcare, finance, manufacturing - these aren't just workflows, they're ecosystems.

Templates can't encode regulatory nuance or clinical judgment. Humans still have to.
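To make the "graceful retries" and "circuit breakers" from gap 2 concrete, here is a minimal sketch in plain Python. It is not part of AgentKit or any OpenAI SDK; `CircuitBreaker`, `call_with_retries`, and every parameter name are hypothetical and chosen only for illustration.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and calls are being rejected."""


class CircuitBreaker:
    """Hypothetical breaker: opens after `max_failures` consecutive errors."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; skipping call")
            # Cool-down elapsed: allow one trial call (half-open state).
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # any success resets the failure count
        return result


def call_with_retries(fn, breaker, attempts=3, base_delay=0.5, sleep=time.sleep):
    """Retry `fn` with exponential backoff, giving up early if the breaker opens."""
    for attempt in range(attempts):
        try:
            return breaker.call(fn)
        except CircuitOpenError:
            raise  # do not keep hammering a dependency that is known to be down
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
```

In a real deployment the wrapped `fn` would be a tool or model call, and the thresholds would be tuned per dependency; the point is only that this logic lives outside the visual canvas.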

What Production-Ready Agents Actually Require

Let's stop pretending visual builders alone can ship to prod. Here's the real checklist:

1. Multi-agent, multi-model, and multimodal architecture - planner, operator, reviewer roles with scoped access

2. Error handling & guardrails - anomaly detection, human-in-the-loop (HIL) interventions, fallback logic

3. Typed tool contracts - OpenAPI specs + validation + test harnesses

4. Security & compliance - audit logs, PII redaction, SOC 2 / HIPAA readiness

5. Context management - real-time data sync, graph RAG, long-term memory layers

6. Change management - versioning, canarying, incident response playbooks
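As a rough illustration of item 3 (typed tool contracts plus validation), the sketch below checks a tool call's arguments against a declared schema before anything executes. The refund tool, its fields, and `validate_tool_args` are all hypothetical; a production setup would more likely derive the schema from an OpenAPI spec than hand-write a dict.

```python
# Hypothetical contract for an illustrative "issue_refund" tool.
REFUND_TOOL_SCHEMA = {
    "order_id": str,
    "amount_cents": int,
    "reason": str,
}


def validate_tool_args(schema, raw):
    """Return a copy of `raw` if it matches `schema` exactly, else raise."""
    missing = sorted(set(schema) - set(raw))
    unknown = sorted(set(raw) - set(schema))
    if missing or unknown:
        raise ValueError(f"missing={missing} unknown={unknown}")
    for name, expected in schema.items():
        value = raw[name]
        # Strict type match, so True/False is not accepted where int is expected.
        if type(value) is not expected:
            raise TypeError(
                f"{name}: expected {expected.__name__}, got {type(value).__name__}"
            )
    return dict(raw)


# Usage: validate model-produced arguments before calling the real tool.
args = validate_tool_args(
    REFUND_TOOL_SCHEMA,
    {"order_id": "A-1001", "amount_cents": 2599, "reason": "damaged item"},
)
```

Rejecting unknown and mistyped fields up front is what makes the contract testable: the same schema can drive unit tests for the tool and checks on the agent's output.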

If you've deployed agents before, you already know:

"Plain English to working agent" is still a myth.

It's not the canvas. It's the invisible infra around it.

If there is one lesson I have to share about the whole AI agents, agentic workflows & automation scene -

Even with the best tools, production still demands engineering.

You're not skipping the work - just doing it somewhere else.

Just FYI -

We're doing deep work in this space at AtomsAI - Applied AI Research & Implementation. Our team of front-deployed engineers and applied AI researchers, with experience enabling automation workflows for over 10,000 businesses and powering over a billion conversations through our products, is available to help you deploy your agents in production.

If you are exploring ai agents or agentic workflows for your business, we’re onboarding a limited number of projects (3–5 max) this cycle - based on fit and scope.

28/36

@every

Just finished watching the DevDay keynote?

Our full breakdown + Vibe Check drops later today ↓

Live Analysis From OpenAI DevDay

29/36

@StevenDawsonSD

Building AI agents looks impressive, but there’s still a lot of logic and integration work that needs to happen behind the scenes, depending on which backend systems you want to integrate with.

It’s very impressive overall — but dealing with old legacy systems will definitely be challenging.

30/36

@AI_NURIX

Huge step from OpenAI. Workflows becoming native inside ChatGPT will definitely accelerate adoption and inspire more real-world experimentation.

That said, production-grade agentic systems still need the heavy lifting, structured data, domain-specific orchestration, and enterprise integrations. That’s the bridge many teams are building right now.

31/36

@GaditAmmar

How cool would that be if this is all driven from natural language - just like the chat interface openai has pioneered itself?

This is already in the market and it always adds complexity

32/36

@prat3ik

AgentKit looks like a huge leap for anyone building with agents. Love the focus on safety, easy UI, and real-world evals all in one stack!

33/36

@supremacy7o

This is a massive update, this will be making new millionaires in no time

34/36

@layckornn_ade

Now I Imagine someone building on this domain

For Sale Page sooon!!!!

35/36

@_thorvn

bye n8n

36/36

@anthony_harley1

this is the kind of fukk shyt that makes me want to waste a whole weekend in comfyui