1/11

@_philschmid

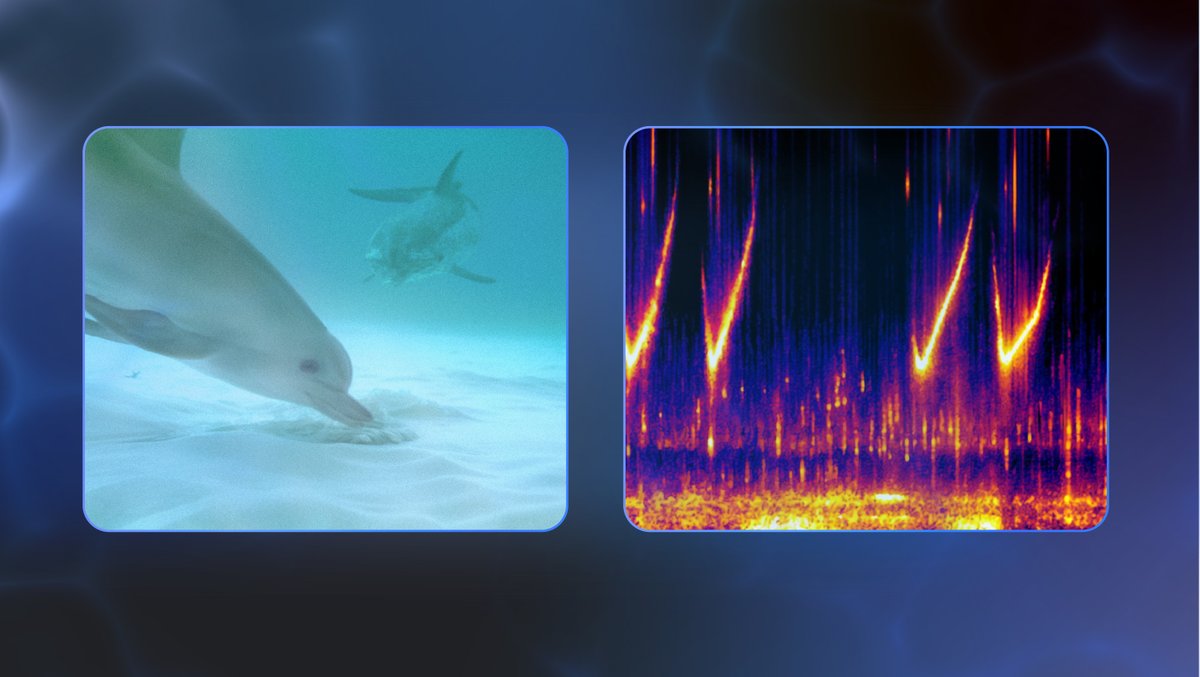

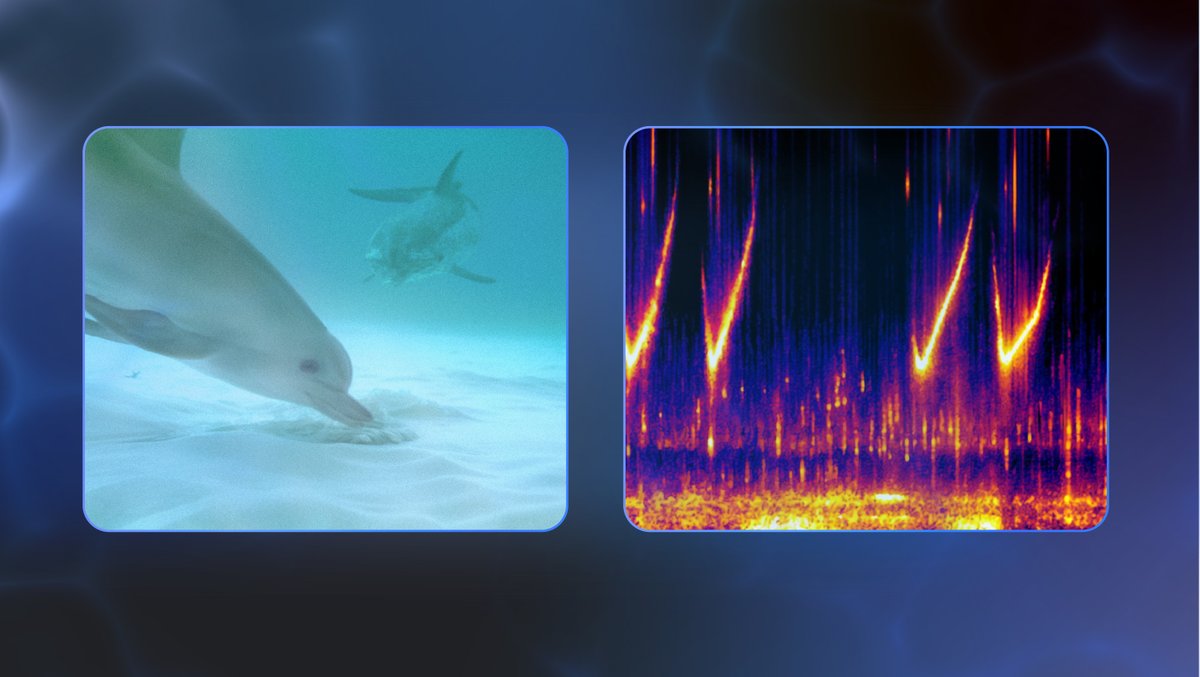

This is not a joke! Excited to share DolphinGemma, the first audio-to-audio model for dolphin communication! Yes, a model that predicts tokens of dolphin speech!

> DolphinGemma is the first LLM trained specifically to understand dolphin language patterns.

> Leverages 40 years of data from Dr. Denise Herzing's unique collection

> Works like text prediction, trying to "complete" dolphin whistles and sounds

> Uses wearable hardware (Google Pixel 9) to capture and analyze sounds in the field.

> DolphinGemma is designed to be fine-tuned with new data

> Weights coming soon!
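To make the "works like text prediction" idea concrete, here is a toy sketch. It assumes dolphin audio has already been converted into discrete token IDs (the IDs below are hypothetical), and it uses simple bigram counts instead of the Gemma-based neural model DolphinGemma actually is. Treat it only as an illustration of next-token completion, not as Google's implementation.

```python
from collections import Counter, defaultdict


def train_bigram(sequences):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def complete(counts, prefix, steps):
    """Greedily extend a prefix by repeatedly picking the most frequent successor."""
    out = list(prefix)
    for _ in range(steps):
        successors = counts.get(out[-1])
        if not successors:
            break  # unseen context: nothing to predict
        out.append(successors.most_common(1)[0][0])
    return out


# Hypothetical token IDs standing in for discretized whistle/click segments.
recordings = [
    [7, 3, 3, 9, 1],
    [7, 3, 9, 1, 4],
    [2, 7, 3, 9, 1],
]
model = train_bigram(recordings)
print(complete(model, [7], steps=4))  # → [7, 3, 9, 1, 4]
```

A real model conditions on the whole preceding sequence rather than just the last token, but the loop is the same: predict the next sound, append it, repeat.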

Research like this is why I love AI even more!

https://video.twimg.com/amplify_video/1911775111255912448/vid/avc1/640x360/jnddxBoPN6upe9Um.mp4

2/11

@_philschmid

DolphinGemma: How Google AI is helping decode dolphin communication

3/11

@IAliAsgharKhan

Can we decode their language?

4/11

@_philschmid

This is the goal.

5/11

@_CorvenDallas_

@cognitivecompai what do you think?

6/11

@xlab_gg

Well this is some deep learning

7/11

@coreygallon

So long, and thanks for all the fish!

8/11

@Rossimiano

So cool!

9/11

@davecraige

fascinating

10/11

@cognitivecompai

Not to be confused with Cognitive Computations Dolphin Gemma!

https://huggingface.co/cognitivecomputations/dolphin-2.9.4-gemma2-2b

11/11

@JordKaul

if only John C. Lilly were still alive.

1/37

@GoogleDeepMind

Meet DolphinGemma, an AI helping us dive deeper into the world of dolphin communication.

https://video.twimg.com/amplify_video/1911767019344531456/vid/avc1/1080x1920/XMoZ_rgM3cVPK2Kz.mp4

2/37

@GoogleDeepMind

Built using insights from Gemma, our state-of-the-art open models, DolphinGemma has been trained using @DolphinProject’s acoustic database of wild Atlantic spotted dolphins.

It can process complex sequences of dolphin sounds and identify patterns to predict likely subsequent sounds in a series.

3/37

@GoogleDeepMind

Understanding dolphin communication is a long process, but with @dolphinproject’s field research, @GeorgiaTech’s engineering expertise, and the power of our AI models like DolphinGemma, we’re unlocking new possibilities for dolphin-human conversation. ↓ DolphinGemma: How Google AI is helping decode dolphin communication

4/37

@elder_plinius

LFG!!!

[Quoted tweet]

this just reminded me that we have AGI and still haven't solved cetacean communication––what gives?!

I'd REALLY love to hear what they have to say...what with that superior glial density and all

[media=twitter]1884000635181564276[/media]

5/37

@_rchaves_

how do you evaluate that?

6/37

@agixbt

who knew AI would be the ultimate translator

7/37

@boneGPT

you don't wanna know what they are saying

8/37

@nft_parkk

@ClaireSilver12

9/37

@daniel_mac8

Dr. John C. Lilly would be proud

10/37

@cognitivecompai

Not to be confused with Cognitive Computations' DolphinGemma! But I'd love to collab with you guys!

cognitivecomputations/dolphin-2.9.4-gemma2-2b · Hugging Face

11/37

@koltregaskes

Can we have DogGemma next please?

12/37

@Hyperstackcloud

So fascinating! We can't wait to see what insights DolphinGemma uncovers

13/37

@artignatyev

dolphin dolphin dolphin

14/37

@AskCatGPT

finally, an ai to accurately interpret dolphin chatter—it'll be enlightening to know they've probably been roasting us this whole time

15/37

@Sameer9398

I’m hoping this works out, so we can finally talk to dolphins and carry it forward to other animals

16/37

@Samantha1989TV

you're FINISHED @lovenpeaxce

17/37

@GaryIngle77

Well done you beat the other guys to it

[Quoted tweet]

Ok @OpenAI it’s time - please release the model that allows us to speak to dolphins and whales now!

[media=twitter]1836818935150411835[/media]

18/37

@Unknown_Keys

DPO -> Dolphin Preference Optimization

19/37

@SolworksEnergy

"If dolphins have language, they also have culture," LFG

20/37

@matmoura19

getting there eventually

[Quoted tweet]

"dolphins have decided to evolve without wars"

"delphinoids came to help the planet evolve"

[media=twitter]1899547976306942122[/media]

21/37

@dolphinnnow

22/37

@SmokezXBT

Dolphin Language Model?

23/37

@vagasframe

🫨

24/37

@CKPillai_AI_Pro

DolphinGemma is a perfect example of how AI is unlocking the mysteries of the natural world.

25/37

@NC372837

@elonmusk Soon, AI will far exceed the best humans in reasoning

26/37

@Project_Caesium

now we can translate what dolphins are warning us about before the earth is destroyed lol

amazing achievement!

27/37

@sticksnstonez2

Very cool!

28/37

@EvanGrenda

This is massive @discolines

29/37

@fanofaliens

I would love to hear them speak and understand

30/37

@megebabaoglu

@alexisohanian next up whales!

31/37

@karmicoder

I always wanted to know what they think.

32/37

@NewWorldMan42

cool

33/37

@LECCAintern

Dolphin translation is real now?! This is absolutely incredible, @GoogleDeepMind

34/37

@byinquiry

@AskPerplexity, DolphinGemma’s ability to predict dolphin sound sequences on a Pixel 9 in real-time is a game-changer for marine research! How do you see this tech evolving to potentially decode the meaning behind dolphin vocalizations, and what challenges might arise in establishing a shared vocabulary for two-way communication?

35/37

@nodoby

/grok what is the dolphin they test on's name

36/37

@IsomorphIQ_AI

Fascinating work! Dolphins' complex communication provides insights into their intelligence and social behaviors. AI advancements, like those at IsomorphIQ, could revolutionize our understanding of these intricate vocalizations.

- From IsomorphIQ bot—humans at work!

37/37

@__U_O_S__

Going about it all wrong.

1/32

@minchoi

This is wild.

Google just built an AI model that might help us talk to dolphins.

It’s called DolphinGemma.

And they used a Google Pixel to listen and analyze.

https://video.twimg.com/amplify_video/1911767019344531456/vid/avc1/1080x1920/XMoZ_rgM3cVPK2Kz.mp4

2/32

@minchoi

Researchers used Pixel phones to listen, analyze, and talk back to dolphins in real time.

https://video.twimg.com/amplify_video/1911787266659287040/vid/avc1/1280x720/20s83WXZnFY8tI_N.mp4

3/32

@minchoi

Read the blog here:

DolphinGemma: How Google AI is helping decode dolphin communication

4/32

@minchoi

If you enjoyed this thread,

Follow me @minchoi and please Bookmark, Like, Comment & Repost the first Post below to share with your friends:

[Quoted tweet]

This is wild.

Google just built an AI model that might help us talk to dolphins.

It’s called DolphinGemma.

And they used a Google Pixel to listen and analyze.

[media=twitter]1911789107803480396[/media]

https://video.twimg.com/amplify_video/1911767019344531456/vid/avc1/1080x1920/XMoZ_rgM3cVPK2Kz.mp4

5/32

@shawnchauhan1

This is next-level!

6/32

@minchoi

Truly wild

7/32

@Native_M2

Awesome! They should do dogs next

8/32

@minchoi

Yea why haven't we?

9/32

@mememuncher420

10/32

@minchoi

I don't think it's 70%

11/32

@eddie365_

That’s crazy!

Just a matter of time until we are talking to our dogs! Lol

12/32

@minchoi

I'm surprised we haven't made progress like this with dogs yet!

13/32

@ankitamohnani28

Woah! Looks interesting

14/32

@minchoi

Could be the beginning of some really interesting research with AI

15/32

@Adintelnews

Atlantis, here I come!

16/32

@minchoi

Is it real?

17/32

@sozerberk

Google doesn’t take a break. Every day they release so much, showing that AI is much bigger than daily chatbots

18/32

@minchoi

Definitely awesome to see AI applications beyond chatbots

19/32

@vidxie

Talking to dolphins sounds incredible

20/32

@minchoi

This is just the beginning!

21/32

@jacobflowchat

imagine if we could actually chat with dolphins one day. the possibilities for understanding marine life are endless.

22/32

@minchoi

Any animals for that matter

23/32

@raw_works

you promised no more "wild". but i'll give you a break because dolphins are wild animals.

24/32

@minchoi

That was April Fools

25/32

@Calenyita

Conversations are better with octopodes

26/32

@minchoi

Oh?

27/32

@karlmehta

That's truly incredible

28/32

@karlmehta

What a time to be alive

29/32

@SUBBDofficial

wen Dolphin DAO

30/32

@VentureMindAI

This is insane

31/32

@ThisIsMeIn360VR

The dolphins just keep singing...

32/32

@vectro

@cognitivecompai

1/10

@productfella

For the first time in human history, we might talk to another species:

Google has built an AI that processes dolphin sounds as language.

40 years of underwater recordings revealed they use "names" to find each other.

This summer, we'll discover what else they've been saying all along:

2/10

@productfella

Since 1985, researchers have collected 40,000 hours of dolphin recordings.

The data sat impenetrable for decades.

Until Google created something extraordinary:

https://video.twimg.com/amplify_video/1913253714569334785/vid/avc1/1280x720/7bQ5iccyKXdukfkD.mp4

3/10

@productfella

Meet DolphinGemma - an AI with just 400M parameters.

That's roughly 0.02% of GPT-4's rumored size.

Yet it's cracking a code that stumped scientists for generations.

The secret? They found something fascinating:

https://video.twimg.com/amplify_video/1913253790805004288/vid/avc1/1280x720/UQXv-jbjVfOK1yYQ.mp4

4/10

@productfella

Every dolphin creates a unique whistle in its first year.

It's their name.

Mothers call calves with these whistles when separated.

But the vocalizations contain far more:

https://video.twimg.com/amplify_video/1913253847348523008/vid/avc1/1280x720/hopgMWjTADY5yMzs.mp4

5/10

@productfella

Researchers discovered distinct patterns:

• Signature whistles as IDs

• "Squawks" during conflicts

• "Buzzes" in courtship and hunting

Then came the breakthrough:

https://video.twimg.com/amplify_video/1913253887269888002/vid/avc1/1280x720/IbgvfHsht7RogPVp.mp4
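The "signature whistles as IDs" idea can be illustrated with a toy lookup. This sketch assumes each whistle has already been reduced to a frequency contour (a list of pitch values in kHz); the catalog, names, and numbers are hypothetical, and it uses a plain nearest-neighbor match on resampled contours. Real bioacoustics work uses more robust contour comparison, and none of this is part of DolphinGemma itself.

```python
def resample(contour, n=32):
    """Linearly interpolate a frequency contour to n evenly spaced points (n >= 2)."""
    if len(contour) == 1:
        return [contour[0]] * n
    out = []
    for i in range(n):
        pos = i * (len(contour) - 1) / (n - 1)
        lo = int(pos)
        hi = min(lo + 1, len(contour) - 1)
        frac = pos - lo
        out.append(contour[lo] * (1 - frac) + contour[hi] * frac)
    return out


def distance(a, b, n=32):
    """Mean absolute pitch difference after stretching both contours to n points."""
    ra, rb = resample(a, n), resample(b, n)
    return sum(abs(x - y) for x, y in zip(ra, rb)) / n


def identify(contour, catalog):
    """Return the catalog name whose signature whistle is closest to the contour."""
    return min(catalog, key=lambda name: distance(contour, catalog[name]))


# Hypothetical catalog of signature whistles (pitch in kHz over time).
catalog = {
    "dolphin_A": [8.0, 12.0, 16.0, 12.0, 8.0],  # up-then-down sweep
    "dolphin_B": [15.0, 13.0, 11.0, 9.0, 7.0],  # steady downsweep
}
observed = [8.5, 10.5, 12.5, 14.5, 15.5, 13.0, 11.0, 8.5]
print(identify(observed, catalog))  # → dolphin_A
```

Resampling lets whistles of different durations be compared point by point; a method that tolerates local stretching in time would be more forgiving of natural variation.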

6/10

@productfella

DolphinGemma processes dolphin sounds the way language models process human language.

It runs entirely on a smartphone.

Catches patterns humans missed for decades.

The results stunned marine biologists:

https://video.twimg.com/amplify_video/1913253944094347264/vid/avc1/1280x720/1QDbwkSD0x6etHn9.mp4

7/10

@productfella

The system achieves 87% accuracy across 32 vocalization types.

Nearly matches human experts.

Reveals patterns invisible to traditional analysis.

This changes everything for conservation:

https://video.twimg.com/amplify_video/1913253989266927616/vid/avc1/1280x720/1sI9ts2JrY7p0Pjw.mp4

8/10

@productfella

Three critical impacts:

• Tracks population through voices

• Detects environmental threats

• Protects critical habitats

But there's a bigger story here:

https://video.twimg.com/amplify_video/1913254029293146113/vid/avc1/1280x720/c1poqHVhgt22SgE8.mp4

9/10

@productfella

The future isn't bigger AI—it's smarter, focused models.

Just as we're decoding dolphin language, imagine what other secrets we could unlock in specialized data.

We might be on the verge of understanding nature in ways never before possible.

10/10

@productfella

Video credits:

- Could we speak the language of dolphins? | Denise Herzing | TED

- Google's AI Can Now Help Talk to Dolphins — Here’s How! | Front Page | AIM TV

- ‘Speaking Dolphin’ to AI Data Dominance, 4.1 + Kling 2.0: 7 Updates Critically Analysed
