AI Regulation

There have been multiple calls to regulate AI. It is too early to do so.

ELAD GIL

SEP 17, 2023

[While I was finalizing this post, Bill Gurley gave this great talk on incumbent capture and regulation].

ChatGPT has only been live for ~9 months and GPT-4 for 6 or so months. Yet there have already been strong calls to regulate AI due to misinformation, bias, existential risk, the threat of biological or chemical attack, potential AI-fueled cyberattacks, etc., without any tangible example of any of these things actually having happened with any real frequency compared to existing versions without AI. Many, like chemical attacks, are truly theoretical, without an ordered logic chain of how they would happen or any explanation as to why existing safeguards or laws are insufficient.

Sometimes, regulation of an industry can be positive for consumers or businesses. For example, FDA regulation of food can protect people from disease outbreaks, chemical manipulation of food, or other issues.

In most cases, regulation can be very negative for an industry and its evolution. It may force an industry to be government-centric versus user-centric, prevent competition and lock in incumbents, move production or economic benefits overseas, or distort the economics and capabilities of an entire industry.

Given the many positive potentials of AI, and the many negatives of regulation, calls for AI regulation are likely premature, and in some cases clearly self-serving for the parties asking for it (it is not surprising that the main incumbents say regulation is good for AI, as it will lock in their incumbency). Some notable counterexamples exist where we should likely regulate things related to AI, but these are few and far between (e.g. export of advanced chip technology to foreign adversaries).

In general, we should not push to regulate most aspects of AI now. We should instead let the technology advance and mature further for positive uses before revisiting this area.

First, what is at stake? Global health & educational equity + other areas

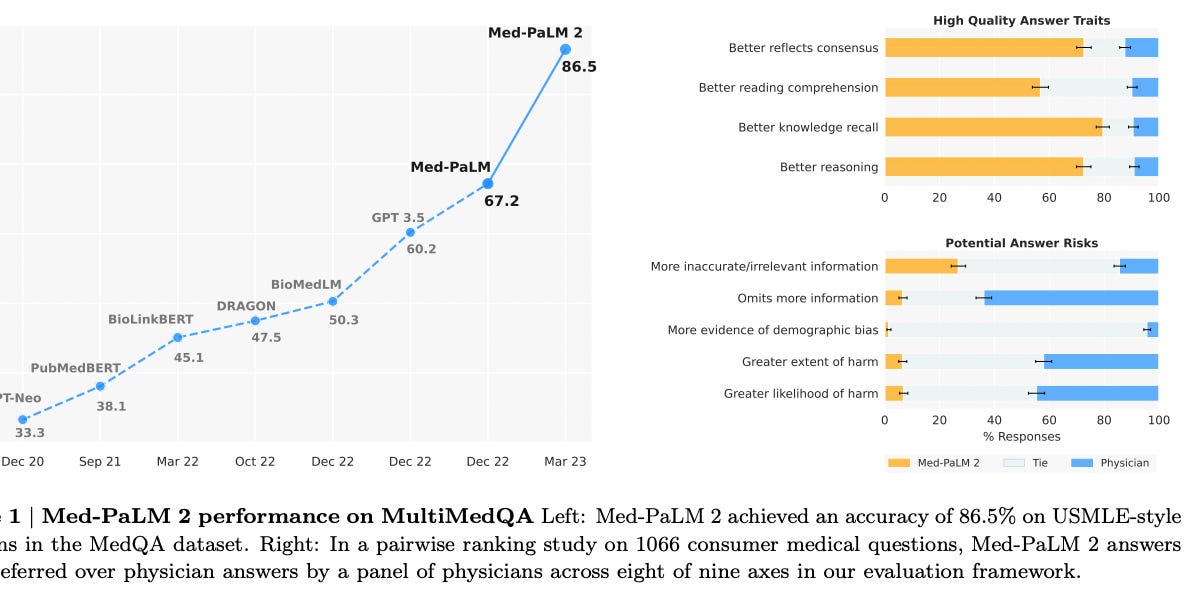

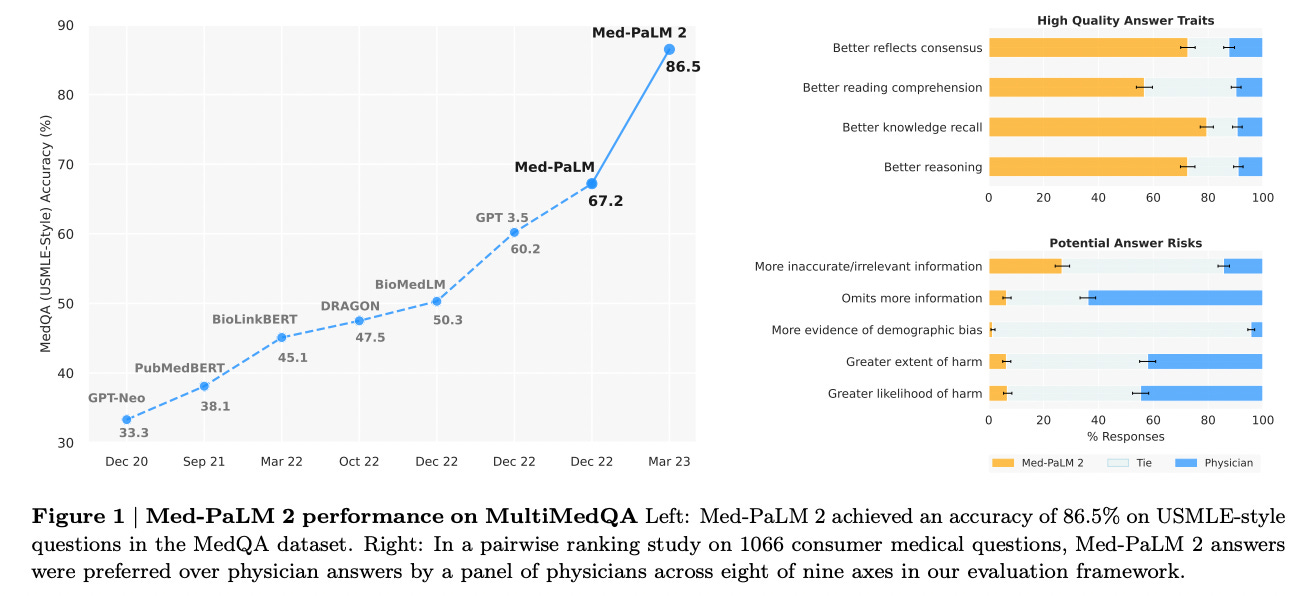

Too little of the dialogue today focuses on the positive potential of AI (I cover the risks of AI in another post). AI is an incredibly powerful tool to impact global equity for some of the biggest issues facing humanity. On the healthcare front, models such as Med-PaLM 2 from Google now outperform medical experts to the point where training the model using physician experts may make the model worse.

Imagine having a medical expert available via any phone or device anywhere in the world - to which you can upload images and symptoms, follow up, and get ongoing diagnosis and care. This technology is available today and just needs to be bundled and delivered in a thoughtful way.

Similarly, AI can provide significant educational resources globally today. Even something as simple as auto-translating and dubbing all the educational text, video or voice content in the world is a straightforward task given today's language and voice models. Adding a chat-like interface that can personalize and pace the learning of the student on the other end is coming shortly based on existing technologies. Significantly increasing global equity of education is a goal we can achieve if we allow ourselves to do so.

Additionally, AI can play a role in economic productivity, national defense (covered well here), and many other areas.

AI is likely the single strongest motive force towards global equity in health and education in decades. Regulation is likely to slow down and confound progress towards these and other goals and use cases.

Regulation tends to prevent competition - it favors incumbents and kills startups

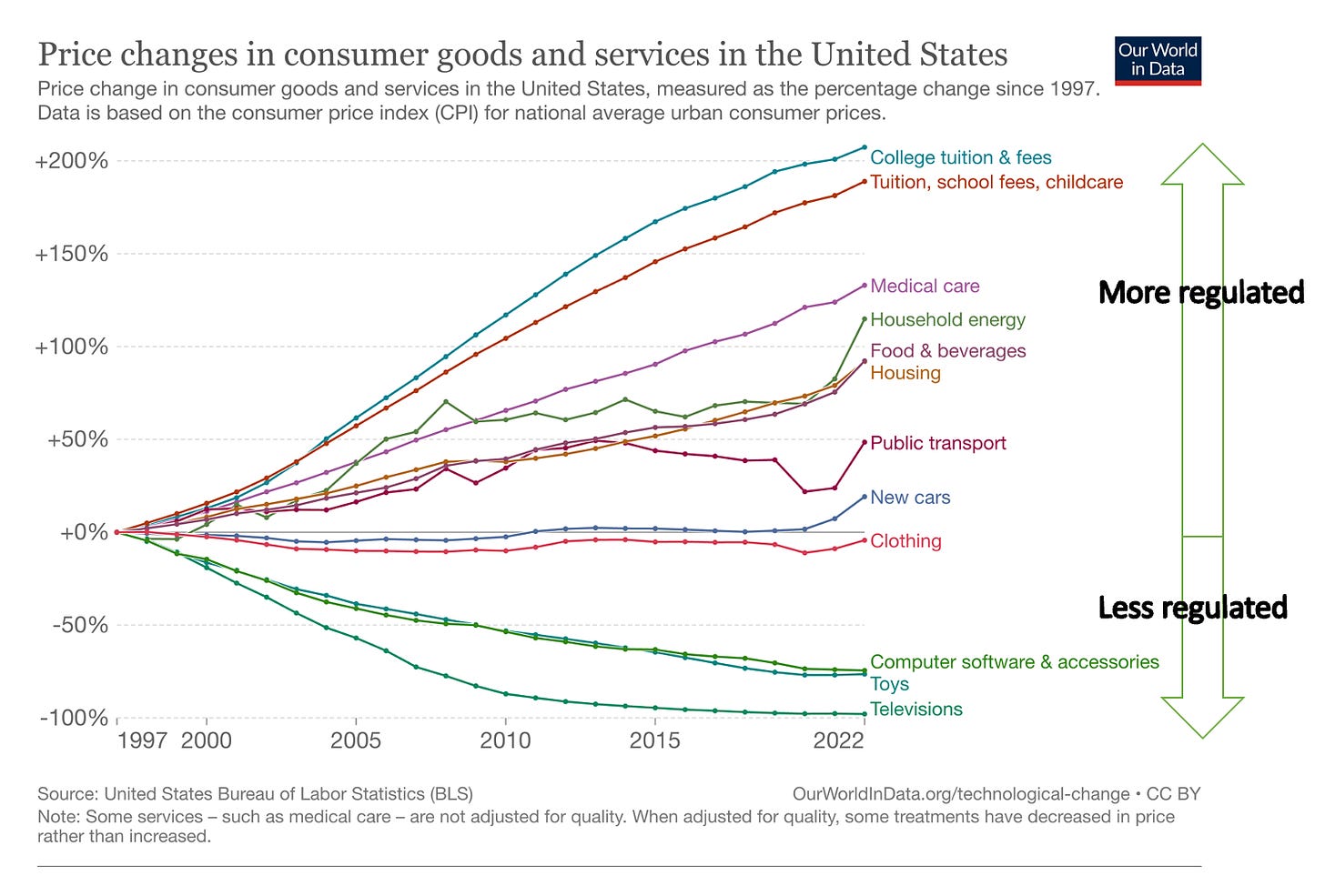

In most industries, regulation prevents competition. This famous chart of prices over time reflects how highly regulated industries (healthcare, education, energy) have their costs driven up over time, while less regulated industries (clothing, software, toys) drop costs dramatically over time. (Please note I do not believe these are inflation adjusted, so 60-70% may be “break even” pricing once adjusted for inflation.)

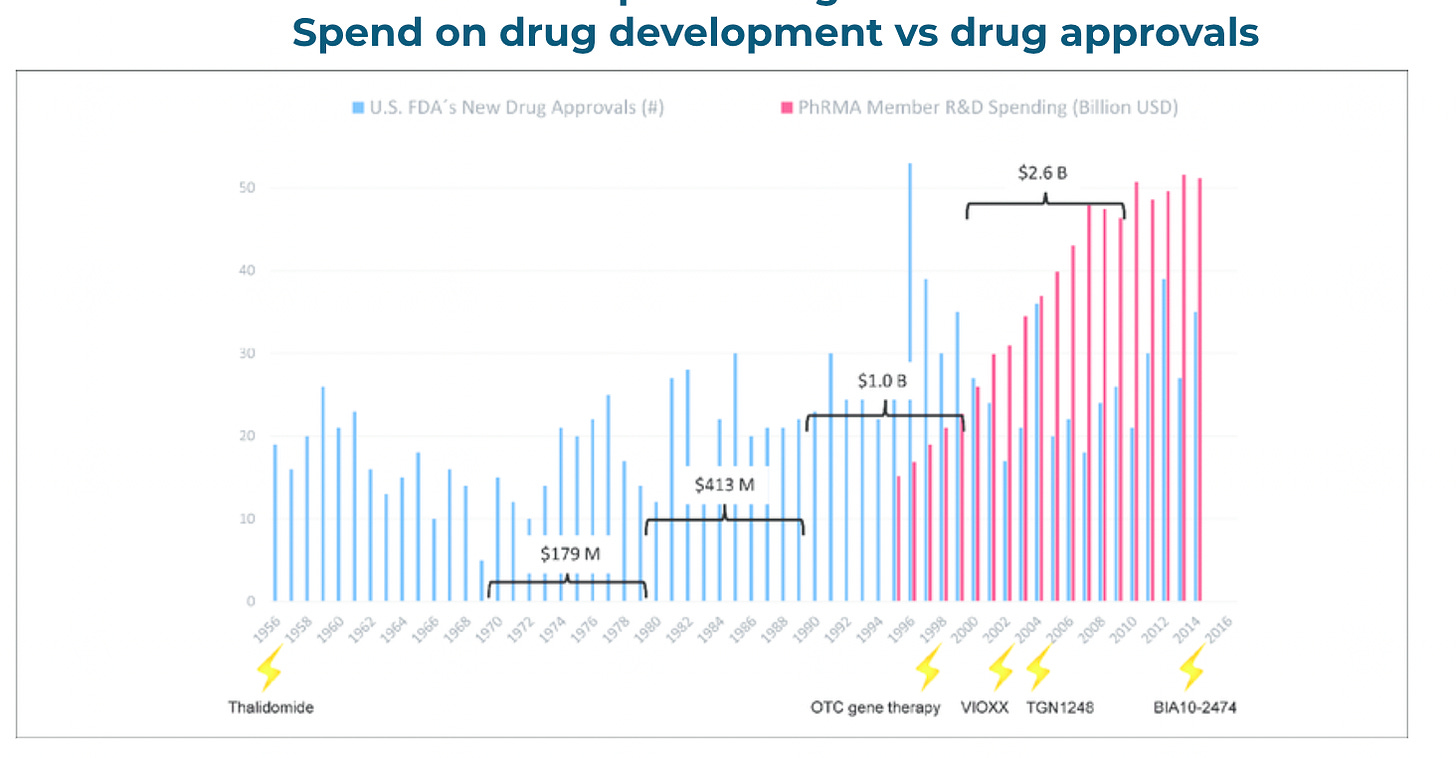

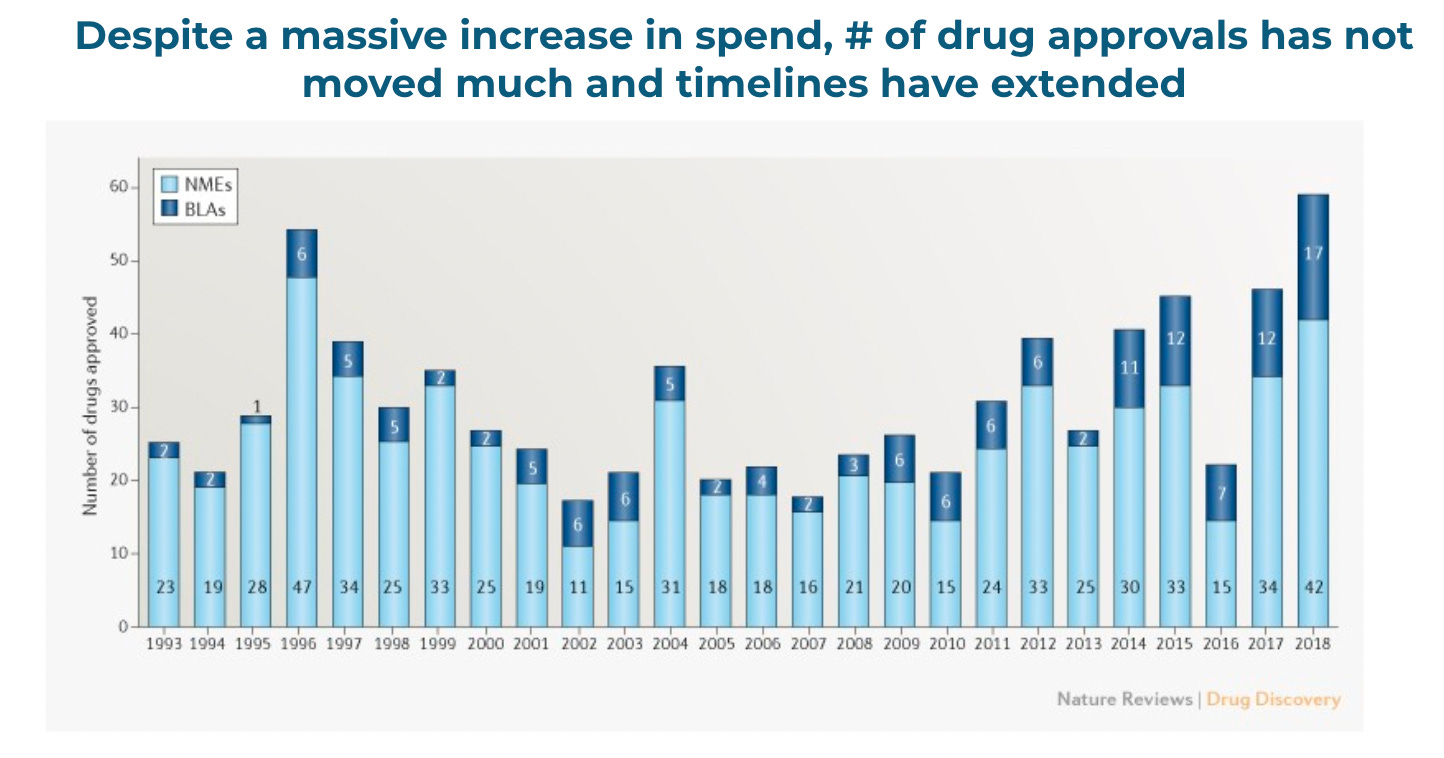

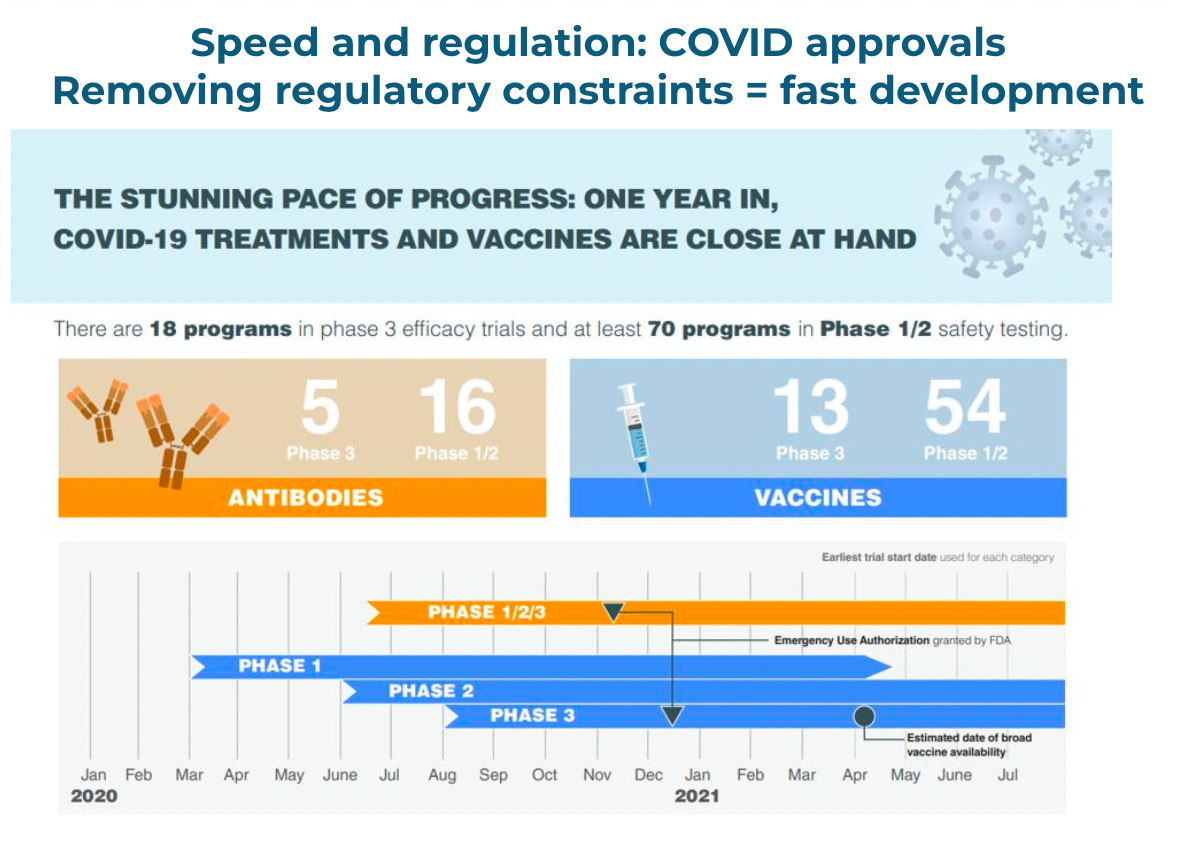

Regulation favors incumbents in two ways. First, it increases the cost of entering a market, in some cases dramatically. The high cost of clinical trials and the extra hurdles put in place to launch a drug are good examples of this. A must-watch video is this one with Paul Janssen, one of the giants of pharma, in which he states that the vast majority of drug development budgets are wasted on tests imposed by regulators which “has little to do with actual research or actual development”. This is a partial explanation for why (outside of Moderna, an accident of COVID) no new biopharma company with a $40B+ market cap has been launched in almost 40 years (despite healthcare being 20% of US GDP).

Secondly, regulation favors incumbents via something known as “regulatory capture”. In regulatory capture, the regulators become beholden to a specific industry lobby or group - for example by receiving jobs in the industry after working as a regulator, or via specific forms of lobbying. Regulators then have a strong incentive to “play nice” with the incumbents and to bias regulations their way, in order to get favors later in life.

Regulation often blocks industry progress: Nuclear as an example.

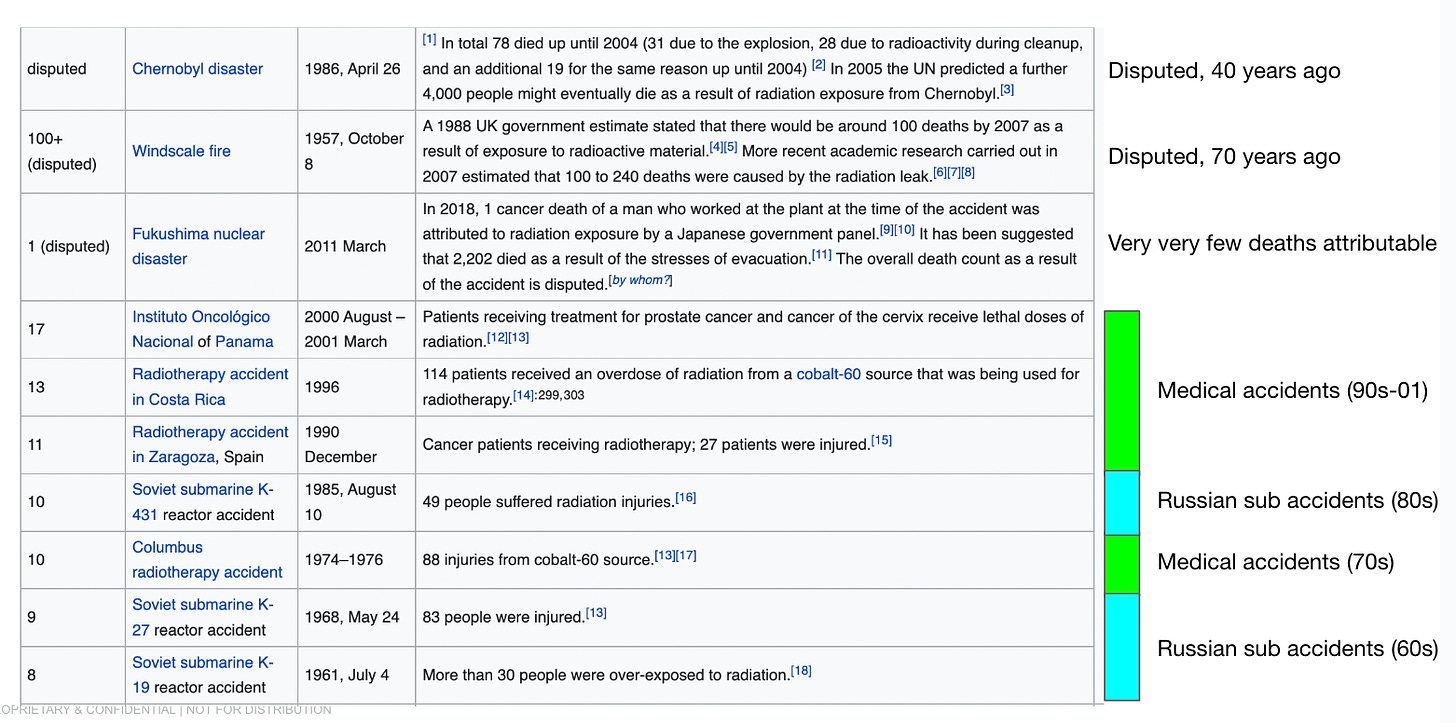

Many of the calls to regulate AI suggest some analog to nuclear. For example, a registry of anyone building models and then a new body to oversee them. Nuclear is a good example of how in some cases regulators will block the entire industry they are supposed to watch over. For example, the Nuclear Regulatory Commission (NRC), established in 1975, has not approved a new nuclear reactor design for decades (indeed, not since the 1970s). This has prevented use of nuclear in the USA, despite actual data showing high safety profiles. France meanwhile has continued to have 70% of its power generated via nuclear, Japan is heading back to 30% with plans to grow to 50%, and the US has declined to 18%. This is despite nuclear being both extremely safe (if one looks at data) and clean from a carbon perspective.

Indeed, most deaths from nuclear in the modern era have been from medical accidents or Russian sub accidents, something the actual regulator of nuclear power in the USA seems oddly unaware of.

Nuclear (and therefore Western energy policy) is ultimately a victim of bad PR, a strong eco-big oil lobby against it, and of regulatory constraints.

Regulation can drive an industry overseas

I am a short-term AI optimist, and a long-term AI doomer. In other words, I think the short-term benefits of AI are immense, and most arguments made on tech-level risks of AI are overstated. For anyone who has read history, humans are perfectly capable of creating their own disasters. However, I do think in the long run (i.e. decades) AI is an existential risk for people. That said, at this point regulating AI will only send it overseas and federate and fragment the cutting edge of it outside US jurisdiction. Just as crypto is increasingly offshoring, and even regulatory-compliant companies like Coinbase are considering leaving the US due to government crackdowns on crypto, regulating AI now in the USA will just send it overseas. The genie is out of the bottle and this technology is clearly incredibly powerful and important. Over-regulation in the USA has the potential to drive it elsewhere. This would be bad not only for US interests, but also potentially for the moral and ethical frameworks governing how the most cutting-edge versions of AI may get adopted. The European Union may show us an early form of this.