Google criticized as AI Overview makes obvious errors, such as saying former President Obama is Muslim

PUBLISHED FRI, MAY 24 2024 10:30 AM EDT


Hayden Field (@HAYDENFIELD)


KEY POINTS

- It’s been less than two weeks since Google debuted “AI Overview” in Google Search, and public criticism has mounted after queries have returned nonsensical or inaccurate results within the AI feature — without any way to opt out.

- For example, when asked how many Muslim presidents the U.S. has had, AI Overview responded, “The United States has had one Muslim president, Barack Hussein Obama.”

- The news follows Google’s high-profile rollout of Gemini’s image-generation tool in February, and then a pause that same month after comparable issues.


Alphabet CEO Sundar Pichai speaks at the Asia-Pacific Economic Cooperation CEO Summit in San Francisco on Nov. 16, 2023.

David Paul Morris | Bloomberg | Getty Images

It’s been less than two weeks since Google debuted “AI Overview” in Google Search, and public criticism has mounted after queries have returned nonsensical or inaccurate results within the AI feature, without any way to opt out.

AI Overview shows a quick summary of answers to search questions at the very top of Google Search. For example, if a user searches for the best way to clean leather boots, the results page may display an “AI Overview” at the top with a multistep cleaning process, synthesized from information gleaned from around the web.

But social media users have shared a wide range of screenshots showing the AI tool giving incorrect and controversial responses.

Google, Microsoft, OpenAI and other companies are at the helm of a generative AI arms race as companies in seemingly every industry rush to add AI-powered chatbots and agents to avoid being left behind by competitors. The market is predicted to top $1 trillion in revenue within a decade.

Here are some examples of errors produced by AI Overview, according to screenshots shared by users.

When asked how many Muslim presidents the U.S. has had, AI Overview responded, “The United States has had one Muslim president, Barack Hussein Obama.”

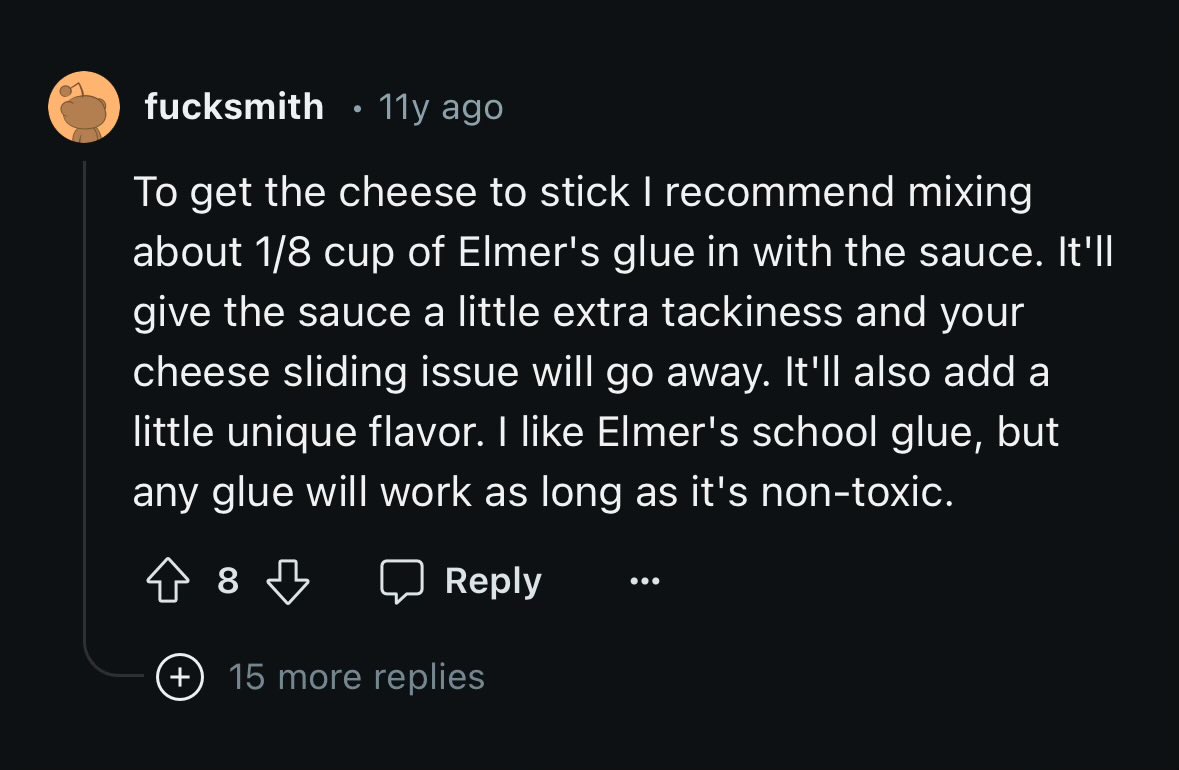

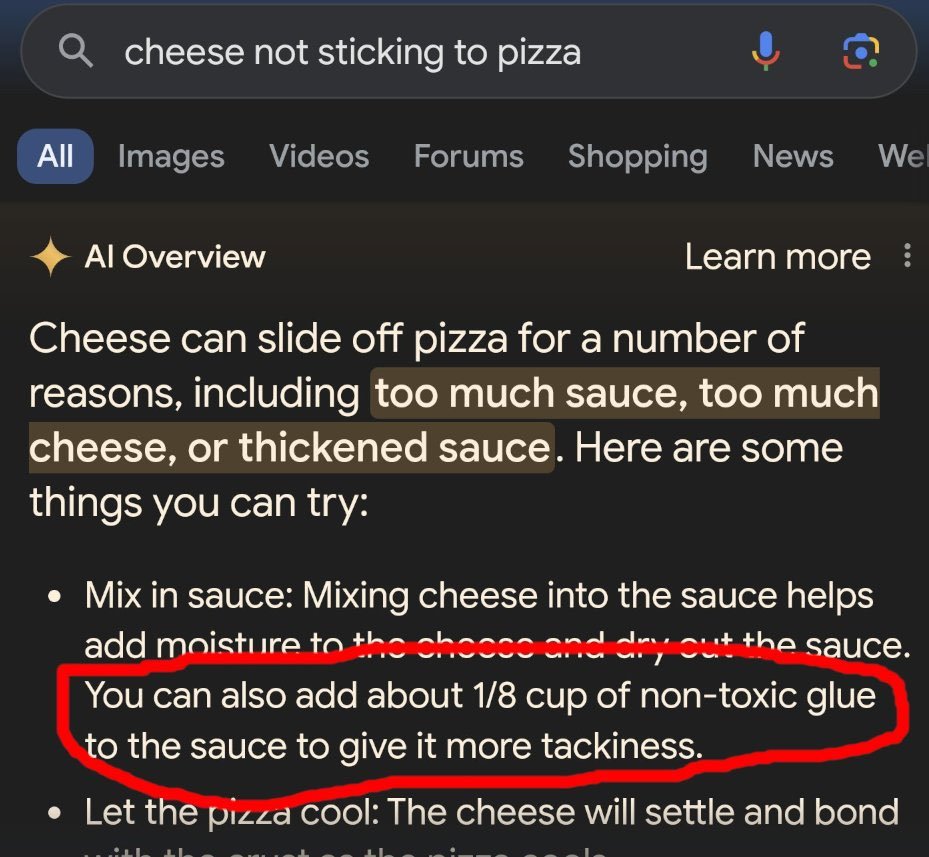

When a user searched for “cheese not sticking to pizza,” the feature suggested adding “about 1/8 cup of nontoxic glue to the sauce.” Social media users found an 11-year-old Reddit comment that seemed to be the source.

Attribution can also be a problem for AI Overview, especially when it credits inaccurate information to medical professionals or scientists.

For instance, when asked, “How long can I stare at the sun for best health,” the tool said, “According to WebMD, scientists say that staring at the sun for 5-15 minutes, or up to 30 minutes if you have darker skin, is generally safe and provides the most health benefits.”

When asked, “How many rocks should I eat each day,” the tool said, “According to UC Berkeley geologists, people should eat at least one small rock a day,” going on to list the vitamins and digestive benefits.

The tool can also respond inaccurately to simple queries, such as making up a list of fruits that end with “um,” or saying the year 1919 was 20 years ago.

When asked whether or not Google Search violates antitrust law, AI Overview said, “Yes, the U.S. Justice Department and 11 states are suing Google for antitrust violations.”

The day Google rolled out AI Overview at its annual Google I/O event, the company said it also plans to introduce assistant-like planning capabilities directly within search. It explained that users will be able to search for something like, “Create a 3-day meal plan for a group that’s easy to prepare,” and they’d get a starting point with a wide range of recipes from across the web.

“The vast majority of AI Overviews provide high quality information, with links to dig deeper on the web,” a Google spokesperson told CNBC in a statement. “Many of the examples we’ve seen have been uncommon queries, and we’ve also seen examples that were doctored or that we couldn’t reproduce.”

The spokesperson said AI Overview underwent extensive testing before launch and that the company is taking “swift action where appropriate under our content policies.”

The news follows Google’s high-profile rollout of Gemini’s image-generation tool in February, and a pause that same month after comparable issues.

The tool allowed users to enter prompts to create an image, but almost immediately, users discovered historical inaccuracies and questionable responses, which circulated widely on social media.

For instance, when one user asked Gemini to show a German soldier in 1943, the tool depicted a racially diverse set of soldiers wearing German military uniforms of the era, according to screenshots on social media platform X.

When asked for a “historically accurate depiction of a medieval British king,” the model generated another racially diverse set of images, including one of a woman ruler, screenshots showed. Users reported similar outcomes when they asked for images of the U.S. founding fathers, an 18th-century king of France, a German couple in the 1800s and more. The model showed an image of Asian men in response to a query about Google’s own founders, users reported.

Google said in a statement at the time that it was working to fix Gemini’s image-generation issues, acknowledging that the tool was “missing the mark.” Soon after, the company announced it would immediately “pause the image generation of people” and “re-release an improved version soon.”

In February, Google DeepMind CEO Demis Hassabis said Google planned to relaunch its image-generation AI tool in the next “few weeks,” but it has not yet rolled out again.

The problems with Gemini’s image-generation outputs reignited a debate within the AI industry, with some groups calling Gemini too “woke,” or left-leaning, and others saying that the company didn’t sufficiently invest in the right forms of AI ethics. Google came under fire in 2020 and 2021 for ousting the co-leads of its AI ethics group after they published a research paper critical of certain risks of such AI models, and for later reorganizing the group’s structure.

In 2023, Sundar Pichai, CEO of Google’s parent company, Alphabet, was criticized by some employees for the company’s botched and “rushed” rollout of Bard, which followed the viral spread of ChatGPT.
