Man, I would love to animate my own art. I'm a musician too, so I could do my own visualizer.

Makes me wanna learn C# and Unity. I could do my own game + cutscenes.

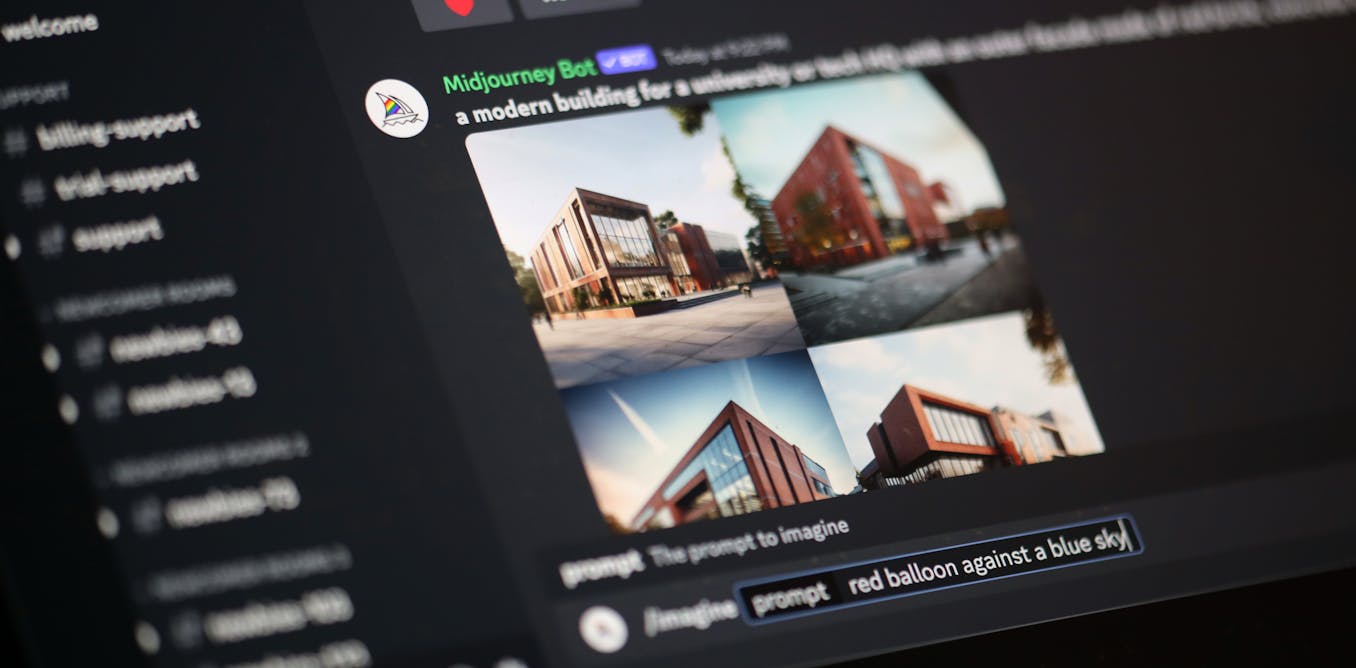

AI can definitely be an equalizer for the working man... at least until it takes over.

stability-ai/stable-video-diffusion on Replicate (runnable via API)

SVD is a research-only image-to-video model.

Idk why that's what people are pushing for. Use that shyt to handle all the boring, tedious aspects of adult life instead, so everyone actually has enough free time to enjoy creative hobbies.