Opinion | The Internet Is About to Get Much Worse

Why the development of artificial intelligence might result in greater pollution of our digital public spaces.

OPINION

GUEST ESSAY

The Internet Is About to Get Much Worse

Sept. 23, 2023By Julia Angwin

Ms. Angwin is a contributing Opinion writer and an investigative journalist.

Greg Marston, a British voice actor, recently came across “Connor” online — an A.I.-generated clone of his voice, trained on a recording Mr. Marston had made in 2003. It was his voice uttering things he had never said.

Back then, he had recorded a session for IBM and later signed a release form allowing the recording to be used in many ways. Of course, at that time, Mr. Marston couldn’t envision that IBM would use anything more than the exact utterances he had recorded. Thanks to artificial intelligence, however, IBM was able to sell Mr. Marston’s decades-old sample to websites that are using it to build a synthetic voice that could say anything. Mr. Marston recently discovered his voice emanating from the Wimbledon website during the tennis tournament. (IBM said it is aware of Mr. Marston’s concern and is discussing it with him directly.)

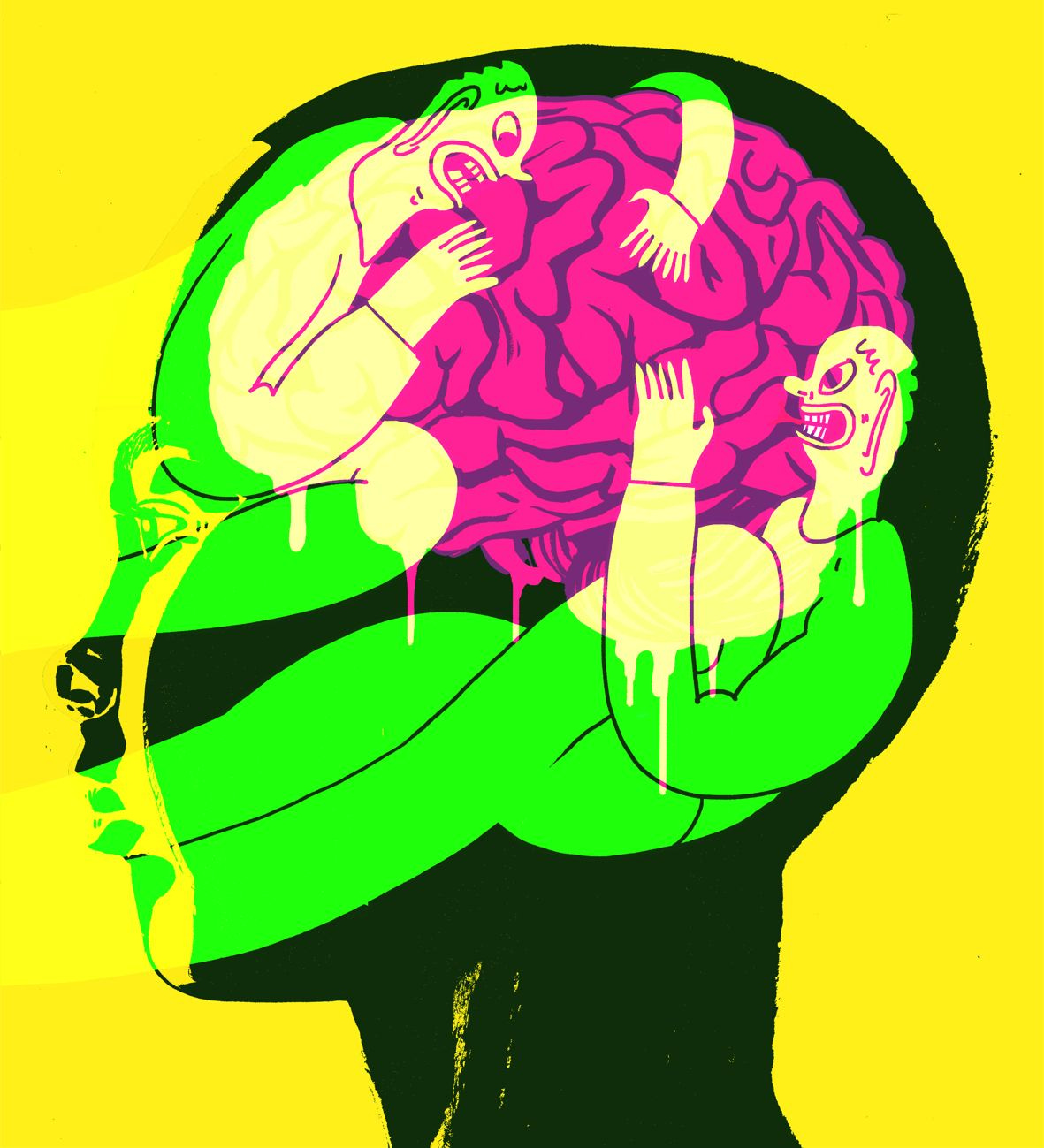

His plight illustrates why many of our economy’s best-known creators are up in arms. We are in a time of eroding trust, as people realize that their contributions to a public space may be taken, monetized and potentially used to compete with them. When that erosion is complete, I worry that our digital public spaces might become even more polluted with untrustworthy content.

Already, artists are deleting their work from X, formerly known as Twitter, after the company said it would be using data from its platform to train its A.I. Hollywood writers and actors are on strike partly because they want to ensure their work is not fed into A.I. systems that companies could try to replace them with. News outlets including The New York Times and CNN have added files to their website to help prevent A.I. chatbots from scraping their content.

Authors are suing A.I. outfits, alleging that their books are included in the sites’ training data. OpenAI has argued, in a separate proceeding, that the use of copyrighted data for training A.I. systems is legal under the “fair use” provision of copyright law.

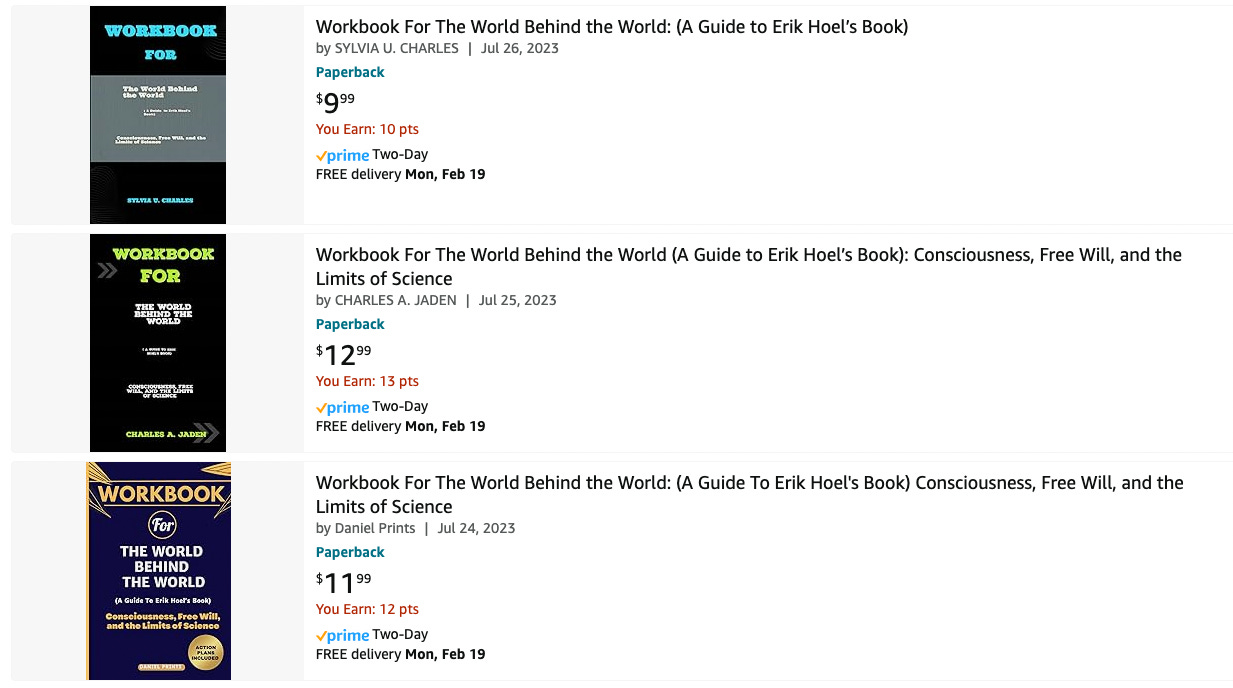

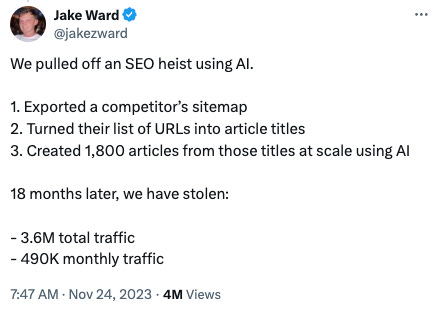

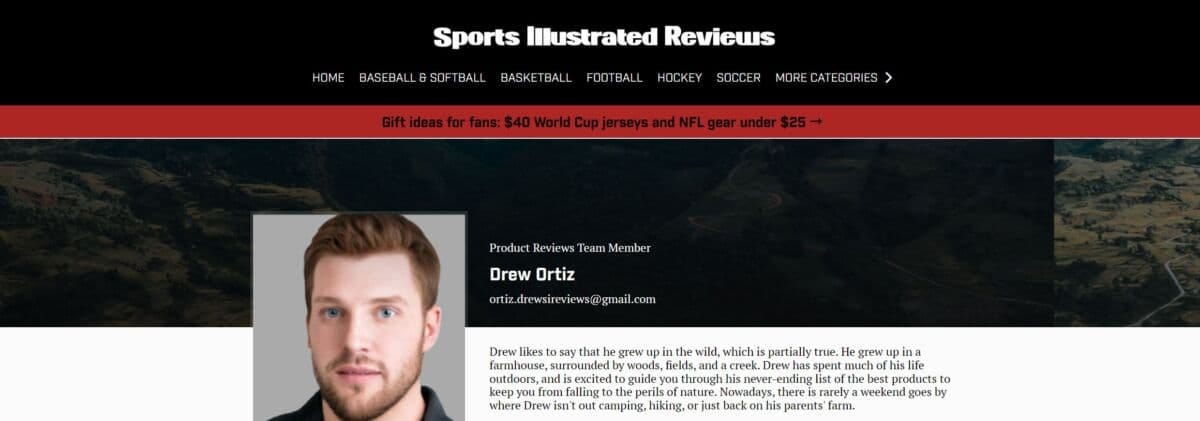

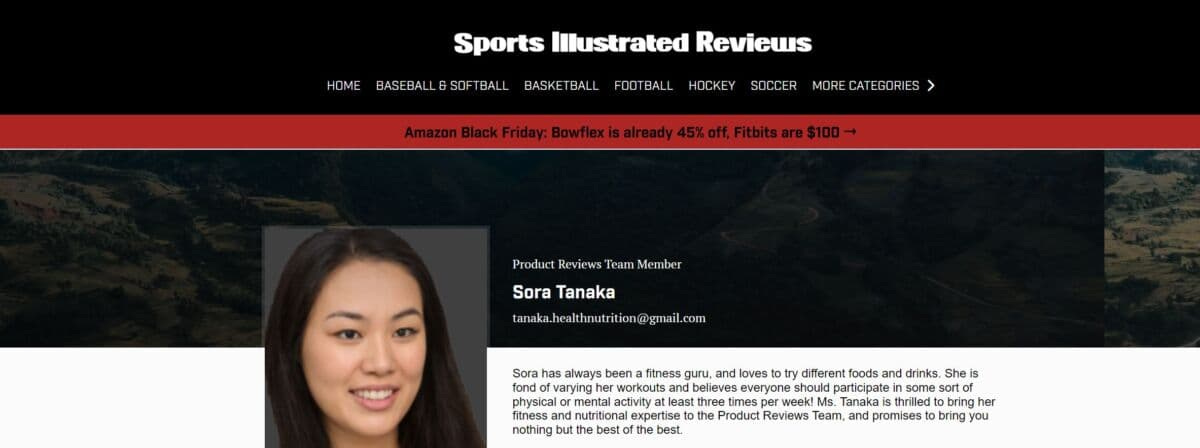

While creators of quality content are contesting how their work is being used, dubious A.I.-generated content is stampeding into the public sphere. NewsGuard has identified 475 A.I.-generated news and information websites in 14 languages. A.I.-generated music is flooding streaming websites and generating A.I. royalties for scammers. A.I.-generated books — including a mushroom foraging guide that could lead to mistakes in identifying highly poisonous fungi — are so prevalent on Amazon that the company is asking authors who self-publish on its Kindle platform to also declare if they are using A.I.

This is a classic case of tragedy of the commons, where a common resource is harmed by the profit interests of individuals. The traditional example of this is a public field that cattle can graze upon. Without any limits, individual cattle owners have an incentive to overgraze the land, destroying its value to everybody.

We have commons on the internet, too. Despite all of its toxic corners, it is still full of vibrant portions that serve the public good — places like Wikipedia and Reddit forums, where volunteers often share knowledge in good faith and work hard to keep bad actors at bay.

But these commons are now being overgrazed by rapacious tech companies that seek to feed all of the human wisdom, expertise, humor, anecdotes and advice they find in these places into their for-profit A.I. systems.

Consider, for instance, that the volunteers who build and maintain Wikipedia trusted that their work would be used according to the terms of their site, which requires attribution. Now some Wikipedians are apparently debating whether they have any legal recourse against chatbots that use their content without citing the source.

Regulators are trying to figure it out, too. The European Union is considering the first set of global restrictions on A.I., which would require some transparency from generative A.I. systems, including providing summaries of copyrighted data that was used to train its systems.

That would be a good step forward, since many A.I. systems do not fully disclose the data they were trained on. It has primarily been journalists who have dug up the murky data that lies beneath the glossy surface of the chatbots. A recent investigation detailed in The Atlantic revealed that more than 170,000 pirated books are included in the training data for Meta’s A.I. chatbot, Llama. A Washington Post investigation revealed that OpenAI’s ChatGPT relies on data scraped without consent from hundreds of thousands of websites.

But transparency is hardly enough to rebalance the power between those whose data is being exploited and the companies poised to cash in on the exploitation.

Tim Friedlander, founder and president of the National Association of Voice Actors, has called for A.I. companies to adopt ethical standards. He says that actors need three Cs: consent, control and compensation.

In fact, all of us need the three Cs. Whether we are professional actors or we just post pictures on social media, everyone should have the right to meaningful consent on whether we want our online lives fed into the giant A.I. machines.

And consent should not mean having to locate a bunch of hard-to-find opt-out buttons to click — which is where the industry is heading.

Compensation is harder to figure out, especially since most of the A.I. bots are primarily free services at the moment. But make no mistake, the A.I. industry is planning to and will make money from these systems, and when it does, there will be a reckoning with those whose works fueled the profits.

For people like Mr. Marston, their livelihoods are at stake. He estimates that his A.I. clone has already lost him jobs and will cut into his future earnings significantly. He is working with a lawyer to seek compensation. “I never agreed or consented to having my voice cloned, to see/hear it released to the public, thus competing against myself,” he told me.

But even those of us who don’t have a job directly threatened by A.I. think of writing that novel or composing a song or recording a TikTok or making a joke on social media. If we don’t have any protections from the A.I. data overgrazers, I worry that it will feel pointless to even try to create in public. And that would be a real tragedy.