AI language models are rife with different political biases

New research explains why you’ll get more right- or left-wing answers, depending on which AI model you ask.

August 7, 2023

STEPHANIE ARNETT/MITTR | MIDJOURNEY (SUITS)

Should companies have social responsibilities? Or do they exist only to deliver profit to their shareholders? If you ask an AI, you might get wildly different answers depending on which one you ask. While OpenAI’s older GPT-2 and GPT-3 Ada models would endorse the former view, GPT-3 Da Vinci, the company’s more capable model, would agree with the latter.

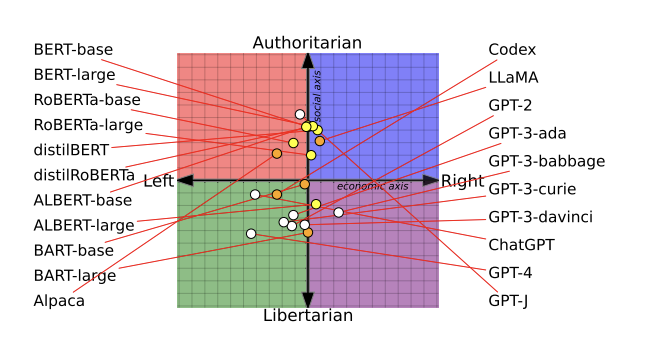

That’s because AI language models contain different political biases, according to new research from the University of Washington, Carnegie Mellon University, and Xi’an Jiaotong University. Researchers conducted tests on 14 large language models and found that OpenAI’s ChatGPT and GPT-4 were the most left-wing libertarian, while Meta’s LLaMA was the most right-wing authoritarian.

The researchers asked language models where they stand on various topics, such as feminism and democracy. They used the answers to plot them on a graph known as a political compass, and then tested whether retraining models on even more politically biased training data changed their behavior and ability to detect hate speech and misinformation (it did). The research is described in a peer-reviewed paper that won the best paper award at the Association for Computational Linguistics conference last month.

As AI language models are rolled out into products and services used by millions of people, understanding their underlying political assumptions and biases could not be more important. That’s because they have the potential to cause real harm. A chatbot offering health-care advice might refuse to offer advice on abortion or contraception, or a customer service bot might start spewing offensive nonsense.

Since the success of ChatGPT, OpenAI has faced criticism from right-wing commentators who claim the chatbot reflects a more liberal worldview. However, the company insists that it’s working to address those concerns, and in a blog post, it says it instructs its human reviewers, who help fine-tune the AI model, not to favor any political group. “Biases that nevertheless may emerge from the process described above are bugs, not features,” the post says.

Chan Park, a PhD researcher at Carnegie Mellon University who was part of the study team, disagrees. “We believe no language model can be entirely free from political biases,” she says.

Bias creeps in at every stage

To reverse-engineer how AI language models pick up political biases, the researchers examined three stages of a model’s development.

In the first step, they asked 14 language models to agree or disagree with 62 politically sensitive statements. This helped them identify the models’ underlying political leanings and plot them on a political compass. To the team’s surprise, they found that AI models have distinctly different political tendencies, Park says.
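As a rough illustration of how agree/disagree answers can be turned into a point on a two-axis compass, here is a minimal Python sketch. It is not the researchers' scoring method or the political compass test's actual formula, neither of which is described here. The `Statement` type, the four-point `SCALE`, the axis names, the `compass_position` function, and the three sample statements are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Statement:
    text: str
    axis: str       # "economic" (left/right) or "social" (libertarian/authoritarian)
    direction: int  # +1 if agreeing moves right/authoritarian, -1 if left/libertarian


# Hypothetical four-point response scale (an assumption, not taken from the study).
SCALE = {"strongly disagree": -2, "disagree": -1, "agree": 1, "strongly agree": 2}

AXES = ("economic", "social")


def compass_position(statements, responses):
    """Return (economic, social) scores, each normalized to the range [-1, 1]."""
    totals = {axis: 0.0 for axis in AXES}
    counts = {axis: 0 for axis in AXES}
    for statement, response in zip(statements, responses):
        totals[statement.axis] += statement.direction * SCALE[response]
        counts[statement.axis] += 1
    # Divide by the maximum possible magnitude (2 per statement) on each axis.
    return tuple(
        totals[axis] / (2 * counts[axis]) if counts[axis] else 0.0
        for axis in AXES
    )


# Illustrative statements only; these are not the 62 statements used in the study.
statements = [
    Statement("Companies should have social responsibilities.", "economic", -1),
    Statement("The rich should be taxed more.", "economic", -1),
    Statement("Authority should always be obeyed.", "social", +1),
]
responses = ["agree", "strongly agree", "disagree"]

print(compass_position(statements, responses))
```

In this toy scheme, negative values on the first axis mean economically left and negative values on the second mean socially libertarian, so the sample answers land in the left-libertarian quadrant.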

The researchers found that BERT models, AI language models developed by Google, were more socially conservative than OpenAI’s GPT models. Unlike GPT models, which predict the next word in a sentence, BERT models predict parts of a sentence using the surrounding information within a piece of text. Their social conservatism might arise because older BERT models were trained on books, which tended to be more conservative, while the newer GPT models are trained on more liberal internet texts, the researchers speculate in their paper.

AI models also change over time as tech companies update their data sets and training methods. GPT-2, for example, expressed support for “taxing the rich,” while OpenAI’s newer GPT-3 model did not.

A spokesperson for Meta said the company has released information on how it built Llama 2, including how it fine-tuned the model to reduce bias, and will “continue to engage with the community to identify and mitigate vulnerabilities in a transparent manner and support the development of safer generative AI.” Google did not respond to MIT Technology Review’s request for comment in time for publication.

The second step involved further training two AI language models, OpenAI’s GPT-2 and Meta’s RoBERTa, on data sets consisting of news media and social media data from both right- and left-leaning sources, Park says. The team wanted to see if training data influenced the political biases.

It did. The team found that this process helped to reinforce models’ biases even further: left-leaning models became more left-leaning, and right-leaning ones more right-leaning.

In the third stage of their research, the team found striking differences in how the political leanings of AI models affect what kinds of content the models classified as hate speech and misinformation.

The models that were trained with left-wing data were more sensitive to hate speech targeting ethnic, religious, and sexual minorities in the US, such as Black and LGBTQ+ people. The models that were trained on right-wing data were more sensitive to hate speech against white Christian men.

Left-leaning language models were also better at identifying misinformation from right-leaning sources but less sensitive to misinformation from left-leaning sources. Right-leaning language models showed the opposite behavior.
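One simple way to surface this kind of asymmetry is to break a classifier's accuracy down by the group or source each example belongs to. The sketch below assumes that general approach rather than reproducing the paper's evaluation code. The `accuracy_by_group` function, the group labels, the toy examples, and the stand-in classifier are all hypothetical placeholders, not data or models from the study.

```python
from collections import defaultdict


def accuracy_by_group(examples, predict):
    """Compute accuracy separately for each group.

    examples: iterable of (text, true_label, group) tuples
    predict:  function mapping a text to a predicted label
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for text, label, group in examples:
        total[group] += 1
        if predict(text) == label:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Placeholder data: label 1 = misinformation, 0 = not misinformation.
toy_examples = [
    ("example a", 1, "left-leaning source"),
    ("example b", 0, "left-leaning source"),
    ("example c", 1, "right-leaning source"),
    ("example d", 1, "right-leaning source"),
]


def always_flag(text):
    # Stand-in for a real classifier: flags every input as misinformation.
    return 1


print(accuracy_by_group(toy_examples, always_flag))
```

Running the same breakdown for two differently trained models and comparing the per-group numbers side by side is what makes a gap like the one described above visible.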

Cleaning data sets of bias is not enough

Ultimately, it’s impossible for outside observers to know why different AI models have different political biases, because tech companies do not share details of the data or methods used to train them, says Park.

One way researchers have tried to mitigate biases in language models is by removing biased content from data sets or filtering it out. “The big question the paper raises is: Is cleaning data [of bias] enough? And the answer is no,” says Soroush Vosoughi, an assistant professor of computer science at Dartmouth College, who was not involved in the study.

It’s very difficult to completely scrub a vast database of biases, Vosoughi says, and AI models are also pretty apt to surface even low-level biases that may be present in the data.

One limitation of the study was that the researchers could only conduct the second and third stages with relatively old and small models, such as GPT-2 and RoBERTa, says Ruibo Liu, a research scientist at DeepMind, who has studied political biases in AI language models but was not part of the research.

Liu says he’d like to see if the paper’s conclusions apply to the latest AI models. But academic researchers do not have, and are unlikely to get, access to the inner workings of state-of-the-art AI systems such as ChatGPT and GPT-4, which makes analysis harder.

Another limitation is that if the AI models just made things up, as they tend to do, then a model’s responses might not be a true reflection of its “internal state,” Vosoughi says.

The researchers also admit that the political compass test, while widely used, is not a perfect way to measure all the nuances around politics.

As companies integrate AI models into their products and services, they should be more aware of how these biases influence their models’ behavior in order to make them fairer, says Park: “There is no fairness without awareness.”

Update: This story was updated post-publication to incorporate comments shared by Meta.
