1/37

@ArtificialAnlys

xAI gave us early access to Grok 4 - and the results are in. Grok 4 is now the leading AI model.

We have run our full suite of benchmarks and Grok 4 achieves an Artificial Analysis Intelligence Index of 73, ahead of OpenAI o3 at 70, Google Gemini 2.5 Pro at 70, Anthropic Claude 4 Opus at 64 and DeepSeek R1 0528 at 68. Full results breakdown below.

This is the first time that @elonmusk's @xai has held the lead at the AI frontier. Grok 3 scored competitively with the latest models from OpenAI, Anthropic and Google - but Grok 4 is the first time that our Intelligence Index has shown xAI in first place.

We tested Grok 4 via the xAI API. The version of Grok 4 deployed for use on X/Twitter may be different to the model available via API. Consumer application versions of LLMs typically have instructions and logic around the models that can change style and behavior.

Grok 4 is a reasoning model, meaning it ‘thinks’ before answering. The xAI API does not share reasoning tokens generated by the model.

Grok 4’s pricing is equivalent to Grok 3 at $3/$15 per 1M input/output tokens ($0.75 per 1M cached input tokens). The per-token pricing is identical to Claude 4 Sonnet, but more expensive than Gemini 2.5 Pro ($1.25/$10, for <200K input tokens) and o3 ($2/$8, after recent price decrease). We expect Grok 4 to be available via the xAI API, via the Grok chatbot on X, and potentially via Microsoft Azure AI Foundry (Grok 3 and Grok 3 mini are currently available on Azure).
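As a rough illustration of the per-token prices quoted above, here is a minimal sketch of the cost of a single request. The prices are the ones stated in the post; the workload (10,000 input tokens, 2,000 output tokens) and the `request_cost` helper are hypothetical and only serve the example.

```python
# Prices in USD per 1M tokens, as quoted in the post above.
PRICES = {
    "Grok 4": {"input": 3.00, "cached_input": 0.75, "output": 15.00},
    "Gemini 2.5 Pro": {"input": 1.25, "output": 10.00},  # rate for <200K input tokens
    "o3": {"input": 2.00, "output": 8.00},
}


def request_cost(model, input_tokens, output_tokens, cached_input_tokens=0):
    """Return the USD cost of one request (hypothetical helper).

    Cached input tokens use the cached rate when the post lists one,
    otherwise they fall back to the normal input rate.
    """
    p = PRICES[model]
    cached_rate = p.get("cached_input", p["input"])
    uncached_tokens = input_tokens - cached_input_tokens
    total = (
        uncached_tokens * p["input"]
        + cached_input_tokens * cached_rate
        + output_tokens * p["output"]
    )
    return total / 1_000_000


# Hypothetical workload: 10,000 input tokens and 2,000 output tokens.
for model in PRICES:
    print(f"{model}: ${request_cost(model, 10_000, 2_000):.4f}")
```

Under these assumed token counts the request costs about $0.06 on Grok 4, versus roughly $0.0325 on Gemini 2.5 Pro and $0.036 on o3. Real costs depend on how many output tokens each model actually generates.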

Key benchmarking results:

➤ Grok 4 leads in not only our Artificial Analysis Intelligence Index but also our Coding Index (LiveCodeBench & SciCode) and Math Index (AIME24 & MATH-500)

➤ All-time high score in GPQA Diamond of 88%, representing a leap from Gemini 2.5 Pro’s previous record of 84%

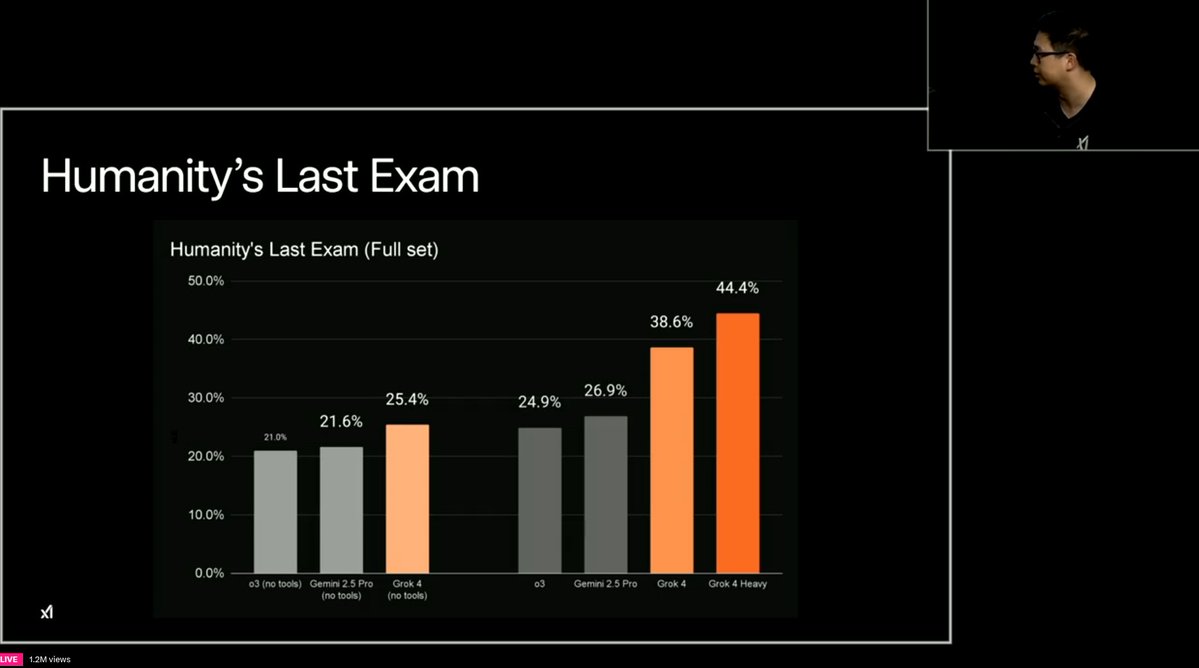

➤ All-time high score in Humanity’s Last Exam of 24%, beating Gemini 2.5 Pro’s previous all-time high score of 21%. Note that our benchmark suite uses the original HLE dataset (Jan '25) and runs the text-only subset with no tools

➤ Joint highest score for MMLU-Pro and AIME 2024 of 87% and 94% respectively

➤ Speed: 75 output tokens/s, slower than o3 (188 tokens/s), Gemini 2.5 Pro (142 tokens/s), Claude 4 Sonnet Thinking (85 tokens/s) but faster than Claude 4 Opus Thinking (66 tokens/s)

Other key information:

➤ 256k token context window. This is below Gemini 2.5 Pro’s context window of 1 million tokens, but ahead of Claude 4 Sonnet and Claude 4 Opus (200k tokens), o3 (200k tokens) and R1 0528 (128k tokens)

➤ Supports text and image input

➤ Supports function calling and structured outputs

See below for further analysis

2/37

@ArtificialAnlys

Grok 4 scores higher in Artificial Analysis Intelligence Index than any other model. Its pricing is higher than OpenAI’s o3, Google’s Gemini 2.5 Pro and Anthropic’s Claude 4 Sonnet - but lower than Anthropic’s Claude 4 Opus and OpenAI’s o3-pro.

3/37

@ArtificialAnlys

Full set of intelligence benchmarks that we have run independently on xAI’s Grok 4 API:

4/37

@ArtificialAnlys

Grok 4 recorded slightly higher output token usage compared to peer models when running the Artificial Analysis Intelligence Index. This translates to higher cost relative to its per token price.

5/37

@ArtificialAnlys

xAI’s API is serving Grok 4 at 75 tokens/s. This is slower than o3 (188 tokens/s) but faster than Claude 4 Opus Thinking (66 tokens/s).
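To put the output speeds in perspective, here is a small sketch converting tokens/s into the time needed to stream a response. The speeds are the figures reported in this thread; the 1,500-token response length and the `generation_seconds` helper are assumptions for illustration, and the estimate ignores time to first token.

```python
# Output speeds in tokens per second, as reported in the thread.
SPEEDS = {
    "o3": 188,
    "Gemini 2.5 Pro": 142,
    "Claude 4 Sonnet Thinking": 85,
    "Grok 4": 75,
    "Claude 4 Opus Thinking": 66,
}


def generation_seconds(model, output_tokens):
    """Seconds to generate output_tokens at the reported speed (hypothetical helper)."""
    return output_tokens / SPEEDS[model]


# Hypothetical response length of 1,500 output tokens.
for model in SPEEDS:
    print(f"{model}: {generation_seconds(model, 1_500):.1f} s")
```

At these rates, a 1,500-token response takes about 20 seconds on Grok 4, about 8 seconds on o3 and about 23 seconds on Claude 4 Opus Thinking.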

6/37

@ArtificialAnlys

Grok 4 is now live on Artificial Analysis:

http://artificialanalysis.ai

7/37

@Evantaged

Is this Grok 4 Heavy or base??

8/37

@ArtificialAnlys

Base, with no tools. We have not tested Grok 4 Heavy yet.

9/37

@Elkins

10/37

@AuroraHoX

11/37

@tetsuoai

Honestly it's so good!

12/37

@rozer100x

interesting

13/37

@ianksnow1

It’s truly a rockstar. Light years better than the previous model and based on my early interactions perhaps leapfrogged every other frontier model.

14/37

@VibeEdgeAI

It's impressive to see Grok 4 leading the pack with a 73 on the Artificial Analysis Intelligence Index, especially with its strong performance in coding and math benchmarks.

However, the recent hate speech controversy is a sobering reminder of the ethical challenges AI development faces.

Balancing innovation with responsibility will be key as xAI moves forward - hopefully, these issues can be addressed to harness Grok 4's potential for positive impact.

15/37

@XaldwinSealand

Currently Testing Grok 4...

16/37

@MollySOShea

17/37

@0xSweep

might just be the greatest AI innovation of all time

18/37

@HaleemAhmed333

Wow

19/37

@Jeremyybtc

good to have you @grok 4

20/37

@Kriscrichton

21/37

@ArthurMacwaters

Reality is the best eval

This is where Grok4 impresses me most

22/37

@Coupon_Printer

I was waiting for your results @ArtificialAnlys!!! Thank you for this

23/37

@TheDevonWayne

so you didn't even get to try grok heavy?

24/37

@_LouiePeters

This is a great and rapid overview!

I think your intelligence benchmarks should start including and upweighting agent and tool use scores though; in the real world we want the models to perform as well as possible, which means giving them every tool possible - no need to handicap them by limiting access.

25/37

@shiels_ai

So this isn’t the tool calling model? Wow!

26/37

@joAnneSongs72

YEAH

27/37

@riddle_sphere

New kid on the block just dethroned the veterans. Silicon Valley’s watching.

28/37

@blockxs

Grok 4: AI champ confirmed

29/37

@SastriVimla

Great

30/37

@neoonai

NeoON > Grok. Right?

31/37

@EricaDXtra

So cool, so good!

32/37

@evahugsyou

Grok 4 just came out on top, and it’s not even a competition anymore. Elon’s team is absolutely killing it!

33/37

@garricn

Just wait till it starts conducting science experiments

34/37

@mukulneetika

Wow!

35/37

@RationalEtienne

Grok 4 is HOLY.

Humanity has created AI that it will merge with.

All Praise Elon for his act of CREATION!

36/37

@MixxsyLabs

I personally found it better for coding uses than Claude. I'm no expert, but when I needed a tool, that's the one I started going back to after using a few for code snippets and assistance.

37/37

@codewithimanshu

Interesting, perhaps true intelligence lies beyond benchmarks.
