Inside ICE’s Database for Finding ‘Derogatory’ Online Speech

Thumbs up, or thumbs down: that's the option presented to analysts when the tool Giant Oak Search Technology surfaces content from social media and other sources for ICE to scrutinize.

JOSEPH COX·OCT 24, 2023 AT 9:00 AM

Immigration and Customs Enforcement (ICE) has used a system called Giant Oak Search Technology (GOST) to help the agency scrutinize social media posts, determine if they are “derogatory” to the U.S., and then use that information as part of immigration enforcement, according to a new cache of documents reviewed by 404 Media.

The documents peel back the curtain on a powerful system, both in a technological and a policy sense—how information is processed and used to decide who is allowed to remain in the country and who is not.

“The government should not be using algorithms to scrutinize our social media posts and decide which of us is ‘risky.’ And agencies certainly shouldn't be buying this kind of black box technology in secret without any accountability. DHS needs to explain to the public how its systems determine whether someone is a ‘risk’ or not, and what happens to the people whose online posts are flagged by its algorithms,” Patrick Toomey, Deputy Director of the ACLU's National Security Project, told 404 Media in an email. The documents come from a Freedom of Information Act (FOIA) lawsuit brought by the ACLU and the ACLU of Northern California. Toomey then shared the documents with 404 Media.

Do you know anything else about GOST or similar systems? I would love to hear from you. Using a non-work device, you can message me securely on Signal at +44 20 8133 5190. Otherwise, send me an email at joseph@404media.co.

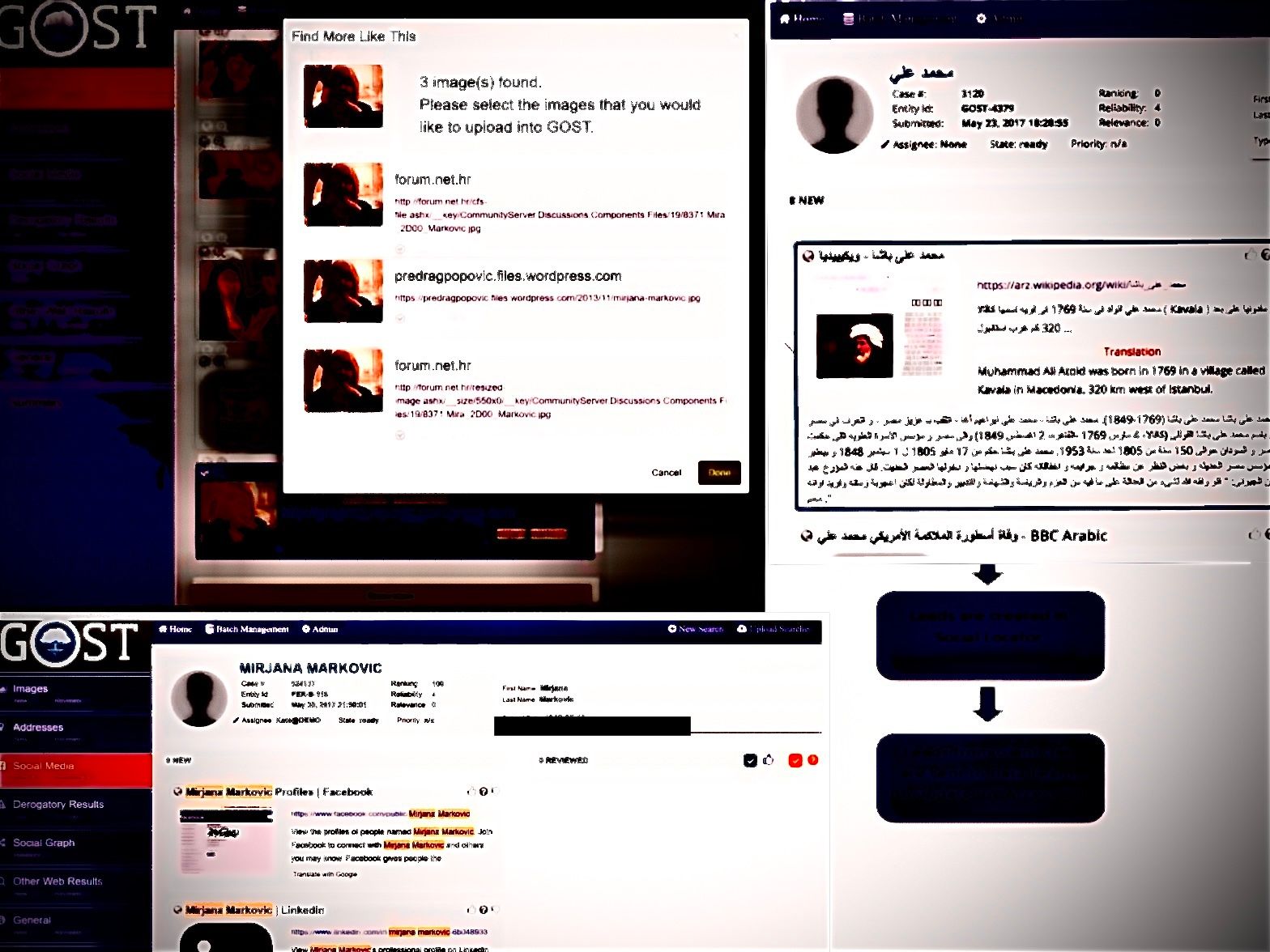

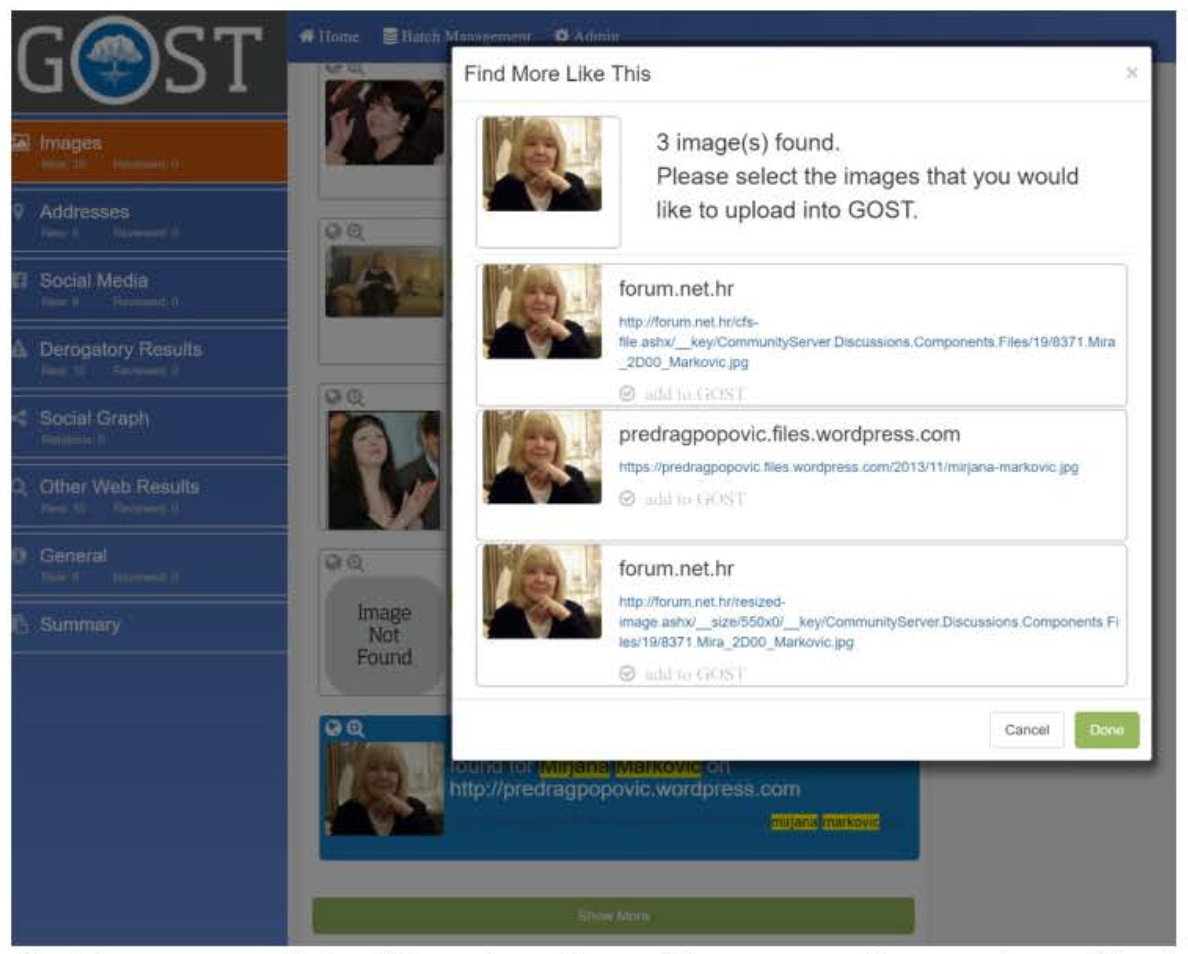

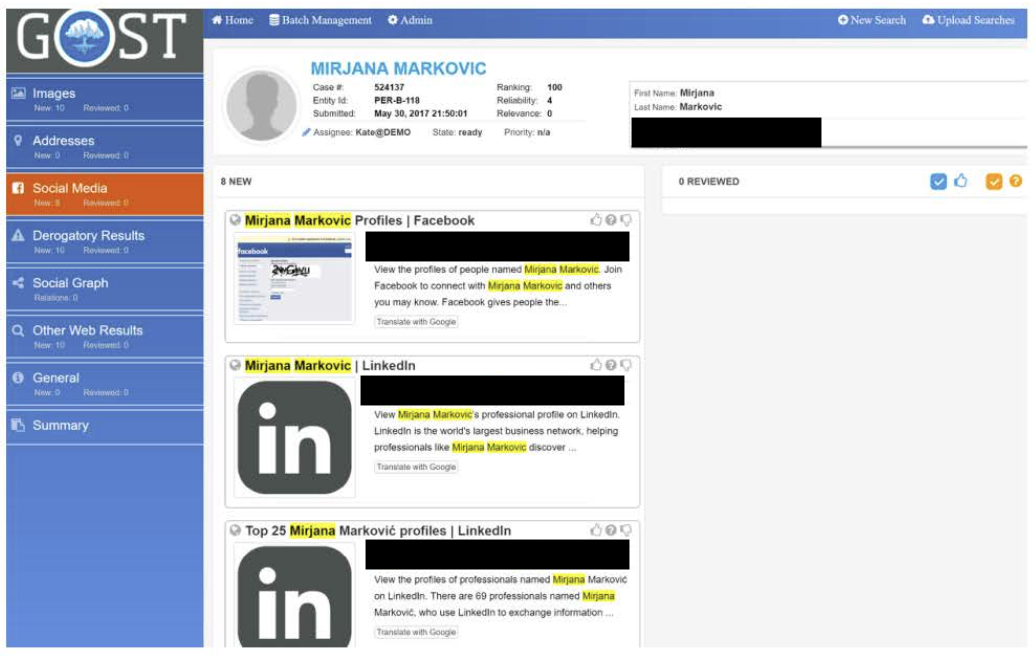

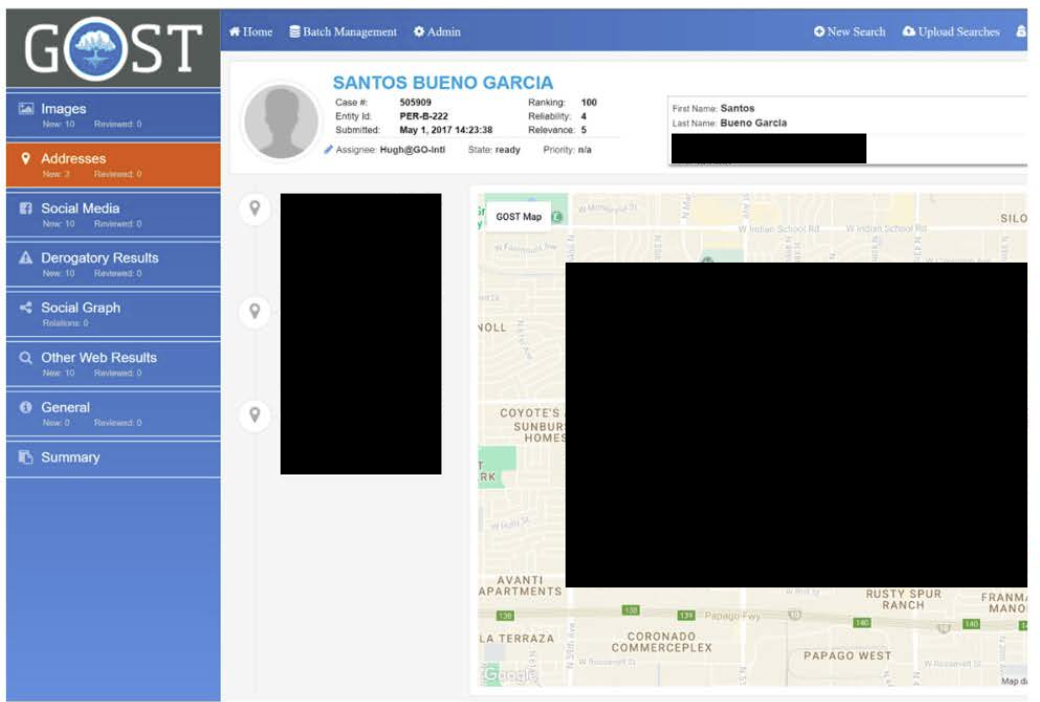

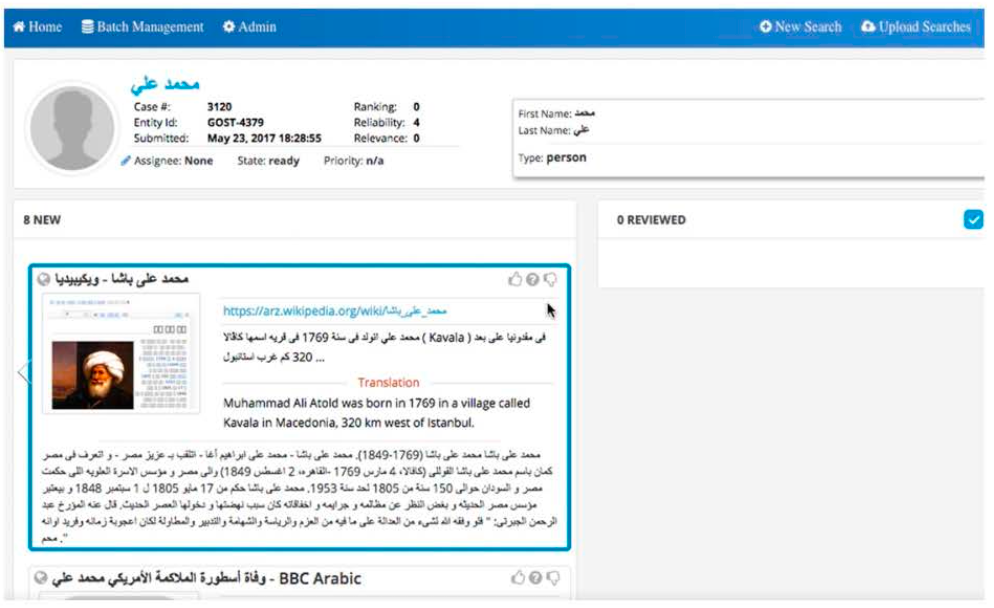

One document includes GOST’s catchphrase: “We see the people behind the data.” A GOST user guide included in the documents says GOST is “capable of providing behavioral based internet search capabilities.” Screenshots show analysts can search the system with identifiers such as name, address, email address, and country of citizenship. After a search, GOST provides a “ranking” from zero to 100 indicating how relevant it judges the results to be to the user’s specific mission.

The documents further explain that an applicant’s “potentially derogatory social media can be reviewed within the interface.” After clicking on a specific person, analysts can review images collected from social media or elsewhere, and give them a “thumbs up” or “thumbs down.” Analysts can then review the target’s social media profiles themselves, as well as their “social graph,” which potentially shows who the system believes they are connected to.

DHS has used GOST since 2014, according to a page of the user guide. ICE has paid Giant Oak Inc., the company behind the system, in excess of $10 million since 2017, according to public procurement records. A Giant Oak and DHS contract ended in August 2022, according to the records. Records also show that Customs and Border Protection (CBP), the Drug Enforcement Administration (DEA), the State Department, the Air Force, and the Bureau of the Fiscal Service, which is part of the U.S. Treasury, have all paid for Giant Oak services over nearly the last ten years.

A SELECTION OF SCREENSHOTS OF THE GOST USER GUIDE. REDACTIONS BY 404 MEDIA. IMAGE: 404 MEDIA.

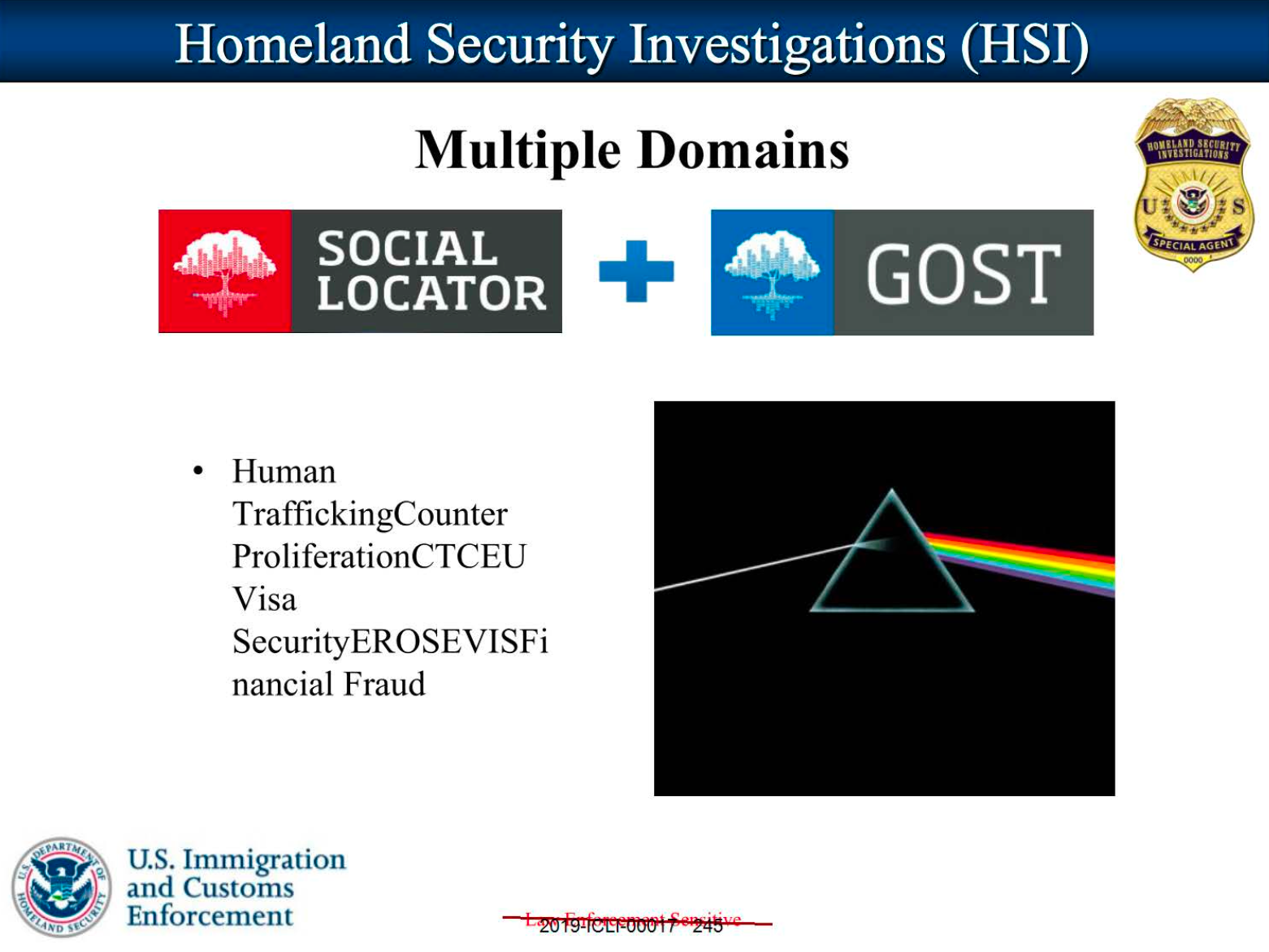

The FOIA documents specifically discuss Giant Oak’s use in an earlier 2016 pilot called the “HSI [Homeland Security Investigations] PATRIOT Social Media Pilot Program.” Under that pilot, the program would “target potential overstay violators from particular visa issuance Posts located in countries of concern.”

“This pilot project aims to more fully leverage social media as a tool to identify the whereabouts and activity of status violators, and provide enhanced knowledge about a non-immigrant visitors' social media postings, from the adjudication of the visa application, through admission to the United States, and during their time in the United States,” the document continues. In other words, the system would monitor social media to assist with immigration and visa issuance decisions. The document adds that “The process utilizes an automated social media vetting platform which is designed to communicate with the US government systems during the visa adjudication process and the time of travel to the US. The platform is designed to ingest biographic data that is then utilized to search for online social media presence, and provide vetting results at the time of visa application.”

In a brochure included in the documents, GOST says it searches both the internet and the “deep web,” a generic term that can include all manner of things such as commercial databases or sites hosted on the Tor anonymity network.

As Chinmayi Sharma, an associate professor at Fordham Law School, previously wrote on the legal analysis website Lawfare, PATRIOT is a system that cross-references a visa applicant across various government databases looking for derogatory information. The system “returns as an output either a red (recommendation to deny entry based on derogatory information) or a green light (no derogatory information unearthed) for the applicant. A HSI officer always reviews PATRIOT outputs before conveying them to consular officers for final decisions.” She writes that in May 2018, DHS backed away from a subsequent proposal to use machine learning technology to continuously monitor immigrants. (In May, I reported that CBP is using an AI-powered monitoring tool to screen travelers.)

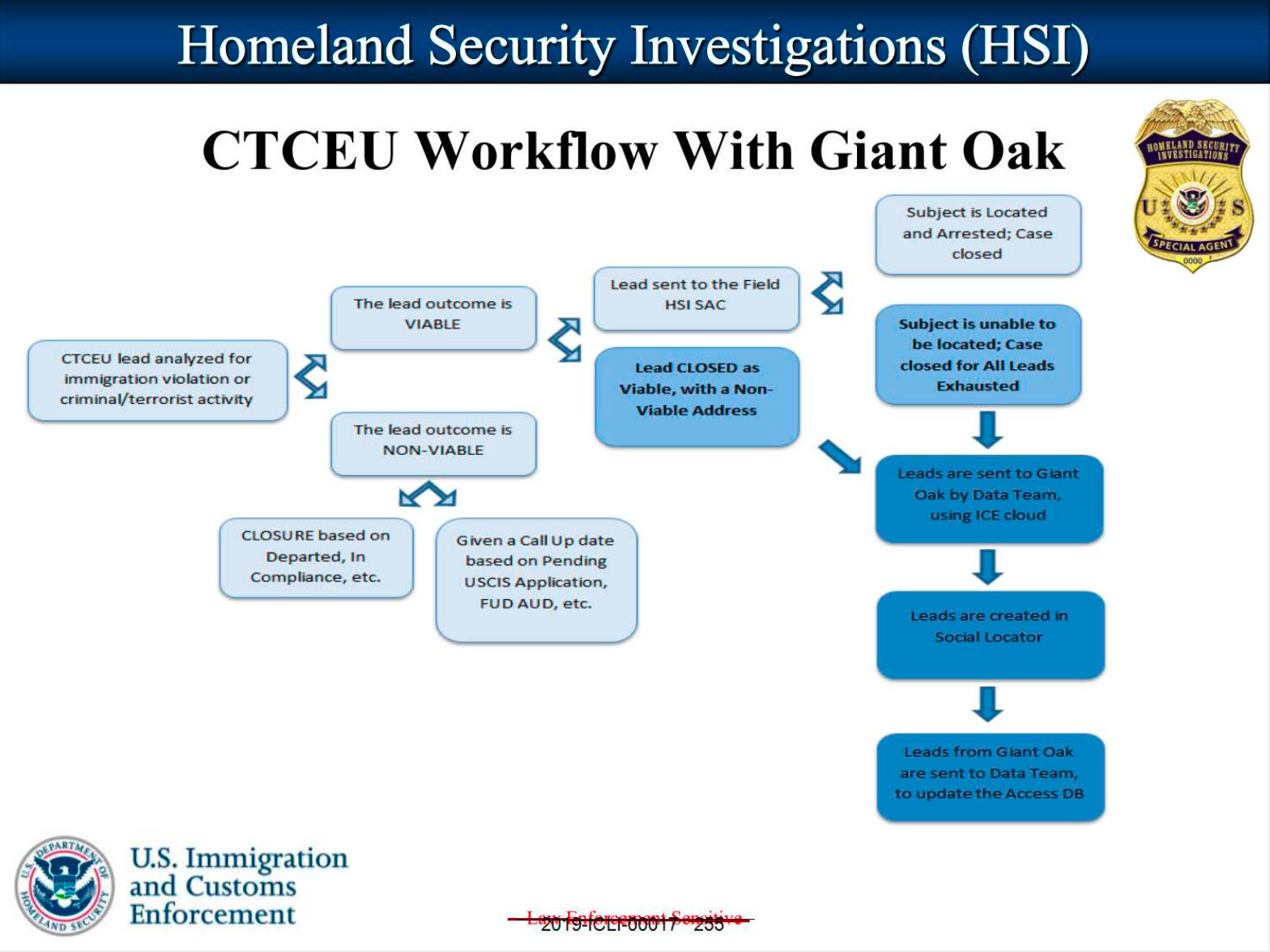

A SELECTION OF SCREENSHOTS OF A PRESENTATION INCLUDED WITH THE DOCUMENTS. IMAGE: 404 MEDIA.

The documents add that, at the time, the National Security Investigations Division (NSID) was working with Giant Oak “to further fine tune the targeting algorithm and refine the system's ability to filter various Arabic naming conventions.”

A presentation slide included in the documents also describes the workflow of ICE’s Counterterrorism and Criminal Exploitation Unit (CTCEU) with Giant Oak. Generally, analysts would check whether a lead on an immigration violation or criminal/terrorist activity was viable, send that tip to another section, and then, if the subject could not be located, feed that lead into Giant Oak to generate more leads.

After reviewing the documents, Julie Mao, co-founder and deputy director of Just Futures Law, told 404 Media in an email that “the records raise concern that ICE used Giant Oak to conduct discriminatory targeting of Arab communities and people from certain countries.” Mao also provided a copy of some GOST-related records Just Futures Law obtained via FOIA.

In a 2017 interview with Forbes, Giant Oak’s CEO Gary Shiffman said the tool is capable of “continuous evaluation,” which is where “you want to see if there’s a change in the pattern in their behavior over time.” Shiffman told Forbes that while at the U.S. military’s research arm DARPA, he worked on Nexus 7, a big data tool used in Afghanistan. Shiffman is also a former chief of staff for CBP, Forbes writes.

Giant Oak did not respond to a request for comment. Neither did ICE.