(Revised draft: 1) I initially wrote that IQ predicts between 10 and 50% at best; it turns out it beats random selection in the best of applications by less than 17%, typically <2%, 2) Showed the noise in IQ/Wealth, 3) Added information showing that the story behind the effectiveness of Average National IQ is, statistically, a fraud. The psychologists who engaged me on this piece, with verbose writeups, made the mistake of showing me the best they got: papers with the strongest pro-IQ arguments. They do not seem to know what noise means nor do they get the role of variance.)

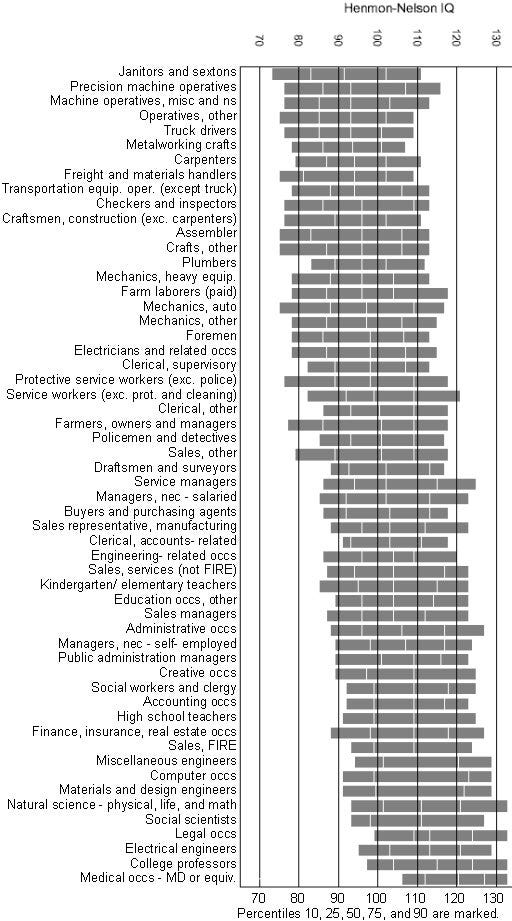

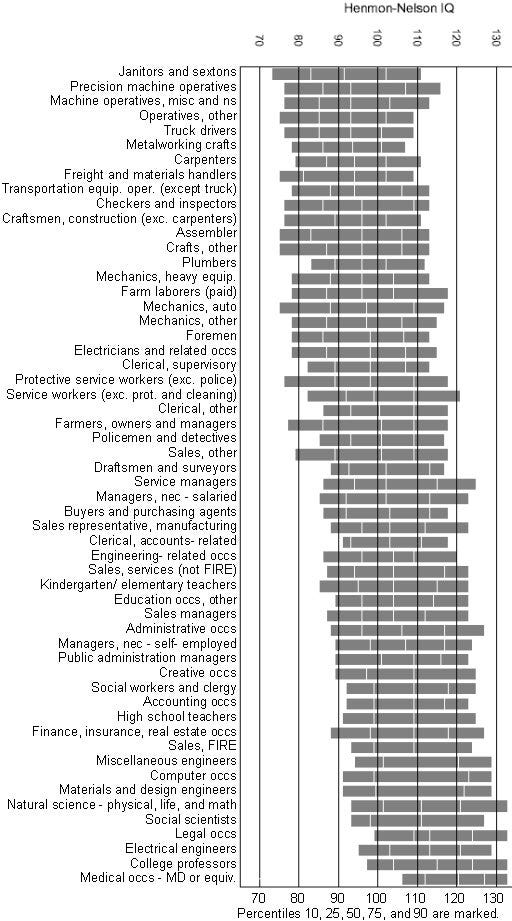

Background: “IQ” is a stale test meant to measure mental capacity but in fact mostly measures extreme unintelligence (learning difficulties), as well as, to a lesser extent, a form of intelligence, stripped of 2nd order effects. It is via negativa not via positiva. Designed for learning disabilities, and given that it is not too needed there (see argument further down), it ends up selecting for exam-takers, paper shufflers, obedient IYIs (intellectuals yet idiots), ill adapted for “real life”. The concept is poorly thought out mathematically (a severe flaw in correlation under fat tails, fails to properly deal with dimensionality, treats the mind as an instrument not a complex system), and seems to be promoted by:

- racists/eugenicists, people bent on showing that some populations have inferior mental abilities based on IQ test=intelligence; those have been upset with me for suddenly robbing them of a “scientific” tool, as evidenced by the bitter reactions to the initial post on Twitter and the smear campaigns by such mountebanks as Charles Murray. (Something observed by the great Karl Popper: psychologists have a tendency to pathologize people who bust them by tagging them with some type of disorder, or personality flaw such as “childish”, “narcissist”, “egomaniac”, or something similar.)

- psychometrics peddlers looking for suckers (military, large corporations) buying the “this is the best measure in psychology” argument when it is not even technically a measure: it explains at best between 2 and 13% of the performance in some tasks (those tasks that are similar to the test itself) [see interpretation of .5 correlation further down], minus the data massaging and statistical cherrypicking by psychologists; it doesn’t satisfy the monotonicity and transitivity required to have a measure (at best it is a concave measure). No measure that fails 80–95% of the time should be part of “science” (nor should psychology, owing to its sinister track record, be part of science (rather scientism), but that’s another discussion).

- It is at the bottom an immoral measure that, while not working, can put people (and, worse, groups) in boxes for the rest of their lives.

- There is no significant correlation (or any robust statistical association) between IQ and hard measures such as wealth. Most “achievements” linked to IQ are measured in circular stuff s.a. bureaucratic or academic success, things for test takers and salary earners in structured jobs that resemble the tests. Wealth may not mean success but it is the only “hard” number, not some discrete score of achievements. You can buy food with $30, not with other “successes” s.a. rank, social prominence, or having had a selfie with the Queen.
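One way to read a .5 correlation is as a betting edge rather than as "variance explained". The sketch below assumes, purely for illustration, that the two variables are jointly Gaussian (an assumption this piece itself disputes for real-world performance). Under that assumption the probability that both land on the same side of their means is 1/2 + arcsin(ρ)/π, a standard result; the function name is mine, not the author's.

```python
import math

def sign_agreement_probability(rho: float) -> float:
    """P(X and Y are on the same side of their means), bivariate Gaussian with correlation rho."""
    return 0.5 + math.asin(rho) / math.pi

# Edge over a coin flip for a few correlation levels (illustrative values only).
for rho in (0.1, 0.3, 0.5):
    p = sign_agreement_probability(rho)
    print(f"rho={rho:.1f}: same-side probability={p:.4f}, edge over coin flip={p - 0.5:.4f}")
```

At ρ = 0.5 the same-side probability is 2/3, an edge of about 16.7 percentage points over random selection; smaller correlations shrink the edge quickly.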

If you want to detect how someone fares at a task, say loan sharking, tennis playing, or random matrix theory, make him/her do that task; we don’t need theoretical exams for a real world function by probability-challenged psychologists. Traders get it right away: hypothetical P/L from “simulated” paper strategies doesn’t count. Performance=actual. What goes on in people’s heads as a reaction to an image on a screen doesn’t exist (except via negativa).

Fat Tails: If IQ is Gaussian by construction (well, almost) and if real world performance were, net, fat tailed (it is), then either the covariance between IQ and performance doesn’t exist or it is uninformational. It will show a finite number in sample but doesn’t exist statistically, and the metrics will overestimate the predictability. Another problem: when they say “black people are x standard deviations away”, they don’t know what they are talking about. Different populations have different variances, even different skewness, and these comparisons require richer models. These are severe, severe mathematical flaws (a billion papers in psychometrics wouldn’t count if you have such a flaw). See the formal treatment in my next book.
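A rough simulation can illustrate the instability, though not the formal argument. It is a sketch under loud assumptions: the "score" is standard Gaussian, "performance" is the score plus noise, and the fat-tailed noise is a symmetric Pareto with tail exponent 1.2 (infinite variance) scaled by 0.1. All names and parameters are hypothetical choices of mine. The point is only that the in-sample Pearson correlation always prints a finite number, yet under fat tails that number jumps around from sample to sample.

```python
import math
import random

def pearson(xs, ys):
    """Plain sample Pearson correlation."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def thin_noise(rng):
    # Gaussian noise: finite variance, well-behaved correlation.
    return rng.gauss(0.0, 1.0)

def fat_noise(rng, alpha=1.2, scale=0.1):
    # Symmetric Pareto noise; alpha < 2 means infinite variance (assumed value).
    sign = 1.0 if rng.random() < 0.5 else -1.0
    return sign * scale * rng.paretovariate(alpha)

def spread_of_sample_correlation(noise, rng, n=500, trials=200):
    """Return (min, max, standard deviation) of the sample correlation across trials."""
    rs = []
    for _ in range(trials):
        xs = [rng.gauss(0.0, 1.0) for _ in range(n)]
        ys = [x + noise(rng) for x in xs]
        rs.append(pearson(xs, ys))
    mean_r = sum(rs) / trials
    sd = math.sqrt(sum((r - mean_r) ** 2 for r in rs) / trials)
    return min(rs), max(rs), sd

rng = random.Random(0)  # fixed seed so the run is repeatable
thin = spread_of_sample_correlation(thin_noise, rng)
fat = spread_of_sample_correlation(fat_noise, rng)
print("Gaussian noise:   min=%.3f max=%.3f sd=%.3f" % thin)
print("Fat-tailed noise: min=%.3f max=%.3f sd=%.3f" % fat)
```

With Gaussian noise the estimates cluster tightly; with the fat-tailed noise a handful of extreme observations dominate the sums, so any single sample's correlation says little about the next one.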

Mensa members: typically high “IQ” losers in Birkenstocks.

But the “intelligence” in IQ is determined by academic psychologists (no geniuses), like the “paper trading” we mentioned above, via statistical constructs s.a. correlation that, as I show here (see Fig. 1), they patently don’t understand. It does correlate to very negative performance (as it was initially designed to detect learning special needs) but then any measure would work there. A measure that works in the left tail not the right tail (IQ decorrelates as it goes higher) is problematic. We have gotten similar results since the famous Terman longitudinal study, even with massaged data for later studies. To get the point, consider that if someone has mental needs, there will be 100% correlation between performance and IQ tests. But the performance doesn’t correlate as well at higher levels, though, unaware of the effect of the nonlinearity, the psychologists will think it does. (The statistical spin, as a marketing argument, is that a person with an IQ of 70 cannot prove theorems, which is obvious for a measure of unintelligence, but they fail to reveal how many IQs of 150 are doing menial jobs.)
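The left-tail effect can be mimicked with a toy construction, in the spirit of the argument above and not a reproduction of any figure. The rule is an assumption of mine: scores are drawn from a Gaussian with mean 100 and standard deviation 15, performance tracks the score exactly below 100, and above 100 performance is pure independent noise.

```python
import math
import random

def pearson(xs, ys):
    """Plain sample Pearson correlation."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

rng = random.Random(1)  # fixed seed for repeatability
n = 20000
iq = [rng.gauss(100.0, 15.0) for _ in range(n)]

# Toy rule (assumption): perfect dependence below 100, no dependence above.
perf = [(q - 100.0) / 15.0 if q < 100.0 else rng.gauss(0.0, 1.0) for q in iq]

low = [(q, p) for q, p in zip(iq, perf) if q < 100.0]
high = [(q, p) for q, p in zip(iq, perf) if q >= 100.0]

r_all = pearson(iq, perf)
r_low = pearson([q for q, _ in low], [p for _, p in low])
r_high = pearson([q for q, _ in high], [p for _, p in high])

print(f"whole sample:   r = {r_all:.3f}")
print(f"scores < 100:   r = {r_low:.3f}")
print(f"scores >= 100:  r = {r_high:.3f}")
```

In this construction the whole-sample correlation comes out at roughly one half even though the score carries no information at all in the upper half, which is how a single headline correlation can hide the nonlinearity.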

It is a false comparison to claim that IQ “measures the hardware” rather than the software. It can measure some arbitrarily selected mental abilities (in a testing environment) believed to be useful. However, if you take a Popperian-Hayekian view on intelligence, you would realize that to measure future needs you would need to know the mental skills needed in a future ecology, which requires predictability of said future ecology. It also requires the skills to make it to the future (hence the need for mental biases for survival).

Real Life: In academia there is no difference between academia and the real world; in the real world there is. 1) When someone asks you a question in the real world, you focus first on “why is he/she asking me that?”, which shifts you to the environment (see Fat Tony vs Dr John in The Black Swan) and distracts you from the problem at hand. Philosophers have known about that problem forever. Only suckers don’t have that instinct. Further, take the sequence {1,2,3,4,x}. What should x be? Only someone who is clueless about induction would answer 5 as if it were the only answer (see Goodman’s problem in a philosophy textbook or ask your closest Fat Tony) [Note: We can also apply here Wittgenstein’s rule-following problem, which states that any of an infinite number of functions is compatible with any finite sequence. Source: Paul Boghossian]. Not only clueless, but obedient enough to want to think in a certain way. 2) Real life never offers crisp questions with crisp answers (most questions don’t have answers; perhaps the worst problem with IQ is that it seems to select for people who don’t like to say “there is no answer, don’t waste time, find something else”.) 3) It takes a certain type of person to waste intelligent concentration on classroom/academic problems. These are lifeless bureaucrats who can muster sterile motivation. Some people can only focus on problems that are real, not fictional textbook ones (see the note below where I explain that I can only concentrate with real not fictional problems). 4) IQ doesn’t detect convexity of mistakes (by an argument similar to bias-variance you need to make a lot of small inconsequential mistakes in order to avoid a large consequential one. See Antifragile and how any measure of “intelligence” w/o convexity is sterile: edge.org/conversation/n…). To do well you must survive; survival requires some mental biases directing to some errors. 5) Fooled by Randomness: seeing shallow patterns is not a virtue; it leads to naive interventionism. Some psychologist wrote back to me: “IQ selects for pattern recognition, essential for functioning in modern society”. No. Not seeing patterns except when they are significant is a virtue in real life. 6) To do well in life you need depth and ability to select your own problems and to think independently.

National IQ is a Fraud. From engaging participants (who throw buzzwords at you), I realized that the concept has huge variance, enough to be uninformative. See graph. And note that the variance within populations is not used to draw conclusions (you average over functions, don’t use the function over averages), a problem acute for tail contributions.
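The "average over functions, not the function over averages" point is Jensen's inequality, and a small calculation makes it concrete. Everything here is a hypothetical setup of mine: two Gaussian populations with the same mean of 100 but standard deviations of 15 and 20, and a convex "tail payoff" max(x − 120, 0). The closed form used for the Gaussian expectation is the standard one.

```python
from statistics import NormalDist

def average_of_payoff(mean: float, sd: float, strike: float) -> float:
    """E[max(X - strike, 0)] for X ~ Normal(mean, sd), via the standard closed form."""
    std = NormalDist()
    d = (strike - mean) / sd
    return sd * (std.pdf(d) - d * (1.0 - std.cdf(d)))

def payoff_of_average(mean: float, strike: float) -> float:
    """The payoff applied to the population average: the shortcut the text warns against."""
    return max(mean - strike, 0.0)

strike = 120.0  # hypothetical threshold for a "tail contribution"
for sd in (15.0, 20.0):
    print(
        f"sd={sd:>4}: payoff of the average = {payoff_of_average(100.0, strike):.3f}, "
        f"average of the payoff = {average_of_payoff(100.0, sd, strike):.3f}"
    )
```

Both populations have the same average and hence the same (zero) payoff of the average, yet the wider one has well over twice the average payoff. Comparing means alone throws away exactly the information that drives tail outcomes.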

Notice the noise: the top 25% of janitors have higher IQ than the bottom 25% of college professors, even counting the circularity. The circularity bias shows most strikingly with MDs as medical schools require a higher SAT score.

Recall from Antifragile that if wealth were fat tailed, you’d need to focus on the tail minority (for which IQ has unpredictable payoff), never the average. Further it is leading to racist imbeciles who think that if a country has an IQ of 82 (assuming it is true not the result of lack of such training), it means politically that all the people there have an IQ of 82, hence let’s ban them from immigrating. As I said they don’t even get elementary statistical notions such as variance. Some people use National IQ as a basis for genetic differences: it doesn’t explain the sharp changes in Ireland and Croatia upon European integration, or, in the other direction, the difference between Israeli and U.S. Ashkenazis.
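To see what a national average of 82 does and does not say, take the score at face value and, as a simplifying assumption the text itself would question, treat it as Gaussian with a standard deviation of 15. The function name and the thresholds are illustrative.

```python
from statistics import NormalDist

def share_above(threshold: float, mean: float, sd: float = 15.0) -> float:
    """Fraction of a Normal(mean, sd) population scoring above the threshold."""
    return 1.0 - NormalDist(mean, sd).cdf(threshold)

national_mean = 82.0  # the figure used in the text
for threshold in (82.0, 100.0, 115.0):
    share = share_above(threshold, national_mean)
    print(f"share above {threshold:.0f}: {share:.2%}")
```

Even under these assumptions about 11.5% of such a population scores above 100, so treating every individual as "an 82" confuses a mean with a distribution.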

Additional Variance: Unlike measurements of height or wealth, which carry a tiny relative error, many people get yuugely different results for the same IQ test (I mean the same person!), up to 2 standard deviations as measured across people, higher than sampling error in the population itself! This additional source of sampling error weakens the effect by propagation of uncertainty way beyond its predictability when applied to the evaluation of a single individual. It also tells you that you as an individual are vastly more diverse than the crowd, at least with respect to that measure!
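The propagation-of-uncertainty point can be put in one formula. If an observed score is a stable component plus independent test-to-test noise, its correlation with any outcome shrinks by the square root of the reliability (the classical attenuation result). The sketch below assumes independent noise, and the noise sizes are illustrative values, not figures from the text.

```python
import math

def attenuated_correlation(r_true: float, true_sd: float, noise_sd: float) -> float:
    """Correlation between a noisy score and an outcome, given the noise-free correlation.

    Assumes the noise is independent of both the stable score component and the outcome.
    """
    reliability = true_sd ** 2 / (true_sd ** 2 + noise_sd ** 2)
    return r_true * math.sqrt(reliability)

# Hypothetical within-person noise levels, in the same units as the score.
for noise_sd in (0.0, 7.5, 15.0):
    r = attenuated_correlation(0.5, true_sd=15.0, noise_sd=noise_sd)
    print(f"noise sd={noise_sd:>4}: observed correlation = {r:.3f}")
```

When the within-person noise is as large as the spread across people, a noise-free correlation of 0.5 drops to about 0.35 for a single measurement, before any of the other objections apply.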