Ministers will not immediately enforce online safety bill powers to scan apps after WhatsApp threatened shutdown

www.ft.com

UK pulls back from clash with Big Tech over private messaging

The online safety bill is one of the toughest attempts by any government to make tech companies responsible for the content shared on their networks © Getty Images/iStockphoto

Cristina Criddle and Anna Gross in London 4 HOURS AGO

The UK government will concede it will not use controversial powers in the online safety bill to scan messaging apps for harmful content until it is “technically feasible” to do so, postponing measures that critics say threaten users’ privacy.

A planned statement to the House of Lords on Wednesday afternoon will mark an eleventh-hour effort by ministers to end a stand-off with tech companies, including WhatsApp, that have threatened to pull their services from the UK over what they claimed was an intolerable threat to millions of users' security.

The statement is set to outline that Ofcom, the tech regulator, will only require companies to scan their networks when a technology is developed that is capable of doing so, according to people briefed on the plan. Many security experts believe it could be years before any such technology is developed, if ever.

“A notice can only be issued where technically feasible and where technology has been accredited as meeting minimum standards of accuracy in detecting only child sexual abuse and exploitation content,” the statement will say.

The online safety bill, which has been in development for several years and is now in its final stages in parliament, is one of the toughest attempts by any government to make Big Tech companies responsible for the content that is shared on their networks.

Social media platforms have railed against provisions in the bill that would allow the UK regulator to force them to allow their encrypted messages to be monitored for harmful content, including child sexual exploitation material.

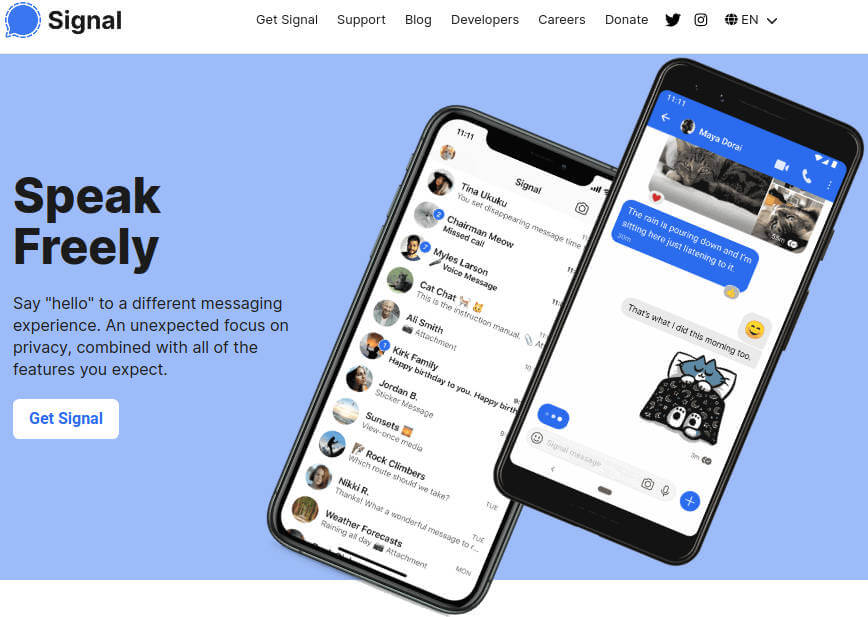

WhatsApp, owned by Facebook parent Meta, and Signal, another popular encrypted messaging app, are among those that have threatened to exit the UK market should they be ordered to weaken end-to-end encryption, a widely used security technology that allows only the sender and recipient of a message to view its contents.

Officials have now privately acknowledged to tech companies that there is no current technology able to scan end-to-end encrypted messages that would not also undermine users’ privacy, according to several people briefed on the government’s thinking.

However, the statute will still give Ofcom powers to require platforms to develop or source new technology, the people said.

Critics have long argued that such technology does not exist, and that current scanning tools have been found to make errors, wrongly identifying safe content as harmful and requiring flagged material to be checked by human monitors, thereby exposing private content.

The government said on Wednesday that its position on the issue “has not changed”.

“As has always been the case, as a last resort, on a case-by-case basis and only when stringent privacy safeguards have been met, [the legislation] will enable Ofcom to direct companies to either use, or make best efforts to develop or source, technology to identify and remove illegal child sexual abuse content — which we know can be developed,” the government said.

Child safety campaigners have spent years pushing the government to be tougher on tech companies over abuse material that is shared on their apps.

Richard Collard, head of child safety online policy at the National Society for the Prevention of Cruelty to Children, said: “Our polling shows the UK public overwhelmingly support measures to tackle child abuse in end-to-end encrypted environments. Tech firms can show industry leadership by listening to the public and investing in technology that protects both the safety and privacy rights of all users.”

Additional reporting by John Thornhill
