
Sony Music warns AI companies against “unauthorized use” of its content

Did that AI system use Doja Cat records for training data?


The label representing superstars like Billy Joel, Doja Cat, and Lil Nas X sent a letter to more than 700 companies.

By Mia Sato, platforms and communities reporter with five years of experience covering the companies that shape technology and the people who use their tools.

May 17, 2024, 10:29 AM EDT



Photo: Dania Maxwell / Los Angeles Times via Getty Images

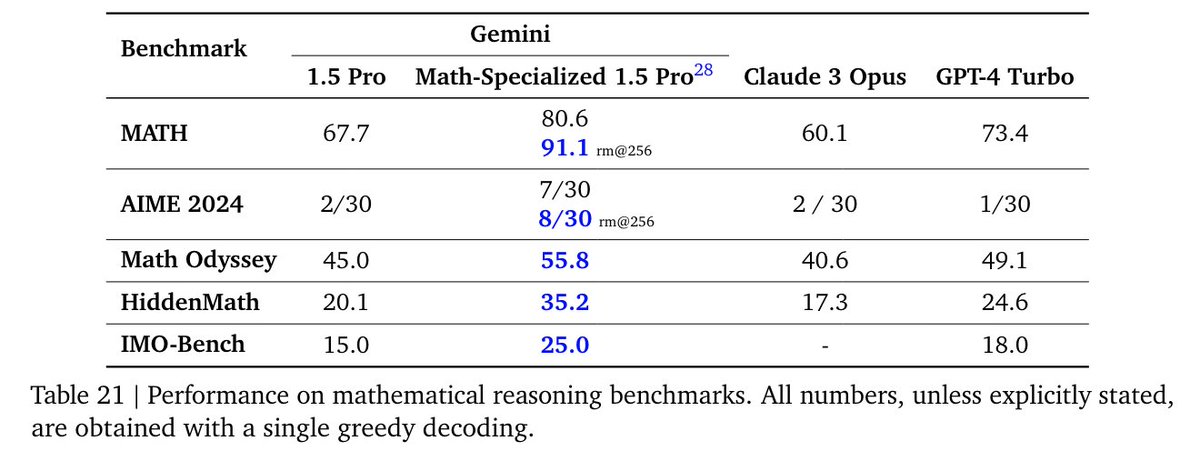

Sony Music sent letters to hundreds of tech companies and warned them against using its content without permission, according to Bloomberg, which obtained a copy of the letter.

The letter was sent to more than 700 AI companies and streaming platforms and said that “unauthorized use” of Sony Music content for AI systems denies the label and its artists “control and compensation” for their work. The letter, according to Bloomberg, calls out the “training, development or commercialization of AI systems” that use copyrighted material, including music, art, and lyrics. Sony Music artists include Doja Cat, Billy Joel, Celine Dion, and Lil Nas X, among many others. Sony Music didn’t immediately respond to a request for comment.

The music industry has been particularly aggressive in its efforts to control how its copyrighted work is used when it comes to AI tools. On YouTube, where AI voice clones of musicians exploded last year, labels have brokered a strict set of rules that apply to the music industry (everyone else gets much looser protections). At the same time, the platform has introduced AI music tools like Dream Track, which generates songs in the style of a handful of artists based on text prompts.

Perhaps the most visible example of the fight over music copyright and AI has been on TikTok. In February, Universal Music Group pulled its entire roster of artists’ music from the platform after licensing negotiations fell apart. Viral videos fell silent as songs by artists like Taylor Swift and Ariana Grande disappeared from the platform.

The absence, though, didn’t last long: in April, leading up to the release of her new album, Swift’s music silently returned to TikTok (gotta get that promo somehow). By early May, the stand-off had ended, and UMG artists were back on TikTok. The two companies say a deal was reached with more protections around AI and “new monetization opportunities” around e-commerce.

“TikTok and UMG will work together to ensure AI development across the music industry will protect human artistry and the economics that flow to those artists and songwriters,” a press release read.

Beyond copyright, AI-generated voice clones used to create new songs have raised questions around how much control a person has over their voice. AI companies have trained models on libraries of recordings — often without consent — and allowed the public to use the models to generate new material. But even claiming right of publicity and likeness could be challenging, given the patchwork of laws that vary state by state in the US.