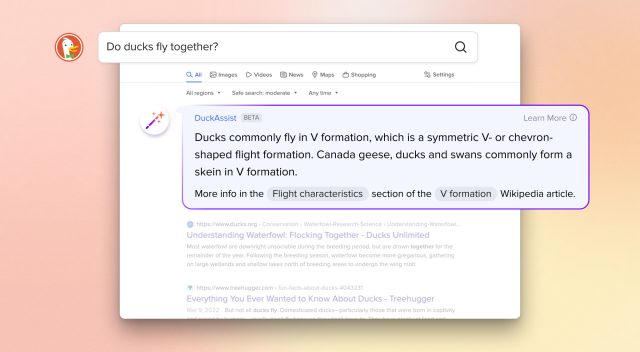

“Copilot” was trained using billions of lines of open-source code hosted on sites like Github. The people who wrote the code are not happy.

www.vice.com

GitHub Users Want to Sue Microsoft For Training an AI Tool With Their Code

By Janus Rose

NEW YORK, US

October 18, 2022, 2:02pm

Open-source coders are investigating a potential class-action lawsuit against Microsoft after the company used their publicly available code to train its latest AI tool.

On a website launched to spearhead an investigation of the company, programmer and lawyer Matthew Butterick writes that he has assembled a team of class-action litigators to lead a suit opposing the tool, called GitHub Copilot.

Microsoft, which bought the collaborative coding platform GitHub in 2018, was met with suspicion from open-source communities when it launched Copilot back in June. The tool is an extension for Microsoft’s Visual Studio coding environment that uses prediction algorithms to auto-complete lines of code. This is done using an AI model called Codex, which was created and trained by OpenAI using data scraped from code repositories on the open web.

Microsoft has stated that the tool was “trained on tens of millions of public repositories” of code, including those on GitHub, and that it “believe[s] that is an instance of transformative fair use.”

Obviously, some open-source coders disagree.

“Like Neo plugged into the Matrix, or a cow on a farm, Copilot wants to convert us into nothing more than producers of a resource to be extracted,” Butterick wrote on his website. “Even the cows get food & shelter out of the deal. Copilot contributes nothing to our individual projects. And nothing to open source broadly.”

Some programmers have even noticed that Copilot seems to copy their code in its resulting outputs. On Twitter, open-source users have documented examples of the software spitting out lines of code that are strikingly similar to the ones in their own repositories.

GitHub has stated that the training data taken from public repositories “is not intended to be included verbatim in Codex outputs,” and claims that “the vast majority of output (>99%) does not match training data,” according to the company’s internal analysis.

Microsoft essentially puts the legal onus on end users to ensure that the code Copilot spits out doesn’t violate any intellectual property laws. Butterick writes that this is merely a smokescreen: in practice, he argues, GitHub Copilot acts as a “selfish” interface to open-source communities, one that hijacks their expertise while offering nothing in return. As the Joseph Saveri Law Firm, which is working with Butterick on the investigation, put it: “It appears Microsoft is profiting from others’ work by disregarding the conditions of the underlying open-source licenses and other legal requirements.”

Microsoft and GitHub could not be reached for comment.

While open-source code is generally free to use and adapt, most open-source licenses require anyone who uses the code to credit its original source. Naturally, this becomes practically impossible when you are scraping billions of lines of code to train an AI model, and hugely problematic when the resulting product is being sold by a massive corporation like Microsoft. As Butterick writes, “How can Copilot users comply with the license if they don’t even know it exists?”

The controversy is yet another chapter in an ongoing debate over the ethics of training AI on artwork, music, and other data scraped from the open web without permission from their creators. Some artists have begun publicly criticizing image-generating AI like DALL-E and Midjourney, which charge users for access to powerful algorithms that were trained on the artists’ original work. These systems can often produce new works that mimic a particular artist’s style, usually when users explicitly instruct them to copy that artist.

In the past, human-created works that build on or adapt previous works have generally been A-OK under U.S. copyright law, often qualifying as “fair use” when they are sufficiently “transformative.” But as Butterick notes on his website, that principle has never been tested when it comes to works created by AI systems that are trained on other works collected en masse from the web.

Butterick seems intent on finding out, and is encouraging potential plaintiffs to contact his legal team in preparation for a possible class-action suit against Copilot.

“We needn’t delve into Microsoft’s very checkered history with open source to see Copilot for what it is: a parasite,” he writes on the website. “The legality of Copilot must be tested before the damage to open source becomes irreparable.”

www.thecoli.com