TheDarceKnight

Veteran

yeah it's gonna be a shytshow

arstechnica.com


Texas firm allegedly behind fake Biden robocall that told people not to vote

Tech and telecom firms helped New Hampshire AG trace call to “Life Corporation.”


JON BRODKIN - 2/7/2024, 4:04 PM

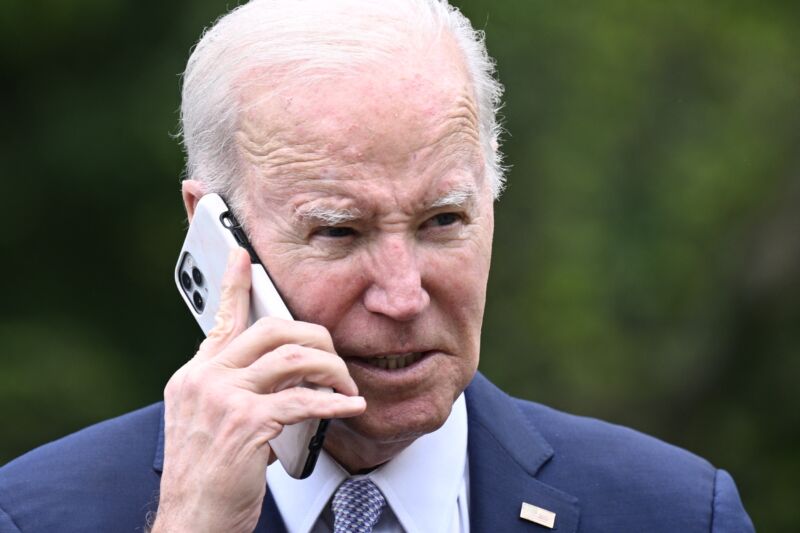

US President Joe Biden speaks on the phone in the Rose Garden of the White House in Washington, DC, on May 1, 2023.

Getty Images | Brendan Smialowski


An anti-voting robocall that used an artificially generated clone of President Biden's voice has been traced to a Texas company called Life Corporation "and an individual named Walter Monk," according to an announcement by New Hampshire Attorney General John Formella yesterday.

The AG office's Election Law Unit issued a cease-and-desist order to Life Corporation for violating a New Hampshire law that prohibits deterring people from voting "based on fraudulent, deceptive, misleading, or spurious grounds or information," the announcement said.

As previously reported, the fake Biden robocall was placed before the New Hampshire Presidential Primary Election on January 23. The AG's office said it is investigating "whether Life Corporation worked with or at the direction of any other persons or entities."

"What a bunch of malarkey," the fake Biden voice said. "You know the value of voting Democratic when our votes count. It's important that you save your vote for the November election. We'll need your help in electing Democrats up and down the ticket. Voting this Tuesday only enables the Republicans in their quest to elect Donald Trump again. Your vote makes a difference in November, not this Tuesday."

The artificial Biden voice seems to have been created using a text-to-speech engine offered by ElevenLabs, which reportedly responded to the news by suspending the account of the user who created the deepfake.

The robocalls "illegally spoofed their caller ID information to appear to come from a number belonging to a former New Hampshire Democratic Party Chair," the AG's office said. Formella, a Republican, said that "AI-generated recordings used to deceive voters have the potential to have devastating effects on the democratic election process."

Tech firms helped investigation

Formella's announcement said that YouMail and Nomorobo helped identify the robocalls and that the calls were traced to Life Corporation and Walter Monk with the help of the Industry Traceback Group run by the telecom industry. Nomorobo estimated the number of calls to be between 5,000 and 25,000.

"The tracebacks further identified the originating voice service provider for many of these calls to be Texas-based Lingo Telecom. After Lingo Telecom was informed that these calls were being investigated, Lingo Telecom suspended services to Life Corporation," the AG's office said.

The Election Law Unit issued document preservation notices and subpoenas for records to Life Corporation, Lingo Telecom, and other entities "that may possess records relevant to the Attorney General’s ongoing investigation," the announcement said.

Media outlets haven't had much luck in trying to get a comment from Monk. "At his Arlington office, the door was locked when NBC 5 knocked," an NBC 5 Dallas-Fort Worth article said. "A man inside peeked around the corner to see who was ringing the doorbell but did not answer the door."

The New York Times reports that "a subsidiary of Life Corporation called Voice Broadcasting Corp., which identifies Mr. Monk as its founder on its website, has received numerous payments from the Republican Party’s state committee in Delaware, most recently in 2022, as well as payments from congressional candidates in both parties."

A different company, also called Life Corporation, posted a message on its home page that said, "We are a medical device manufacturer located in Florida and are not affiliated with the Texas company named in current news stories."

FCC warns carrier

The Federal Communications Commission said yesterday that it is taking action against Lingo Telecom. The FCC said it sent a letter demanding that Lingo "immediately stop supporting unlawful robocall traffic on its networks," and a K4 Order that "strongly encourages other providers to refrain from carrying suspicious traffic from Lingo."

"The FCC may proceed to require other network providers affiliated with Lingo to block its traffic should the company continue this behavior," the agency said.

The FCC is separately planning a vote to declare that the use of AI-generated voices in robocalls is illegal under the Telephone Consumer Protection Act.

Microsoft just dropped VASA-1. This AI can make single image sing and talk from audio reference expressively. Similar to EMO from Alibaba

10 wild examples:

1. Mona Lisa rapping Paparazzi pic.twitter.com/LSGF3mMVnD

April 18, 2024

This is already a problem though anyway

Happened REAL heavy back in 2016.

Nah, the velocity of disinformation is much higher