1/4

@AngryTomtweets

Skywork just dropped Super Agents.

The world's first open-source deep research agent framework.

More here

https://video.twimg.com/amplify_video/1925196592757592065/vid/avc1/1280x720/_0rkMLviQhK3hGBq.mp4

2/4

@heyrobinai

ok now i need to try this immediately

3/4

@AngryTomtweets

yeah... you should, man!

4/4

@shawnchauhan1

This could redefine productivity.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/11

@Skywork_ai

Introducing Skywork Super Agents — the originator of AI workspace agents, which turn your 8 hours of work into 8 minutes.

Try it now: The Originator of AI Workspace Agents

https://video.twimg.com/amplify_video/1925196592757592065/vid/avc1/1280x720/_0rkMLviQhK3hGBq.mp4

2/11

@Skywork_ai

Content creation is awful. We spend 60% of our week producing paperwork instead of driving real business value.

So here come Skywork Super Agents, letting you generate docs, slides, sheets, webpages, and podcasts from a SINGLE prompt, cutting your work time by up to 90%.

https://video.twimg.com/amplify_video/1925196679395033088/vid/avc1/640x368/DwQBCUKPGmVYkWg1.mp4

3/11

@Skywork_ai

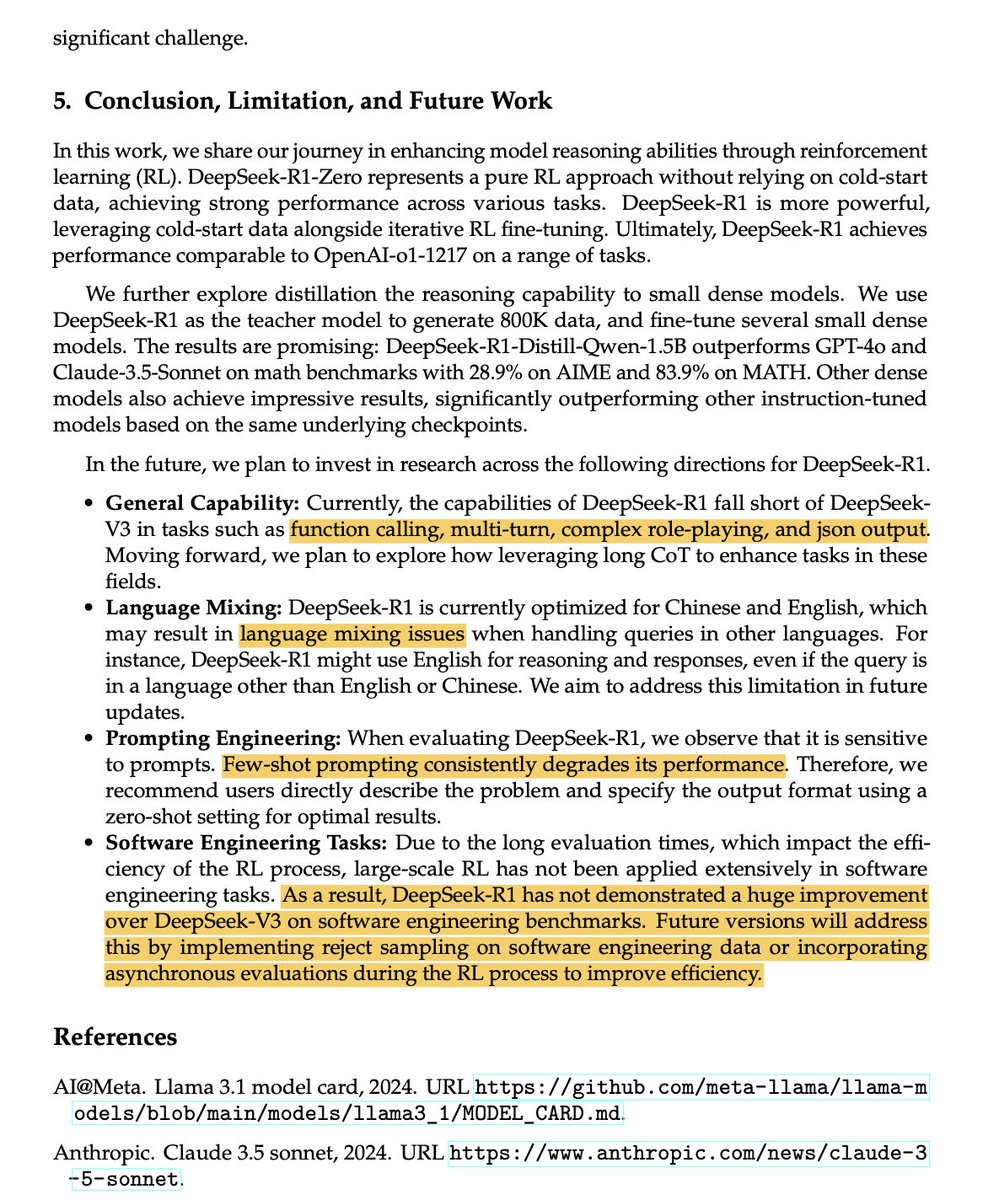

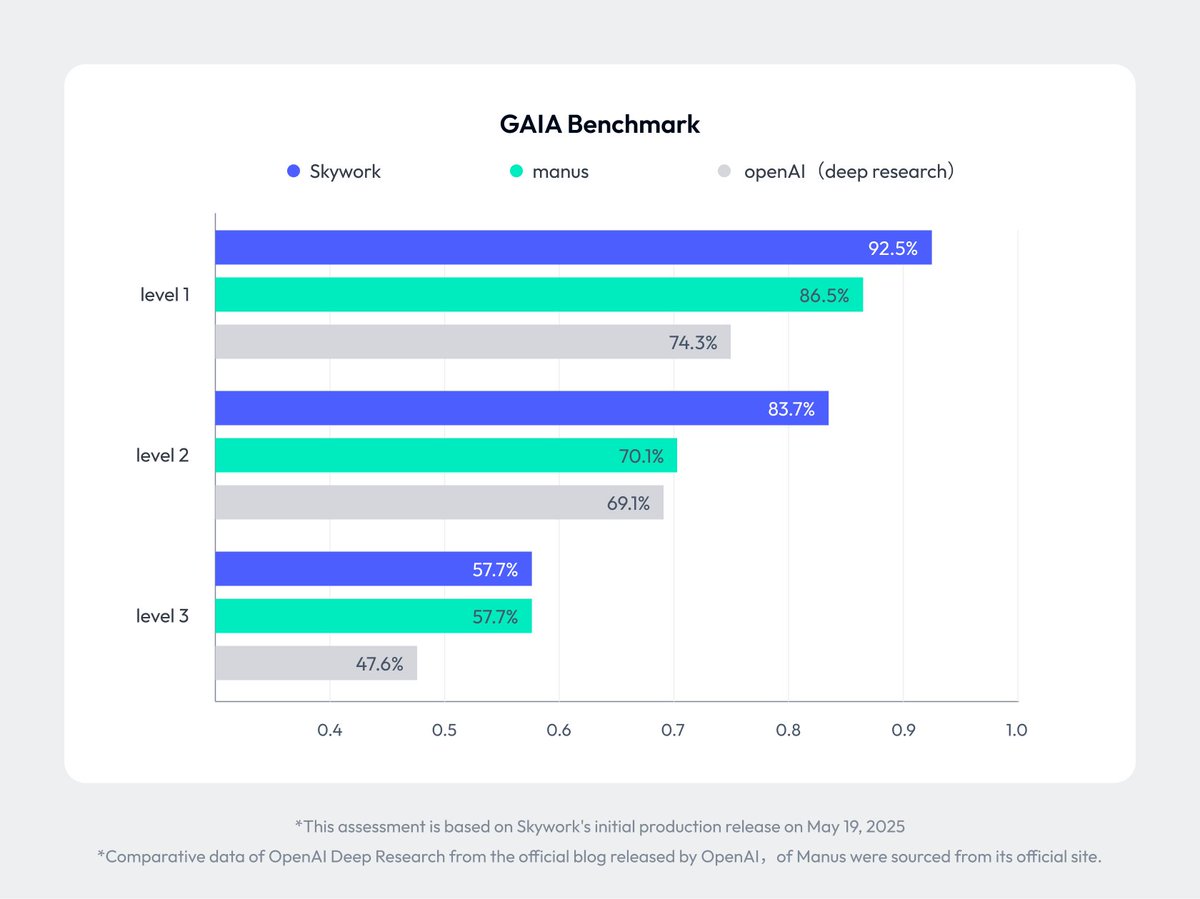

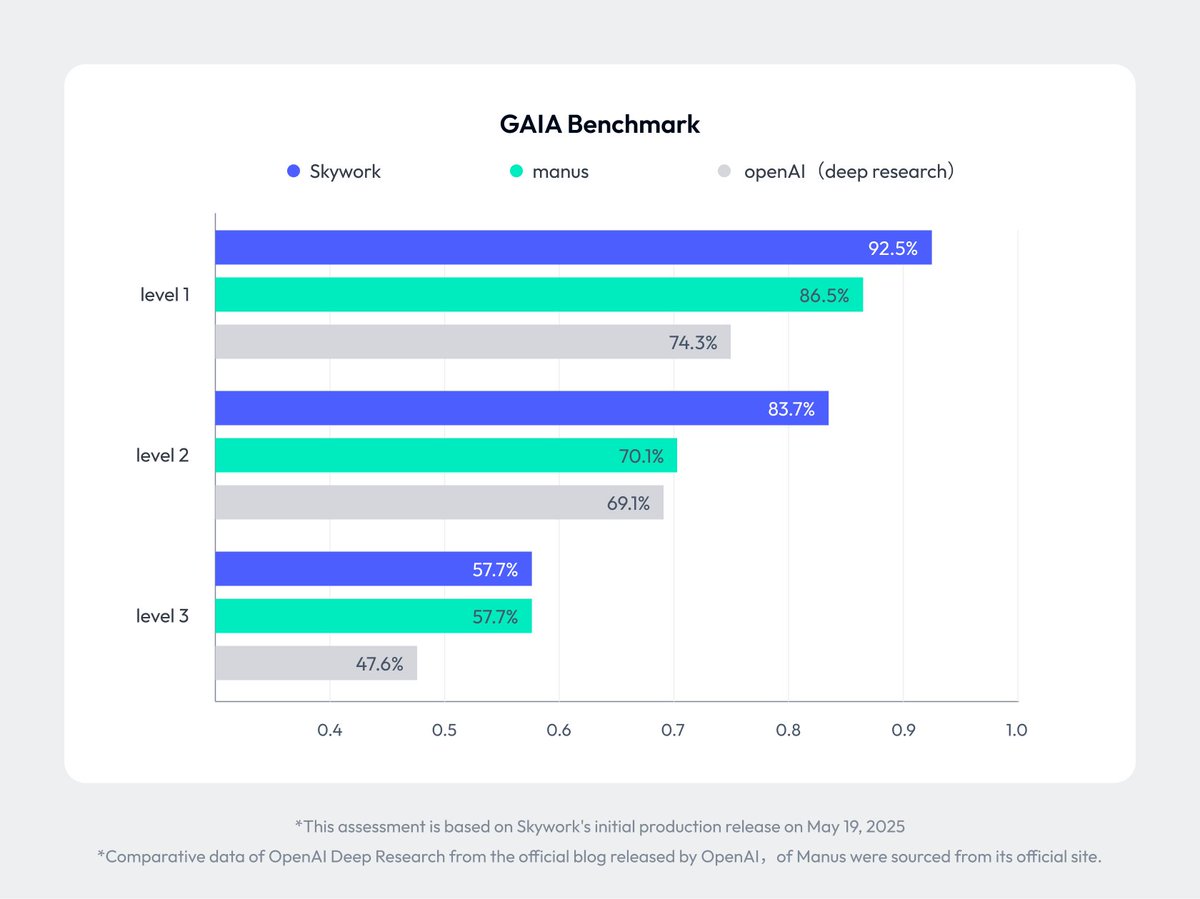

Skywork goes deeper than anyone else.

Our Super Agents boast unmatched deep research capabilities, surfacing 10x more source materials than competitors, while delivering professional-grade results at 40% lower cost than OpenAI.

We're proud to lead the GAIA Agent Leaderboard!

4/11

@Skywork_ai

Skywork offers seamless online editing for its outputs, especially slides. Easily export to local files or Google Workspace. Plus, integrate your private knowledge base for hyper-relevant content!

https://video.twimg.com/amplify_video/1925197054122553344/vid/avc1/2560x1440/kuoBpM3lIJcm4wfh.mp4

5/11

@Skywork_ai

Trust is key. Skywork delivers trusted, traceable results. Every piece of generated content can be traced back to the source paragraphs, so you can verify and use it with confidence.

https://video.twimg.com/amplify_video/1925197447682506752/vid/avc1/2560x1440/sDofyr7oSVQ7jwUV.mp4

6/11

@Skywork_ai

Calling all developers! Skywork is releasing the world’s first open-source deep research agent framework, along with 3 MCP (Model Context Protocol) servers for docs, sheets, and slides. Integrate and extend!

https://video.twimg.com/amplify_video/1925197658232438785/vid/avc1/2560x1440/2ib4rKBPlEHT8m-O.mp4

7/11

@Skywork_ai

Check out some cool examples from Skywork users:

Analysis of NVIDIA Stock: The Originator of AI Workspace Agents

Tesla Cybertruck Competitive Analysis: The Originator of AI Workspace Agents

Family Budget Overview: The Originator of AI Workspace Agents

Try it now: The Originator of AI Workspace Agents

8/11

@mhdfaran

This is huge. Congrats on the launch.

9/11

@Skywork_ai

Thank you so much! We’re beyond excited to finally share Skywork with the world.

10/11

@samuelwoods_

Turning 8 hours into 8 minutes sounds like a massive productivity leap

11/11

@Skywork_ai

It really is a game changer! Let’s gooo!