LLMs Struggle with Real Conversations: Microsoft and Salesforce Researchers Reveal a 39% Performance Drop in Multi-Turn Underspecified Tasks

www.marktechpost.com

By Nikhil

May 16, 2025

Conversational artificial intelligence is centered on enabling large language models (LLMs) to engage in dynamic interactions where user needs are revealed progressively. These systems are widely deployed in tools that assist with coding, writing, and research by interpreting and responding to natural language instructions. The aspiration is for these models to flexibly adjust to changing user inputs over multiple turns, adapting their understanding with each new piece of information. This contrasts with static, single-turn responses and highlights a major design goal: sustaining contextual coherence and delivering accurate outcomes in extended dialogues.

A persistent problem in conversational AI is the model’s inability to handle user instructions distributed across multiple conversation turns. Rather than receiving all necessary information simultaneously, LLMs must extract and integrate key details incrementally. However, when the task is not specified upfront, models tend to make early assumptions about what is being asked and attempt final solutions prematurely. This leads to errors that persist through the conversation, as the models often stick to their earlier interpretations. The result is that once an LLM makes a misstep in understanding, it struggles to recover, resulting in incomplete or misguided answers.

Most current benchmarks evaluate LLMs using single-turn, fully specified prompts, where all task requirements are presented in one go. Even in research claiming multi-turn analysis, the conversations are typically episodic, treated as a series of isolated subtasks rather than an evolving flow. These evaluations fail to account for how models behave when the information is fragmented and context must be actively constructed from multiple exchanges. Consequently, they often miss the core difficulty models face: integrating underspecified inputs over several conversational turns without explicit direction.

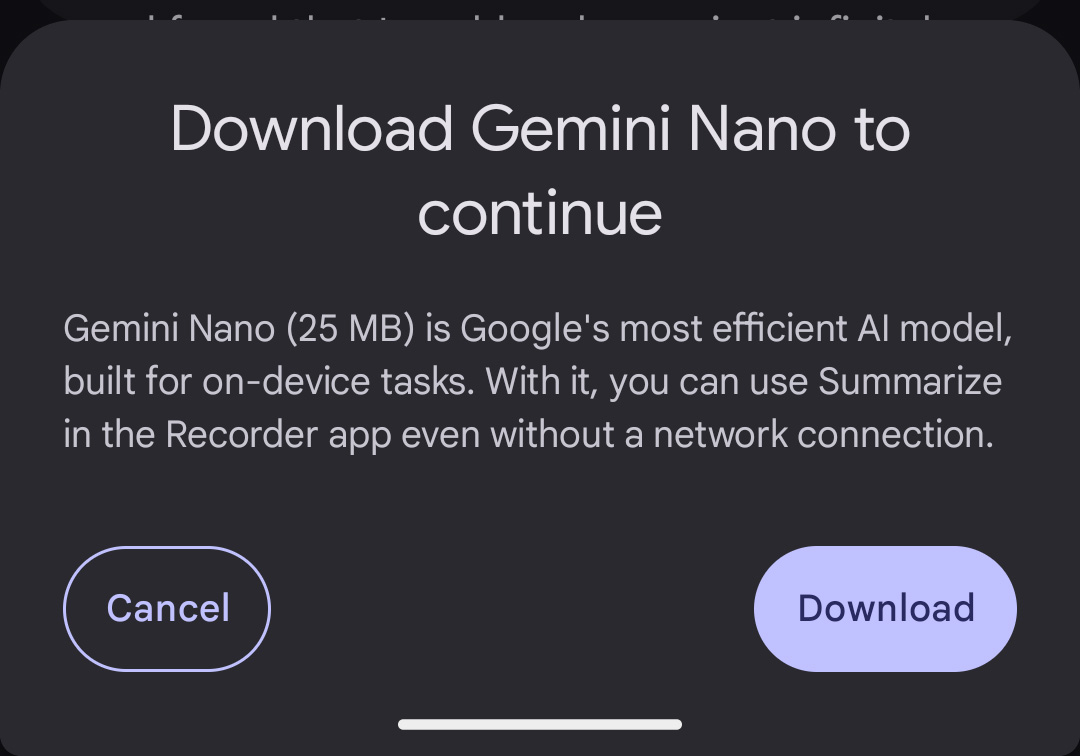

Researchers from Microsoft Research and Salesforce Research introduced a simulation setup that mimics how users reveal information in real conversations. Their “sharded simulation” method takes complete instructions from high-quality benchmarks and splits them into smaller, logically connected parts or “shards.” Each shard delivers a single element of the original instruction, and the shards are revealed sequentially over multiple turns. This simulates the progressive disclosure of information that happens in practice. The setup includes a simulated user powered by an LLM that decides which shard to reveal next and rephrases it naturally to fit the ongoing context. It also uses classification mechanisms to determine whether each assistant response attempts a solution or asks for clarification, further refining the simulation of genuine interaction.
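The turn-by-turn reveal described above can be sketched in a few lines. This is a minimal illustration, not the researchers' implementation: the class and function names are hypothetical, the shards are revealed in a fixed order with no rephrasing, and both the assistant and the response classifier are toy stand-ins for what the study does with LLMs.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class ShardedConversation:
    """Reveals one shard of an instruction per user turn (illustrative only)."""
    shards: List[str]
    revealed: List[str] = field(default_factory=list)

    def next_user_turn(self) -> Optional[str]:
        """Return the next unrevealed shard, or None once all are revealed."""
        if len(self.revealed) == len(self.shards):
            return None
        shard = self.shards[len(self.revealed)]
        self.revealed.append(shard)
        return shard


def classify_response(text: str) -> str:
    """Toy stand-in for the response classifier: a reply ending in a
    question mark counts as a clarification request, anything else as
    an answer attempt."""
    return "clarification" if text.strip().endswith("?") else "answer_attempt"


def toy_assistant(context: List[str], total_shards: int) -> str:
    """Hypothetical assistant that asks for more until it has seen every shard."""
    if len(context) < total_shards:
        return "Could you tell me more about what you need?"
    return "Here is a solution using: " + "; ".join(context)


def simulate(shards: List[str]) -> List[Tuple[str, str, str]]:
    """Run one sharded conversation; log (user, assistant, label) per turn."""
    convo = ShardedConversation(shards)
    log = []
    while (user_msg := convo.next_user_turn()) is not None:
        reply = toy_assistant(convo.revealed, len(shards))
        log.append((user_msg, reply, classify_response(reply)))
    return log


if __name__ == "__main__":
    example = ["Write a function", "It takes a list of numbers", "Return their sum"]
    for user_msg, reply, label in simulate(example):
        print(f"user: {user_msg}\nassistant ({label}): {reply}\n")
```

The toy assistant here behaves ideally by waiting for every shard. The failure mode the article describes corresponds to an assistant that returns an answer attempt on an early turn and then fails to revise it.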

The framework simulates five types of conversations, including single-turn full instructions and several multi-turn setups. In SHARDED simulations, LLMs received instructions one shard at a time, so the full task was available only after the final shard had been revealed. The study evaluated 15 LLMs across six generation tasks: coding, SQL queries, API actions, math problems, data-to-text descriptions, and document summaries. The tasks drew on established datasets such as GSM8K, Spider, and ToTTo. For every LLM and instruction, 10 simulations were conducted, totaling over 200,000 simulations. Aptitude, unreliability, and average performance were computed using a percentile-based scoring system, allowing direct comparison of best- and worst-case outcomes per model.
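A percentile-based summary of repeated simulations might look like the sketch below. The article only says the scoring is percentile-based, so the specific cut-offs are an assumption here: aptitude is taken as the 90th-percentile score and unreliability as the gap between the 90th and 10th percentiles. The function names are illustrative.

```python
import statistics
from typing import Dict, List


def percentile(scores: List[float], p: float) -> float:
    """Linear-interpolation percentile for p in [0, 100]."""
    if not scores:
        raise ValueError("scores must not be empty")
    ordered = sorted(scores)
    rank = (len(ordered) - 1) * p / 100
    lower = int(rank)
    upper = min(lower + 1, len(ordered) - 1)
    return ordered[lower] + (ordered[upper] - ordered[lower]) * (rank - lower)


def summarize(scores: List[float]) -> Dict[str, float]:
    """Summarize the scores of repeated simulations of one instruction.

    Assumed definitions (not stated in the article):
      aptitude      = 90th-percentile score (best-case behavior)
      unreliability = 90th minus 10th percentile (best-to-worst gap)
    """
    best = percentile(scores, 90)
    worst = percentile(scores, 10)
    return {
        "average": statistics.mean(scores),
        "aptitude": best,
        "unreliability": best - worst,
    }


if __name__ == "__main__":
    # Ten hypothetical scores for one model on one instruction.
    runs = [100, 100, 90, 80, 100, 20, 60, 100, 0, 90]
    print(summarize(runs))
```

Under these definitions, a model can keep a high aptitude while its unreliability grows, which is the pattern the study reports for multi-turn conversations.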

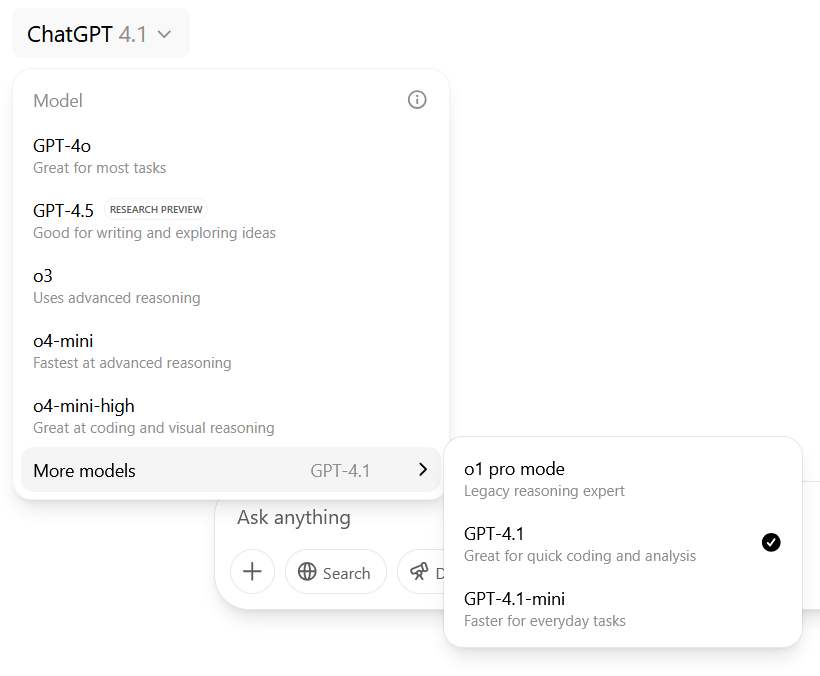

Across all tasks and models, a consistent decline in performance was observed in the SHARDED setting. On average, performance dropped from 90% in single-turn to 65% in multi-turn scenarios, a decline of 25 percentage points. The main cause was not reduced capability but a dramatic rise in unreliability. While aptitude dropped by 16%, unreliability increased by 112%, revealing that models varied wildly in how they performed when information was presented gradually. For example, even top-performing models like GPT-4.1 and Gemini 2.5 Pro exhibited average degradations of 30-40%. Additional compute at generation time or lower randomness (temperature settings) offered only minor improvements in consistency.
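The figures in this paragraph mix two kinds of change: a difference in percentage points and a relative percentage change. The short sketch below separates the two, using only the 90% and 65% averages quoted above; the function names are illustrative.

```python
def absolute_drop(before: float, after: float) -> float:
    """Difference in percentage points between two scores."""
    return before - after


def relative_drop(before: float, after: float) -> float:
    """Decline expressed as a percentage of the starting score."""
    if before == 0:
        raise ValueError("before must be non-zero")
    return (before - after) / before * 100


if __name__ == "__main__":
    single_turn, multi_turn = 90.0, 65.0
    print(f"absolute drop: {absolute_drop(single_turn, multi_turn):.1f} points")
    print(f"relative drop: {relative_drop(single_turn, multi_turn):.1f}%")
```

For these two averages the absolute drop is 25 points and the relative drop is about 27.8%, so it matters which convention a reported degradation figure uses.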

This research clarifies that even state-of-the-art LLMs are not yet equipped to manage complex conversations where task requirements unfold gradually. The sharded simulation methodology effectively exposes how models falter in adapting to evolving instructions, highlighting the urgent need to improve reliability in multi-turn settings. Enhancing the ability of LLMs to process incomplete instructions over time is essential for real-world applications where conversations are naturally unstructured and incremental.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.